In the realm of SEO, as Bill Hunt once put it so well, you have to get the basics right before you even begin to think about innovating.

A foundation allows you to put your best foot forward. If you try to build or add on to your house with a weak foundation, you’re only setting yourself up for disaster.

On the other hand, when you get the basics right, everything you add to your house (or, in this instance, your campaign) will have more impact.

Here are three areas to audit on local sites to create your strong SEO foundation.

1. Technical Audit

Everything starts with an in-depth technical audit. If a site is full of technical issues that haven’t been addressed, you can’t expect to see much, if any, improvement from your ongoing efforts.

If you inherited the work for this site from a previous webmaster, performing an audit will reveal exactly what you’re working with.

You’ll also have the opportunity to fix items that may be harming the site. It’s important to address these issues early so that search engines can begin to pick up the changes you’ve made to the site.

On the other end of the spectrum are sites that are entirely new and still in the development phase.

Performing a technical audit pre-launch gives you an opportunity to make sure you’re launching the best possible new website. Doing this before launch matters because you don’t want crawlers to run into significant hurdles the first time they crawl the site.

Most modern SEO tools offer some level of auditing. I’m a big fan of having multiple perspectives when auditing on-page and off-page signals.

If you can, use a few different SEO tools when performing your audits. It’s entirely up to you what tools to use for your technical audit. My gold standards are Screaming Frog, SEMrush, Ahrefs, and DeepCrawl.

Common technical issues you might find during your audit include:

Internal Links

A strong internal linking strategy can make or break a site. Internal links act as a second form of navigation for users and crawlers.

If there are issues with how pages are linking to each other on your site, the site won’t live up to its full potential.

The two most significant issues you will likely run into when looking at internal links are broken links and internal redirects. Both create significant inefficiencies for crawlers and users as they follow these links.

Most of the time, you will run into these issues after the site has gone through a major migration, like moving to HTTPS or changing the site’s content management system (CMS).

Broken links are a fairly straightforward issue: when you follow the link, you don’t arrive at the page you were trying to reach. It’s pretty cut and dried.

You want to identify where this content is now being served on the site and update these links. In cases where the content no longer exists on the site, remove the link.
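The triage described above can be sketched in a few lines of Python. This is a minimal sketch, assuming you have exported internal links from your crawler as (source page, target URL, status code) rows and have built a `moved` mapping of old URLs to where that content now lives. `LinkRow`, `moved`, and `triage_broken_links` are hypothetical names for illustration, not part of any tool’s API.

```python
from typing import NamedTuple


class LinkRow(NamedTuple):
    """One internal link from a crawl export (hypothetical format)."""
    source: str  # page that contains the link
    target: str  # URL the link points to
    status: int  # HTTP status code the target returned during the crawl


def triage_broken_links(rows: list[LinkRow], moved: dict[str, str]) -> list[tuple[str, str, str]]:
    """Return (source, target, action) for each link whose target returned 404 or 410.

    `moved` is a hypothetical mapping of old URLs to the URL where that content
    now lives. If the content moved, update the link; otherwise, remove it.
    """
    actions = []
    for row in rows:
        if row.status not in (404, 410):
            continue  # only broken links here; redirects are handled separately
        new_url = moved.get(row.target)
        if new_url:
            actions.append((row.source, row.target, f"update link to {new_url}"))
        else:
            actions.append((row.source, row.target, "remove link"))
    return actions


crawl_rows = [
    LinkRow("/", "/services/", 200),
    LinkRow("/", "/old-team-page/", 404),
    LinkRow("/blog/", "/2017-promo/", 404),
]
moved = {"/old-team-page/": "/about/team/"}
for action in triage_broken_links(crawl_rows, moved):
    print(action)
```

The first broken link gets pointed at the content’s new home, while the second, whose content no longer exists, is simply removed.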

If the page has a new URL, you should also set up a redirect from the old URL. The redirect acts as a safety net for any internal links you may miss, and it continues to pass equity from any off-site links the old URL may have earned.

Remember, do not spam redirects. If an old page’s content is no longer housed anywhere else on the site, it’s better to let the page 404, rather than pointing it to a random new page.

Spamming your redirects could potentially cause you problems with Google, which you certainly don’t want.

The other major internal linking issue you can run into is internal redirects. As I mentioned earlier, from an on-site perspective, redirects should act as a safety net rather than the way your internal links normally resolve.

While redirects still pass equity from page to page, they aren’t efficient. You want your internal links to resolve directly to their final destination URL, not hop through a chain of two or more URLs.
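To find internal links that hop through redirects, you can follow each link through a redirect map until it stops redirecting. Here is a minimal Python sketch, assuming a hypothetical `redirects` dictionary (source URL to redirect target) built from your crawler’s redirect report. `resolve_final_url` and `links_to_update` are illustrative helpers, and the 10-hop cap is an arbitrary safety limit, not a Google rule.

```python
def resolve_final_url(url: str, redirects: dict[str, str], max_hops: int = 10) -> tuple[str, int]:
    """Follow `redirects` from `url`; return (final_url, number_of_hops).

    `redirects` is a hypothetical map of source URL -> redirect target, e.g. built
    from a crawler's redirect report. Raises ValueError on loops or overly long chains.
    """
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError(f"redirect loop or chain longer than {max_hops} hops at {url}")
        seen.add(url)
    return url, hops


def links_to_update(internal_links: list[str], redirects: dict[str, str]) -> dict[str, str]:
    """Map every internal link that passes through a redirect to its final destination."""
    updates = {}
    for link in internal_links:
        final_url, hops = resolve_final_url(link, redirects)
        if hops > 0:
            updates[link] = final_url
    return updates


# Example: an HTTP -> HTTPS migration followed by a trailing-slash redirect.
redirects = {
    "http://www.example.com/services": "https://www.example.com/services",
    "https://www.example.com/services": "https://www.example.com/services/",
}
internal_links = ["http://www.example.com/services", "https://www.example.com/contact/"]
print(links_to_update(internal_links, redirects))
```

Any link in the result should be edited to point straight at its final URL; the redirect itself can stay in place as the safety net.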

Markup Implementation

Proper markup code implementation is incredibly important for every optimized website.

This code gives you the opportunity to provide search engines with even more detailed information about the pages on your site they are crawling.

In the case of a local-focused site, you want to make sure you are delivering as much information about the business as possible.

The first thing you should look at on the site is how the code is currently being used.

- What page is housing the location information?

- What type of local markup is being used?

- Is there room for improvement and additions to the data delivered?
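To answer these questions quickly for a single page, you can pull the JSON-LD blocks out of its HTML and list the types and properties they declare. This minimal Python sketch uses only the standard library’s `html.parser` and `json` modules. It assumes the markup is JSON-LD (not Microdata or RDFa) and does not unpack `@graph` containers; `JsonLdExtractor` and `summarize_markup` are hypothetical helper names.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect parsed JSON from every <script type="application/ld+json"> block."""

    def __init__(self) -> None:
        super().__init__()
        self._in_jsonld = False
        self._buffer: list[str] = []
        self.blocks: list[dict] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            data = json.loads("".join(self._buffer))
            # A block may hold a single object or an array of objects.
            self.blocks.extend(data if isinstance(data, list) else [data])


def summarize_markup(html: str) -> list[tuple[object, list[str]]]:
    """Return (@type, sorted property names) for each JSON-LD object on a page."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    return [
        (block.get("@type", "?"), sorted(key for key in block if not key.startswith("@")))
        for block in parser.blocks
    ]


page = """<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "LocalBusiness",
 "name": "Example Law Firm", "telephone": "+1-217-555-0100"}
</script>
</head><body></body></html>"""
print(summarize_markup(page))
```

Here the page uses generic `LocalBusiness` markup with only a name and phone number, which immediately answers the second and third questions: there is room for a more specific type and more properties.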

The delivery of location information, specifically with NAP info (name, address, and phone number), will depend on the type of business you’re dealing with.

Single-location and multi-location businesses will utilize different architecture styles. We’ll discuss this a bit later.

When possible, it’s essential to use service-specific local markup.

For example, if you’re a local law firm, the site should use the LegalService markup rather than the generic LocalBusiness schema.

By using a more descriptive version of local schema markup, you’ll give crawlers a better understanding of the services your business offers.

Combined with a well-targeted keyword strategy, this markup makes it easier for search engines to rank the site in relevant local searches, including maps. A full list of service-specific local schema types can be found at schema.org.
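As an illustration, here is a minimal Python sketch that assembles LegalService JSON-LD for a hypothetical law firm. `LegalService`, `PostalAddress`, and the property names are schema.org vocabulary; the helper function, the business details, and the `"US"` country default are placeholders for illustration only.

```python
import json


def legal_service_jsonld(name: str, street: str, city: str, region: str,
                         postal_code: str, phone: str, url: str,
                         country: str = "US") -> dict:
    """Build a schema.org LegalService object (a LocalBusiness subtype) as a dict."""
    return {
        "@context": "https://schema.org",
        "@type": "LegalService",
        "name": name,
        "url": url,
        "telephone": phone,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
            "addressCountry": country,  # placeholder default; set to the business's country
        },
    }


# Placeholder business details, not a real firm.
markup = legal_service_jsonld(
    name="Example Law Firm",
    street="123 Main St, Suite 200",
    city="Springfield",
    region="IL",
    postal_code="62701",
    phone="+1-217-555-0100",
    url="https://www.example.com/",
)
print('<script type="application/ld+json">')
print(json.dumps(markup, indent=2))
print("</script>")
```

The printed `<script>` element is what would go on the page that houses the location information. Keeping the NAP values in one place like this also makes it easier to keep them identical to your citations.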

It’s important to give as much appropriate information in your markup code as possible. When looking at how a site is using markup code, make sure you’re constantly looking for ways to improve.

See if there is business information that you can add to make the code you are delivering even more well-rounded.

Once you have updated or improved your site’s markup data, make sure you validate it in Google’s Structured Data Testing tool. This will let you know if there are any errors you need to address or if there are even more ways to improve this code.

Crawl Errors in GSC

One of the best tools an SEO can have in their corner is Google Search Console. It basically acts as a direct line of communication with Google.

One of the first things I do when working with a new site is to dive into GSC. You want to know how Google is crawling your site and if the crawlers are running into any issues that could be holding you back.

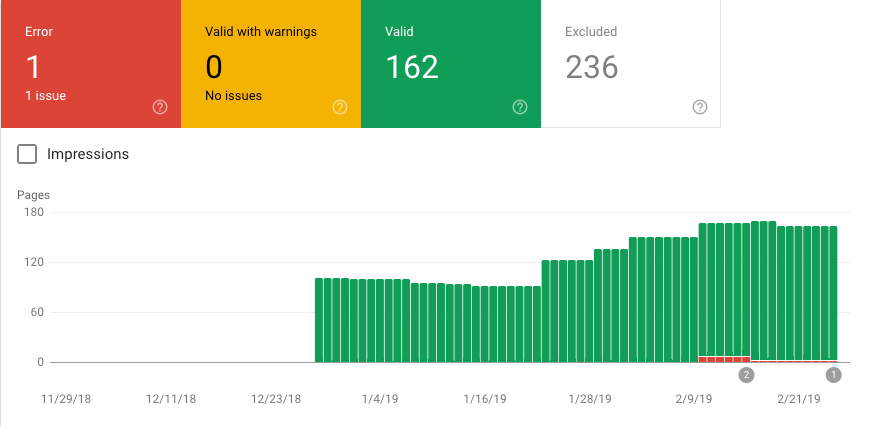

This is where the Coverage report comes into play. The Coverage report in the new Google Search Console dashboard gives you an enormous wealth of crawl information for your site.

Not only will you see how Google has indexed your site over the selected time period, but you’ll also see what errors Googlebot has encountered.

One of the most common issues you’ll come across will be any internal redirects or broken links found during the crawl. These should have been addressed during your initial site audit, but it’s always good to double check these.

Once you’re sure these issues are resolved, you can submit them to Google for validation so that Google can recrawl the affected URLs and see your fixes.

Another important area of GSC to make sure you visit during your audit is the sitemap section. You want to make sure that your sitemaps have been submitted and aren’t returning any errors.

A sitemap acts as a roadmap for Google on how a site should be crawled. When you submit it to Google, it’s crucial that it is the most up-to-date, accurate version possible and that it lists only the URLs you want crawled and indexed.
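One way to keep a sitemap limited to URLs you actually want indexed is to generate it from crawl data and filter out everything else. This minimal Python sketch assumes a hypothetical `PageInfo` record per crawled URL (status code, noindex flag, declared canonical). It keeps only 200-status, indexable, self-canonical URLs and writes the `urlset`/`url`/`loc` structure from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET
from typing import NamedTuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


class PageInfo(NamedTuple):
    """Hypothetical per-URL crawl record."""
    url: str
    status: int      # HTTP status code returned during the crawl
    noindex: bool    # True if the page carries a noindex directive
    canonical: str   # canonical URL the page declares


def build_sitemap(pages: list[PageInfo]) -> str:
    """Return sitemap XML listing only 200-status, indexable, self-canonical URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        if page.status != 200 or page.noindex or page.canonical != page.url:
            continue  # skip redirects, errors, noindexed pages, and canonicalized duplicates
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = page.url
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


pages = [
    PageInfo("https://www.example.com/", 200, False, "https://www.example.com/"),
    PageInfo("https://www.example.com/services/", 200, False, "https://www.example.com/services/"),
    PageInfo("https://www.example.com/old-page/", 301, False, "https://www.example.com/old-page/"),
    PageInfo("https://www.example.com/thank-you/", 200, True, "https://www.example.com/thank-you/"),
    PageInfo("https://www.example.com/services/?ref=ad", 200, False, "https://www.example.com/services/"),
]
print(build_sitemap(pages))
```

Only the homepage and the services page survive the filter; the redirected, noindexed, and parameterized URLs stay out of the file you submit.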

As you are resolving these errors and submitting them for validation, you should begin to see your total error count drop as Google continues to recrawl your site.

Check GSC often for any new errors so you can resolve them quickly.

Potential Server Issues

Much like Google Search Console, becoming best friends with the site’s hosting platform is always a good idea. It’s essential to do your due diligence when choosing a hosting platform.

In the past, I’ve run into issues where a local business I was working with was in the top three positions for their main keyword and also held several instant answer results. One day, the site suddenly lost it all.

Upon further investigation, we found that the issue came from an unnecessary open port on the server hosting the sites we were working with.

After consulting with our hosting provider and closing this port, we resubmitted the site to Google for indexing. Within 24 hours, the website was back at the top of the SERPs and had regained the instant answer features.

How quickly you can resolve an issue like this depends on the hosting provider you use.

Now, whenever I’m vetting a hosting platform, one of the first things I look at is what type of support they offer. I want to know that if I run into an issue, I’ll have someone in my corner that can help me resolve potential problems.

2. Strategy & Cannibalization

Now it’s time to make sure your on-page elements are all in place.

When it comes to local SEO, this can be a bit tricky, due to all the small moving pieces that make for a well-optimized site.

Even if your site is working well from a technical perspective, it still won’t perform at its highest potential without a strategy.

When creating content for a local site, it can be all too easy to cannibalize your own content. This is especially true for a website with a single-location focus.

It’s important to evaluate the keywords the site is ranking for and which pages are ranking for those keywords.

If you see those keywords fluctuate between multiple pages (and it wasn’t an intentional shift), that can indicate that search engines are confused about the topical focus of those pages.
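If your rank tracker can export ranking history, a quick script can surface keywords that have been ranked by more than one URL. This minimal Python sketch assumes hypothetical (date, keyword, ranking URL) rows; `find_cannibalization` only flags candidates, so you still need to judge whether each shift was intentional.

```python
from collections import defaultdict


def find_cannibalization(rows: list[tuple[str, str, str]]) -> dict[str, set[str]]:
    """Return keywords that more than one URL ranked for across the export.

    Each row is a hypothetical (date, keyword, ranking_url) record from a rank tracker.
    """
    urls_by_keyword: dict[str, set[str]] = defaultdict(set)
    for _date, keyword, url in rows:
        urls_by_keyword[keyword.strip().lower()].add(url)
    return {kw: urls for kw, urls in urls_by_keyword.items() if len(urls) > 1}


ranking_rows = [
    ("2019-03-01", "springfield divorce lawyer", "https://www.example.com/"),
    ("2019-03-08", "springfield divorce lawyer", "https://www.example.com/family-law/"),
    ("2019-03-15", "Springfield divorce lawyer", "https://www.example.com/"),
    ("2019-03-01", "springfield dui attorney", "https://www.example.com/dui/"),
    ("2019-03-08", "springfield dui attorney", "https://www.example.com/dui/"),
]
for keyword, urls in find_cannibalization(ranking_rows).items():
    print(keyword, sorted(urls))
```

In this example the divorce keyword flips between the homepage and the family law page, which is exactly the kind of fluctuation worth investigating, while the DUI keyword stays on one page.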

When working on a new site, taking a step back to evaluate the overall on-page strategy implemented on the site is crucial.

The approach to a single-location business can be vastly different from a multiple-location business.

Typically, a single-location business will use the homepage to target the location and its primary service while using silos to break down more services.

For a multi-location business, there are several strategies that can be used to target each location accurately and efficiently.

3. Off-Page Audit

Off-site signals help build your site’s authority. So it’s vital for these signals to be on point.

Here is where you need to put your focus.

Citations & NAP Consistency

Having consistency with NAP information across both the site and its citations will help build authority in multiple aspects of search results. This information backs up where your business is located.

Because the site is sending these signals to search engines consistently, the search engines will have an easier time understanding where to rank the business.

These signals are also crucial for better placement in maps for relevant local searches.
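Checking NAP consistency by eye gets tedious across dozens of citations. This minimal Python sketch compares each citation against the site’s canonical NAP after normalizing case, punctuation, common street abbreviations, and phone formatting. The abbreviation table is a hypothetical starting point, the directory names are placeholders, and the phone check assumes 10-digit North American numbers.

```python
import re

# Hypothetical abbreviation table; extend it with the variants you find in your citations.
ABBREVIATIONS = {"street": "st", "avenue": "ave", "road": "rd", "boulevard": "blvd", "suite": "ste"}


def normalize_text(text: str) -> str:
    """Lowercase, drop punctuation, and collapse common street abbreviations."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return " ".join(ABBREVIATIONS.get(word, word) for word in words)


def normalize_phone(phone: str) -> str:
    """Keep only the last 10 digits (assumes North American numbers)."""
    return re.sub(r"\D", "", phone)[-10:]


def nap_mismatches(canonical: dict[str, str], citation: dict[str, str]) -> list[str]:
    """Return the NAP fields on a citation that don't match the canonical listing."""
    normalizers = {"name": normalize_text, "address": normalize_text, "phone": normalize_phone}
    return [
        field
        for field, normalize in normalizers.items()
        if normalize(citation.get(field, "")) != normalize(canonical[field])
    ]


canonical_nap = {
    "name": "Example Law Firm",
    "address": "123 Main Street, Suite 200, Springfield, IL 62701",
    "phone": "(217) 555-0100",
}
citations = {
    "directory-a": {"name": "Example Law Firm",
                    "address": "123 Main St., Ste 200, Springfield, IL 62701",
                    "phone": "217.555.0100"},
    "directory-b": {"name": "Example Law Firm LLC",
                    "address": "45 Oak Avenue, Springfield, IL 62701",
                    "phone": "+1 217 555 0100"},
}
for site, listing in citations.items():
    print(site, nap_mismatches(canonical_nap, listing))
```

Formatting differences such as "St." versus "Street" are ignored, while a different business name or an old address is flagged for cleanup.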

I like to begin citation cleanup in tandem with my technical and strategy audits because it can take a bit of time for these issues to resolve themselves.

It’s a time-consuming process to pull these citations, gain access or reach out to these sites, and ensure corrections are made.

For this reason, I use citation services (e.g., Yext, Whitespark, BrightLocal, Moz Local) to help do this work for me.

This allows the corrections to start taking hold and to be picked up by search engine crawlers while other on-site items are being repaired or improved.

Backlinks

For my money, I still believe there is value in auditing your backlinks and submitting a disavow file for undeniably toxic links.

Why? I’ve always approached local link building almost like PR, judging each link by its benefit to the user.

A local business site should look at a link and answer this simple question: do I want my business to be associated with this external site?

Looking at a link from this perspective will allow you to make sure the site you are working on has a clean link profile that helps its organic search rankings.
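Once you’ve made the call on which links are undeniably toxic, the disavow file itself is plain text: one full URL or `domain:` entry per line, with `#` marking comment lines. This minimal Python sketch assembles that text from lists you have already reviewed by hand. `build_disavow_file` is a hypothetical helper, the domains are placeholders, and the code makes no judgment about which links are toxic.

```python
from datetime import date


def build_disavow_file(toxic_domains: list[str], toxic_urls: list[str], reviewed_on: date) -> str:
    """Return disavow-file text from lists of links you have already judged toxic.

    Format: '#' starts a comment line, 'domain:' entries cover a whole domain, and
    any other line is a single full URL.
    """
    lines = [f"# Undeniably toxic links, reviewed manually on {reviewed_on.isoformat()}"]
    for domain in sorted({d.strip().lower() for d in toxic_domains}):
        lines.append(f"domain:{domain}")
    for url in sorted({u.strip() for u in toxic_urls}):
        lines.append(url)
    return "\n".join(lines) + "\n"


disavow_text = build_disavow_file(
    toxic_domains=["spammy-links.example", "Spammy-Links.example "],
    toxic_urls=["http://link-farm.example/page1.html"],
    reviewed_on=date(2019, 3, 1),
)
print(disavow_text, end="")
```

Deduplicating and dating the entries keeps the file easy to review the next time you audit the link profile.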

Build Your Local SEO House

Auditing and correcting any issues you find in these three main areas will help you create a much stronger foundation for your website and future SEO efforts.

Now you can start moving into the fun and creative side of marketing to gain more organic traffic.

Happy auditing!

Image Credits

Screenshot taken by author, March 2019