It’s spring, and that means it’s time to peer into your site to find the often-overlooked items that drag down natural search performance. In our homes, we tend to put off cleaning the closets, dusting the baseboards, and clearing the spider webs out of the hard-to-reach places. Similarly, some aspects of search engine optimization we tend to put off for another day.

Today is the day for SEO spring cleaning! These six steps will make your site shine, and boost its natural search performance in the process.

6 Spring Cleaning Tips for SEO

Inspect your site. Start the process with a crawl of your site to collect the data you’ll need. Use a crawler such as DeepCrawl (which is especially helpful for sites built on Angular, the front-end platform) or Screaming Frog.

Crawlers help you identify all manner of metadata and site errors, as well as the server header status for each page. Each of these data points will identify an area that needs attention.

Eliminate duplicate metadata. Making each title tag and meta description unique is an easy and rewarding place to start. Your crawl data will show you which pages have the same title tag and meta description. Simply write new ones for the metadata that are the same.
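As a rough illustration of how you might spot the duplicates in a crawl export, here is a minimal Python sketch. The row layout (`url`, `title`, `description`) and the sample pages are assumptions for the example, not the export format of any particular crawler.

```python
from collections import defaultdict

def find_duplicates(pages, field):
    """Group URLs by the value of `field`, keeping values shared by 2+ pages."""
    groups = defaultdict(list)
    for page in pages:
        # Normalize so that case and stray whitespace don't hide duplicates.
        value = (page.get(field) or "").strip().lower()
        if value:  # pages with missing metadata are a separate problem
            groups[value].append(page["url"])
    return {value: urls for value, urls in groups.items() if len(urls) > 1}

# Hypothetical rows from a crawl export.
pages = [
    {"url": "/shoes", "title": "Shoes | Example Store", "description": "Shop shoes."},
    {"url": "/shoes?page=2", "title": "Shoes | Example Store", "description": "Shop shoes."},
    {"url": "/hats", "title": "Hats | Example Store", "description": "Shop hats."},
]

duplicate_titles = find_duplicates(pages, "title")
duplicate_descriptions = find_duplicates(pages, "description")
```

Each key in the result is a shared title or description, and each value is the list of URLs that need new, unique copy.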

Take special care with the title tags because they are still the single most important on-page SEO element. Each title tag should be unique, begin with the most valuable keyword themes, and end with your site or brand name — all maxed out at around 65 characters.
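Those title tag guidelines are easy to check in bulk. The sketch below is one possible way to do it, assuming a 65-character limit (the approximate figure above) and a hypothetical brand name; it does not try to judge keyword placement, which still needs a human eye.

```python
MAX_TITLE_LENGTH = 65  # approximate limit mentioned in the article

def audit_title(title, brand):
    """Return a list of issues found in a single title tag."""
    issues = []
    if len(title) > MAX_TITLE_LENGTH:
        issues.append("too long")
    if not title.rstrip().lower().endswith(brand.lower()):
        issues.append("missing brand at end")
    return issues

# "Example Store" is a made-up brand used only for illustration.
good = audit_title("Running Shoes for Men | Example Store", "Example Store")
bad = audit_title("Example Store - " + "x" * 60, "Example Store")
```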

Meta descriptions, while not a ranking factor, affect click-through rates from the search results to your site. They can reach over 300 characters in Google’s search results, but many are still truncated at about 155 characters. To be safe, include your major keyword themes and call to action in the first 155 characters, and use the remaining characters to embellish.
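To check whether the important parts of a description survive truncation, something like the following sketch could work. The 155-character safe zone comes from the guideline above; the function name and sample phrases are hypothetical.

```python
SAFE_LENGTH = 155  # characters likely to be shown even when truncated

def missing_from_safe_zone(description, phrases):
    """Return the phrases that do not appear within the first 155 characters."""
    safe_zone = description[:SAFE_LENGTH].lower()
    return [phrase for phrase in phrases if phrase.lower() not in safe_zone]

# Made-up description whose call to action falls after the cutoff.
description = "Shop waterproof hiking boots. " + "x" * 150 + " Order today."
missing = missing_from_safe_zone(description, ["hiking boots", "Order today"])
```

Any phrase returned here is one that searchers may never see, so move it closer to the front of the description.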

While you’re at it, kill the meta keywords. They haven’t been used in rankings or had any other SEO value in the last nine years. First check to see if your internal site search engine uses them. If it doesn’t, ask your developers to remove them from your content management system and your site.

Redirect broken links. In addition to your crawler data, the Crawl Errors report in Google Search Console — Search Console > Crawl > Crawl Errors — is an excellent place to collect data around pages that need to be redirected. Both will tell you which pages are returning a 404 file-not-found status error, which indicates that the page no longer exists. The only way a non-existent page registers in these tools is if your crawler or Google finds a link to it, attempts to crawl the page, and receives the 404 message.

Each broken link represents an opportunity to salvage the link authority that the page has collected over its lifespan. Designate a new URL that each 404 page should 301 redirect to, and pass that list to your developer team to implement.
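One way to assemble that list for your developers is sketched below. It assumes crawl rows with `url` and `status` fields and a hand-built dictionary of replacement URLs; both layouts, and the CSV column names, are illustrative choices rather than a required format.

```python
import csv
import io

def build_redirect_list(crawl_rows, replacements):
    """Pair each 404 URL with its designated new URL; report the rest as unmapped."""
    mapped, unmapped = [], []
    for row in crawl_rows:
        if row["status"] != 404:
            continue
        target = replacements.get(row["url"])
        if target:
            mapped.append((row["url"], target))
        else:
            unmapped.append(row["url"])  # still needs a human decision
    return mapped, unmapped

def to_csv(mapped):
    """Render the mapped pairs as CSV text to hand to the developer team."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["old_url", "new_url", "status"])
    for old_url, new_url in mapped:
        writer.writerow([old_url, new_url, 301])
    return buffer.getvalue()

# Hypothetical crawl data and replacement choices.
crawl_rows = [
    {"url": "/old-boots", "status": 404},
    {"url": "/boots", "status": 200},
    {"url": "/old-sale", "status": 404},
]
replacements = {"/old-boots": "/boots"}

mapped, unmapped = build_redirect_list(crawl_rows, replacements)
redirect_csv = to_csv(mapped)
```

The unmapped list is the to-do list: every URL in it is a 404 that nobody has assigned a destination yet.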

The same should be done with “soft” 404 errors, which look like true 404s but are really just 302 temporary redirects that pass the user to a page that looks like an error page. A soft 404 has none of the index-cleaning benefits of a true 404 error or a 301 redirect. A soft 404 only ensures that both the fake error page and the URL for the broken page will continue to live on in the index. Soft 404 errors in the Search Console Crawl Errors report should also be paired with a new URL to 301 redirect to.

A few teams will have the ability to implement 301 redirects directly in the content management system. If you’re one of those teams, prioritize this 301 redirect activity.

Break the redirect chains. As more and more pages become obsolete over the years, chains of 301 redirects form: one URL redirecting to another, redirecting to another, and so on. These chains require multiple server requests to execute, waste server resources, and slow down page load times. Your crawler can produce a report of the redirect chains. Share that report with your developer team and request that each old chain be replaced by a single redirect from the first URL to the last one.
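Collapsing a chain is simple to express in code. The sketch below assumes the chain report has been loaded into a dictionary of source URL to redirect target (a hypothetical layout) and points every source straight at the final destination, raising an error if it finds a loop.

```python
def flatten_redirects(redirects):
    """Map every source URL directly to the final URL in its chain."""
    flattened = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Follow the chain until we reach a URL that no longer redirects.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened

# Hypothetical three-hop chain: /a -> /b -> /c -> /d
chain = {"/a": "/b", "/b": "/c", "/c": "/d"}
flattened = flatten_redirects(chain)
```

After flattening, `/a`, `/b`, and `/c` each redirect to `/d` in a single hop.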

Sweep the dust bunnies out of your index. Google’s index, and the indexes of the other search engines, get cluttered with the remnants of obsolete pages. These digital dust bunnies will stay in the index until you sweep them out with 301 redirects.

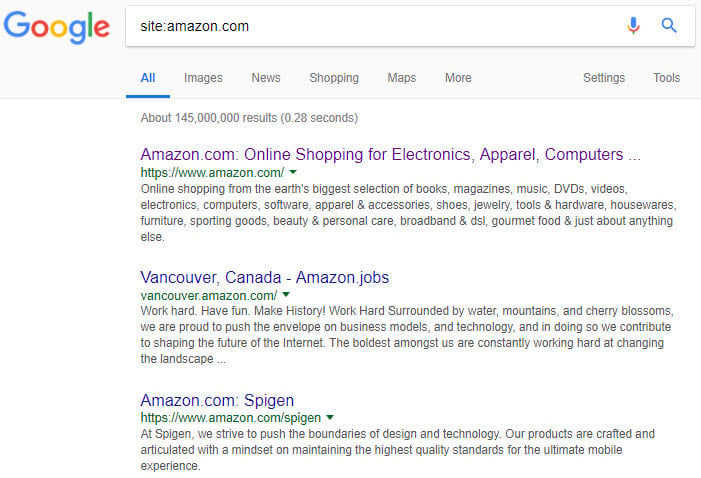

To find the pages you need to 301 redirect because they’re no longer useful, do a site query like the one below. Simply add any domain after “site:” and see what Google is indexing for your site.

A site query for Amazon.com in Google’s web search.

To narrow down the number of pages, refine the site query with special operators or conditions — such as “inurl:” and “intitle:” as shown below. For a comprehensive list, see Google’s “Advanced Operators” page.

A site-specific query (site:) with multiple conditions (inurl: and intitle:) in Google’s web search.
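If you run these queries often, a small helper can build them for you. This is only a sketch: the function names and the sample `inurl:` and `intitle:` values are made up for illustration, and it handles single-word conditions only.

```python
from urllib.parse import quote_plus

def build_site_query(domain, inurl=None, intitle=None):
    """Combine site: with optional inurl: and intitle: conditions."""
    parts = [f"site:{domain}"]
    if inurl:
        parts.append(f"inurl:{inurl}")
    if intitle:
        parts.append(f"intitle:{intitle}")
    return " ".join(parts)

def google_search_url(query):
    """Turn a query string into a Google web search URL."""
    return "https://www.google.com/search?q=" + quote_plus(query)

query = build_site_query("amazon.com", inurl="help", intitle="returns")
url = google_search_url(query)
```

Paste the resulting query into Google, or open the URL, and review what comes back by hand.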

Spruce up low-performing pages. Your web analytics provides another window into tasks that need to be taken care of. We spend a lot of time focusing on the pages that perform well, but what about those that don’t?

Look at the data from a different angle. Identify pages that are performing poorly but should be driving much more natural search traffic. What’s holding them back?

If they’re supposed to be targeting valuable keyword themes but are attracting low volumes of natural search traffic, where did the optimization go wrong? Do they drive traffic but no conversions? If so, do they have conversion elements on them?
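Those two questions translate into a simple filter over an analytics export. The sketch below assumes rows with `url`, `sessions`, and `conversions` from natural search, and a threshold of 100 sessions; the field names and the threshold are arbitrary assumptions you would tune to your own site.

```python
def flag_underperformers(rows, min_sessions=100):
    """Label pages with low natural search traffic or traffic without conversions."""
    flagged = {}
    for row in rows:
        if row["sessions"] < min_sessions:
            flagged[row["url"]] = "low natural search traffic"
        elif row["conversions"] == 0:
            flagged[row["url"]] = "traffic but no conversions"
    return flagged

# Hypothetical natural search data for three pages.
rows = [
    {"url": "/boots", "sessions": 40, "conversions": 1},
    {"url": "/sandals", "sessions": 900, "conversions": 0},
    {"url": "/sneakers", "sessions": 1200, "conversions": 35},
]
flagged = flag_underperformers(rows)
```

Pages in the first group need an optimization review, while pages in the second group need a look at their conversion elements.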

Beyond Spring

Resolve to make your spring cleaning routine a regular part of your SEO life. Imagine if you cleaned your house only once per year. It would presumably impede your ability to live. Conduct SEO cleaning once a month, with a due date, to keep your site performing at its peak.