Google Duplex, one giant leap for AI … or another step towards the ultimate deep fake?

At the beginning of May, during the Google I/O 2018 keynote, Sundar Pichai presented Google Duplex.

That’s one small step for a man, one giant leap for mankind. Neil Armstrong, 20/7/1969

As you can see from the video below, Duplex is not only able to imitate natural speech (almost) perfectly, but it is also able to understand the context of the speech and adapt to the interlocutor.

In earlier posts on GANs and deep fakes, I described how current AI systems can reconstruct a person’s face, complete with facial expressions and lip-sync, by learning from footage of that person, and then make them deliver almost any speech thanks to WaveNet’s text-to-speech technology.

But it would seem that generating audio from pre-packaged texts is already history: WaveNet has now been given human voices, such as John Legend’s (below), in order to sound even more natural.

In the examples Pichai presented at the conference, Duplex was able to make several types of reservations while interacting appropriately with the person on the other end. The result (at least in these contexts) is indistinguishable from a human voice. Of course, the key for now was to restrict the system to a specific domain such as reservations. We are (for now) far from a system able to start and sustain conversations of a more general nature, not least because human conversation requires some common ground between the interlocutors in order to anticipate where the conversation is heading.

After all, even humans have great difficulty holding conversations on totally unfamiliar topics. Sure, the most self-confident can improvise, but improvisation is nothing more than an attempt to bring the dialogue back onto a more “comfortable” track.

How it works

Architecture

At the heart of Duplex there is a Recurrent Neural Network (RNN) built using TensorFlow Extended (TFX), which according to Google is a “general purpose” machine learning platform. The RNN was trained on a set of anonymized telephone conversations.

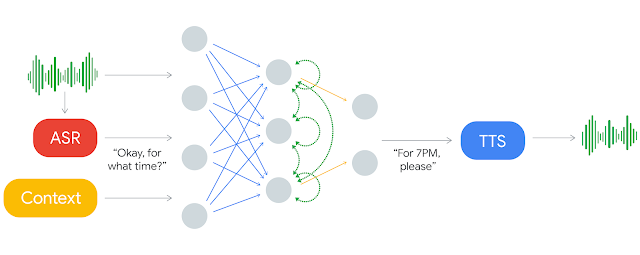

The other party’s speech is first converted into text by ASR (Automatic Speech Recognition). This text is then supplied as input to the Duplex RNN, together with features of the audio and the contextual parameters of the conversation (e.g. the type of appointment desired, the desired time, etc.). The output is the text of the sentences to be spoken, which is then “read aloud” via TTS (Text-To-Speech).
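The flow just described (speech to text, text plus context to a reply, reply to speech) can be sketched as a single conversational turn. This is only an illustrative sketch, not Google's implementation: `CallContext`, `run_turn` and the three `fake_*` stand-ins are hypothetical names invented here, the real ASR, RNN and TTS components are replaced by trivial functions, and the audio features that the RNN also receives are left out for brevity.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class CallContext:
    """Contextual parameters of the call (hypothetical fields)."""
    task: str                 # e.g. "restaurant reservation"
    desired_time: str         # e.g. "19:00"
    history: List[str] = field(default_factory=list)  # transcript so far


def run_turn(
    audio: bytes,
    ctx: CallContext,
    asr: Callable[[bytes], str],
    respond: Callable[[str, CallContext], str],
    tts: Callable[[str], bytes],
) -> bytes:
    """One turn: incoming speech -> text -> reply text -> outgoing speech."""
    heard = asr(audio)                    # speech recognition
    ctx.history.append(f"them: {heard}")
    reply = respond(heard, ctx)           # stands in for the RNN
    ctx.history.append(f"us: {reply}")
    return tts(reply)                     # speech synthesis


# Toy stand-ins so the sketch runs end to end (assumptions, not real models).
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_respond(text: str, ctx: CallContext) -> str:
    if "what time" in text.lower():
        return f"At {ctx.desired_time}, please."
    return f"Hi, I'd like to make a {ctx.task}."


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


if __name__ == "__main__":
    ctx = CallContext(task="restaurant reservation", desired_time="19:00")
    out = run_turn(b"What time would you like?", ctx,
                   fake_asr, fake_respond, fake_tts)
    print(out.decode("utf-8"))
    print(ctx.history)
```

Passing the three stages in as plain callables keeps the turn logic independent of whichever recognition, dialogue and synthesis models are actually plugged in.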

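For the voice, Google Duplex combines a concatenative TTS engine with a synthesis TTS engine based on Tacotron and WaveNet, which lets it control intonation depending on the circumstances. Speech recognition is handled separately by Google’s ASR (Automatic Speech Recognition) technology.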

Naturalness

To sound more natural, Duplex inserts ad hoc fillers such as “mmh”, “ah” and “oh!”, which reproduce typical human “disfluencies” and make it sound more familiar to people.

In addition, Google has also worked on the latency of the responses, which must match the expectations of the interlocutor. For example, humans tend to expect low latency in response to simple stimuli, such as greetings, or to phrases such as “I did not understand”. In some cases Duplex does not even wait for the output of the RNN but uses faster approximations, perhaps combined with more hesitant answers, to simulate difficulty in understanding.
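To make the latency idea concrete, here is a minimal sketch of how a reply could be routed between a fast approximation and the full model. It is an assumption-laden illustration rather than Duplex's actual logic: `SIMPLE_PROMPTS`, `choose_reply`, the confidence score returned by the fast path, and the `min_confidence` threshold are all hypothetical.

```python
from typing import Callable, Tuple

# Hypothetical set of "simple stimuli" that people expect a quick answer to.
SIMPLE_PROMPTS = {"hello", "hi", "good morning", "i did not understand"}


def choose_reply(
    utterance: str,
    fast_model: Callable[[str], Tuple[str, float]],
    full_model: Callable[[str], str],
    min_confidence: float = 0.8,
) -> Tuple[str, str]:
    """Return (reply, path), where path is "fast" or "full"."""
    normalized = utterance.strip().lower().rstrip("?!.")
    if normalized in SIMPLE_PROMPTS:
        # Simple stimulus: answer from the cheap approximation right away.
        reply, confidence = fast_model(utterance)
        if confidence < min_confidence and reply:
            # Soften a low-confidence guess with a hesitant opener.
            reply = "Um, " + reply[0].lower() + reply[1:]
        return reply, "fast"
    # Anything else waits for the slower, full model.
    return full_model(utterance), "full"


if __name__ == "__main__":
    def unsure_fast(utterance: str) -> Tuple[str, float]:
        return "Hello there.", 0.5

    def full(utterance: str) -> str:
        return "We'd like a table for four."

    print(choose_reply("Hello!", unsure_fast, full))
    print(choose_reply("How many people?", unsure_fast, full))
```

In a real system the fast path would also have to be measurably quicker than the full model; the sketch only shows the routing decision and the hesitant phrasing.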

Ethical and moral issues

While this technology and its results have undoubtedly aroused amazement, the fact that the voice is virtually indistinguishable from a human one also raises more than one concern.

On the one hand, the system is undoubtedly useful: it could make reservations automatically when calling yourself is impractical (e.g. when you are at work), or serve as an aid to people with disabilities such as deafness or dysphasia. On the other hand, especially considering the progress made by complementary technologies such as video synthesis, it is clear that the risk of creating deep fakes so realistic as to be totally indistinguishable from reality is becoming more than a mere possibility.

Many argue that it would be necessary to warn the interlocutor that they are talking to an artificial intelligence. However, such an approach not only seems unrealistic (it would have to be made mandatory by law, but which law, in which jurisdiction, and how would it be enforced anyway?), it could also undermine the effectiveness of the system, as people might behave differently once they know they are talking to a machine, no matter how realistic.

Notes

According to Google, using these faster approximations keeps response latency below 100 ms in such cases. Paradoxically, in other cases it was found that introducing more latency (e.g. when answering particularly complex questions) helped make the conversation sound more natural.

LINKS

Google Duplex: An AI system to achieve real-world tasks over the phone

Comment: Google Duplex isn’t the only thing announced at I/O that has societal implications

Google Assistant Routines begin initial rollout, replaces ‘My Day’

The future of the Google Assistant: Helping you get things done to give you time back

Is Google Duplex ethical and moral?

Deciding Whether To Fear or Celebrate Google’s Mind-Blowing AI Demo

Google Duplex beat the Turing test: Are we doomed?