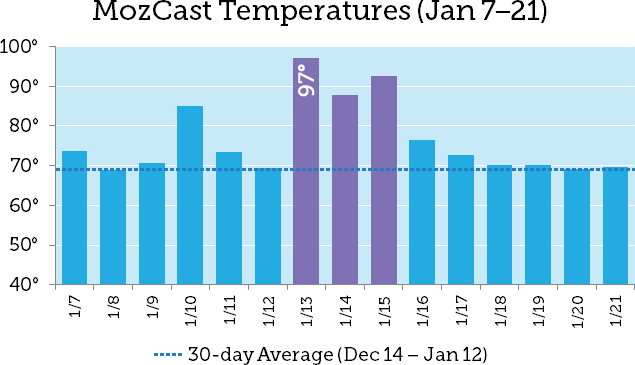

On January 13th, MozCast measured significant algorithm flux lasting about three days (the dotted line shows the 30-day average prior to the 13th, which is consistent with historical averages) …

That same day, Google announced the release of a core update dubbed the January 2020 Core Update (in line with their recent naming conventions) …

On January 16th, Google announced the update was “mostly done,” aligning fairly well with the measured temperatures in the graph above. Temperatures settled down after the three-day spike …

It appears that the dust has mostly settled on the January 2020 Core Update. Interpreting core updates can be challenging, but are there any takeaways we can gather from the data?

How does it compare to other updates?

How did the January 2020 Core Update stack up against recent core updates? The chart below shows the previous four named core updates, back to August 2018 (AKA “Medic”) …

While the January 2020 update wasn’t on par with “Medic,” it tracks closely with the previous three updates. Note that all of these updates are well above the MozCast average. Not all named updates are measurable, but all of the recent core updates have generated substantial ranking flux.

Which verticals were hit hardest?

MozCast is split into 20 verticals, matching Google AdWords categories. It can be tough to interpret single-day movement across categories, since they naturally vary, but here’s the data for the range of the update (January 14–16) for the seven categories that topped 100°F on January 14 …

Health tops the list, consistent with anecdotal evidence from previous core updates. One consistent finding, broadly speaking, is that sites impacted by one core update seem more likely to be impacted by subsequent core updates.

Who won and who lost this time?

Winners/losers analyses can be dangerous, for a few reasons. First, they depend on your particular data set. Second, humans have a knack for seeing patterns that aren’t there. It’s easy to take a couple of data points and over-generalize. Third, there are many ways to measure changes over time.

We can’t entirely fix the first problem; that’s the nature of data analysis. For the second problem, we have to trust you, the reader. We can partially address the third problem by looking at changes in both absolute and relative terms. For example, knowing that a site’s SERP share grew by 100% isn’t very interesting if it went from one ranking in our data set to two. So, for both of the following charts, we’ll restrict our analysis to subdomains that had at least 25 rankings across MozCast’s 10,000 SERPs on January 14th. We’ll also display the raw ranking counts for some added perspective.
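To make the filter-then-compare idea concrete, here is a minimal Python sketch. It is not Moz’s actual pipeline. It assumes you already have per-subdomain ranking counts for the first and last days of the update. The `SubdomainCounts` type, the `rank_changes` function, and the sample subdomains and counts are all hypothetical and exist only for illustration. The sketch drops subdomains below the 25-ranking threshold, computes the relative change, and keeps the raw counts alongside it.

```python
from dataclasses import dataclass


@dataclass
class SubdomainCounts:
    # Hypothetical record: ranking counts (SERP share) for one subdomain.
    subdomain: str
    before: int  # rankings on the first day of the window (e.g., Jan 14)
    after: int   # rankings on the last day of the window (e.g., Jan 16)


def pct_change(before: int, after: int) -> float:
    """Relative change in ranking count, as a percentage of the starting count."""
    return (after - before) * 100.0 / before


def rank_changes(rows, min_before=25, top_n=25, winners=True):
    """Return (subdomain, before, after, pct) tuples for the biggest movers.

    Subdomains with fewer than `min_before` rankings on the first day are
    excluded, so tiny sites (e.g., 1 -> 2 rankings, +100%) can't dominate.
    """
    eligible = [r for r in rows if r.before >= min_before]
    scored = [(r.subdomain, r.before, r.after, pct_change(r.before, r.after))
              for r in eligible]
    # Winners: largest gains first. Losers: largest drops first.
    scored.sort(key=lambda t: t[3], reverse=winners)
    return scored[:top_n]


# Made-up toy data, not MozCast results.
rows = [
    SubdomainCounts("site-a.example", 40, 60),
    SubdomainCounts("site-b.example", 1, 2),     # filtered out: below threshold
    SubdomainCounts("site-c.example", 30, 15),
    SubdomainCounts("site-d.example", 100, 110),
]

for name, before, after, pct in rank_changes(rows, top_n=2):
    print(f"{name}: {before} -> {after} ({pct:+.1f}%)")
```

Keeping the raw `before` and `after` counts in each result mirrors the article’s point: the percentage shows relative movement, and the counts show whether that movement is large enough to matter.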

Here are the top 25 winners by percentage change over the three days of the update. The “Jan 14” and “Jan 16” columns represent the total count of rankings (i.e., SERP share) on those days …

If you’ve read about previous core updates, you may see a couple of familiar subdomains, including VeryWellHealth.com and a couple of its cousins. Even at a glance, this list goes well beyond healthcare and represents a healthy mix of verticals and some major players, including Instagram and the Google Play store.

I hate to use the word “losers,” and there’s no way to tell why any given site gained or lost rankings during this time period (it may not be due to the core update), but I’ll present the data as impartially as possible. Here are the 25 sites that lost the most rankings by percentage change …

Orbitz took heavy losses in our data set, as did the phone number lookup site ZabaSearch. Interestingly, one of the Very Well family of sites (three of which were among our top 25 winners) landed in the bottom 25. There are a handful of healthcare sites in the mix, including the reputable Cleveland Clinic (although this appears to be primarily a patient portal).

What can we do about any of this?

Google describes core updates as “significant, broad changes to our search algorithms and systems … designed to ensure that overall, we’re delivering on our mission to present relevant and authoritative content to searchers.” They’re quick to say that a core update isn’t a penalty and that “there’s nothing wrong with pages that may perform less well.” Of course, that’s cold comfort if your site was negatively impacted.

We know that content quality matters, but that’s a vague concept that can be hard to pin down. If you’ve taken losses in a core update, it’s worth asking whether your content is well matched to the needs of your visitors: is it accurate, up to date, and written in a way that demonstrates expertise?

We also know that sites impacted by one core update seem to be more likely to see movement in subsequent core updates. So, if you’ve been hit in one of the core updates since “Medic,” keep your eyes open. This is a work in progress, and Google is making adjustments as they go.

Ultimately, the impact of core updates gives us clues about Google’s broader intent and how best to align with that intent. Look at sites that performed well and try to understand how they might be serving their core audiences. If you lost rankings, are they rankings that matter? Was your content really a match to the intent of those searchers?