If you have launched a new website, updated a single page on your existing domain, or altered many pages and/or the structure of your site, you will likely want Google to display your latest content in its SERPs.

Google’s crawlers are pretty good at their job. When a big domain publishes a new article, for example, the search engine will usually crawl and index it quickly, because natural traffic and links from around the web alert its algorithms to the new content.

For most sites, however, it is good practice to give Google a little assistance with its indexing job.

Each of the following official Google tools can help with this, and each is more or less suitable depending on whether you want Google to recrawl a single page or more of your site.

It is also important to note two things before we start:

- None of these tools can force Google to start indexing your site immediately. You do have to be patient.

- Quicker and more comprehensive indexing of your site will occur if your content is fresh, original, useful, easy to navigate, and linked to from elsewhere on the web. These tools can’t guarantee that Google will deem your site worth indexing, and they shouldn’t be used as a substitute for publishing content that adds value to the internet ecosystem.

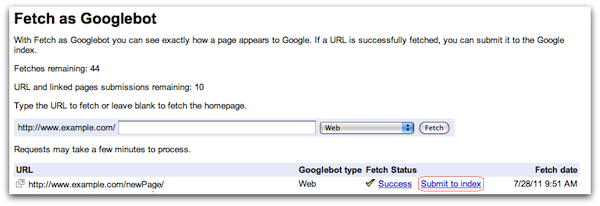

Google’s Fetch as Google tool is the most logical starting point for getting your great new site or content indexed.

First, you need a Google account in order to use Google Webmaster Tools. From there you will be prompted to ‘add a property’, which you will then have to verify. This is all very straightforward if you have not done it before.

Once the relevant property is listed in your Webmaster Tools account, you can ‘perform a fetch’ on any URL belonging to that property. If the page is fetchable (you can also check whether it is displaying correctly), you can then submit it to be added to Google’s index.

The tool also lets you submit either a single URL (‘Crawl only this URL’) or the selected URL plus any pages it links to directly (‘Crawl this URL and its direct links’). Both options come with their own submission limits: 10 for the former and 2 for the latter.
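Whether a page is fetchable depends on several things, but one common blocker is a robots.txt rule that disallows Googlebot. The Python sketch below is a rough, offline pre-check you might run before using Fetch as Google, assuming you already have your robots.txt content as a list of lines. The helper names `robots_url_for` and `is_allowed_for_googlebot` are hypothetical; the sketch relies only on the standard library's `urllib.robotparser` and does not render the page or reproduce what Fetch as Google actually reports.

```python
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser


def robots_url_for(page_url):
    """Return the robots.txt URL for the host serving page_url (it always sits at the site root)."""
    parts = urlparse(page_url)
    return f"{parts.scheme}://{parts.netloc}/robots.txt"


def is_allowed_for_googlebot(page_url, robots_lines, user_agent="Googlebot"):
    """Return True if the given robots.txt lines let user_agent crawl page_url.

    robots_lines is the robots.txt content split into lines. Passing it in,
    rather than downloading it, keeps this sketch offline and testable.
    Note: Python's parser applies the first matching rule and does not
    support mid-path wildcards, whereas Google uses the most specific
    (longest) matching rule, so treat the answer as a rough pre-check only.
    """
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return parser.can_fetch(user_agent, page_url)


if __name__ == "__main__":
    # Hypothetical robots.txt and URLs, for illustration only.
    robots = ["User-agent: *", "Disallow: /private/"]
    for url in ("https://www.example.com/blog/new-post",
                "https://www.example.com/private/draft"):
        print(url, is_allowed_for_googlebot(url, robots))
    print(robots_url_for("https://www.example.com/blog/new-post"))
```

To test a live site instead, you could construct `RobotFileParser(robots_url_for(url))` and call its `read()` method to download the file before calling `can_fetch`. Because Python's parser does not follow Google's longest-match rule, Fetch as Google remains the authoritative check.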

You might also have heard of Google’s Add URL tool.

Think of this as a simpler version of the Fetch tool above. It is slower and lacks the functionality and versatility of Fetch, but it still exists, so it seems worth adding your URL to it if you can.

You can also use Add URL with just a Google account, without adding and verifying a property in Webmaster Tools. Simply add your URL and click to assure the service you aren’t a robot!

If you have amended many pages on a domain or changed the structure of the site, adding a sitemap to Google is the best option.

As with Fetch as Google, you add a sitemap through Webmaster Tools (Google Search Console).

[See our post Sitemaps & SEO: An introductory guide if you are in the dark about what sitemaps are].

Once you have generated or built a sitemap, select the domain it belongs to in Webmaster Tools, go to ‘Crawl’ > ‘Sitemaps’ > ‘Add/Test Sitemap’, type in the sitemap’s URL (typically the domain URL followed by sitemap.xml) and click ‘Submit’.
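If you need to build a sitemap yourself rather than have a plugin or CMS generate it, the sketch below shows the basic shape of the sitemaps.org XML format: a `urlset` element containing one `url` entry per page, each with a `loc` and an optional `lastmod`. It is a minimal Python example assuming you already have a list of page URLs and their last-modified dates, and that the sitemap lives at the root of the domain. `build_sitemap` and `sitemap_url` are hypothetical helper names, and the example.com pages are placeholders.

```python
import xml.etree.ElementTree as ET
from datetime import date
from urllib.parse import urljoin

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build a sitemap XML string from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url_el = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as & in query strings for us.
        ET.SubElement(url_el, "loc").text = loc
        # A plain YYYY-MM-DD date is a valid <lastmod> value.
        ET.SubElement(url_el, "lastmod").text = lastmod.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


def sitemap_url(site_url):
    """Assumes the sitemap sits at the site root, e.g. https://host/sitemap.xml."""
    return urljoin(site_url, "/sitemap.xml")


if __name__ == "__main__":
    # Hypothetical pages and dates, for illustration only.
    pages = [
        ("https://www.example.com/", date(2024, 1, 15)),
        ("https://www.example.com/blog/new-post", date(2024, 2, 1)),
    ]
    print(build_sitemap(pages))
    print("Submit this URL:", sitemap_url("https://www.example.com/"))
```

Saved as sitemap.xml at the root of your domain, the output would then be reachable at the URL returned by `sitemap_url`, which is what you would type into the ‘Add/Test Sitemap’ box.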

As I pointed out in the introduction to this post…

Google is pretty good at crawling and indexing the web but giving the spiders as much assistance with their job as possible makes for quicker and cleaner SEO.

Simply having your property added to Webmaster Tools, running Google Analytics, and then using the above tools are the foundation for getting your site noticed by the search giant.

But good, useful content on a well-designed, usable site is what really makes you visible, to Google and, most importantly, to humans.