The development of technology like AI and natural language processing are a huge player in the way a search engine retrieves information. Computer algorithms interpret the words the user types and the number of web pages based on the frequency of linguistic connections in the billions of texts the system was trained on.

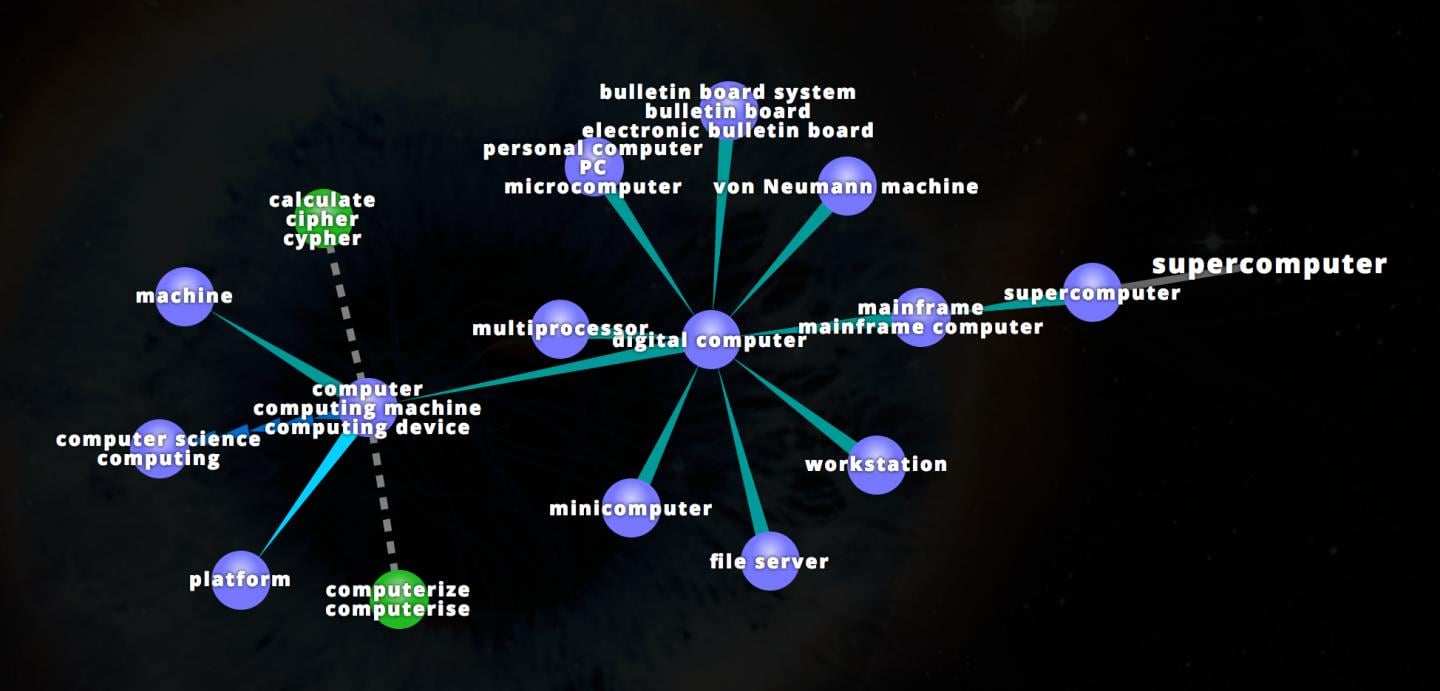

WordNet is a lexical database for the English language. It groups English words into sets of synonyms called synsets, provides short definitions and usage examples, and records a number of relations among these synonym sets or their members. Researchers from The University of Texas at Austin developed a method to incorporate information from WordNet into informational retrieval systems. (VisuWords)

WordNet is a lexical database for the English language. It groups English words into sets of synonyms called synsets, provides short definitions and usage examples, and records a number of relations among these synonym sets or their members. Researchers from The University of Texas at Austin developed a method to incorporate information from WordNet into informational retrieval systems. (VisuWords)

This is not the only source of information. Semantic relationships are strengthened by professional annotators who hand-tune the results, and the algorithms that generate them. Web searchers tell the algorithms which connections are the best through what they click on.

This model may seem successful but it does have flaws. Search engines are not as “smart” as they may seem. They lack a true understanding of language and human logic. Sometimes they replicate and deepen biases embedded in our searchers, rather than bringing up new information.

Matthew Lease, an associate professor in the School of Information at the University of Texas at Austin (UT Austin), believes there are better ways to harness the dual power of computers and human minds in order to create more intelligence information retrieval (IR) systems.

Lease and his team have combined AI with the insights of annotators and the information encoded in the resources to develop new approaches to IR that benefit search engines. This includes niche search engines, like the ones for medical knowledge or non-English texts.

In one of the papers written about this research, the team presents a method which combines the input from annotators in order to determine the best overall annotation for a text. The team applied this method to two problems: analyzing free-text research articles describing medical studies in order to extract details of each study and recognizing named-entities by analyzing breaking news to identify the events, people and places involved.

“An important challenge in natural language processing is accurately finding the important information contained in free-text, which lets us extract into databases and combine it with other data in order to make more intelligent decisions and new discoveries,” Lease said. “We’ve been using crowdsourcing to annotate medical and news articles at scale so that our intelligent systems will be able to more accurately find the key information contained in each article.”

Traditionally, the annotation has been performed by in-house domain experts. More recently, crowdsourcing has become the popular way to gather large labeled datasets. This method saves money. Annotations from lay people are usually lower quality than ones from a domain expert. This makes it necessary to estimate the reliability of crowd annotators and aggregate individual annotations in order to come up with a single set of “reference standard” consensus labels.

Lease’s team found that this method could train a neural network, meaning that it could accurately predict named entities and extract relevant information in unannotated texts. This method has improved upon existing methods.

This method can also provide an estimate of the individual worker’s label quality. It is also useful for error analysis and intelligently routing tasks and identifying the best person to annotate each text.

The second paper on this research says that neural models for natural language processing (NLP) often ignore resources that already exist, like Word Net, or domain-specific ontologies like the Unified Medical Language System that encodes knowledge about any given field.

The team proposed a method of exploitation of existing linguistic resources through using weight sharing to improve NLP models for automatic text classification. This model learns how to classify if a published medical article describes clinical trials that may be relevant to a clinical question.

The team applied a type of weight sharing to sentiment analysis of movie reviews and a biomedical search related to anemia. This approach showed yielded and improved performance on classification tasks when compared to other strategies that didn’t use weight sharing.

“This provides a general framework for codifying and exploiting domain knowledge in data-driven neural network models,” according to Byron Wallace, Lease’s collaborator from Northeastern University.

Lease, Wallace and their team used GPUs on the Maverick supercomputer at TACC in order to enable their analyses and train the machine learning system. Along with these GPUs, TACC uses cutting-edge processing architectures that have been developed by Intel and the machine learning libraries are starting to play catch up.

“With the introduction of Stampede2 and its many core infrastructure, we are glad to see more optimization of CPU-based machine learning frameworks,” said Niall Gaffney, Director of Data Intensive Computing at TACC.

Gaffeny believes that TACC’s work with Caffee – a deep learning framework developed by the University of California, Berkley—is finding that CPUs have the equivalent performance for many AI jobs at GPUs.

By improving core natural language processing technologies for automatic information extraction and classification of texts, search engines that are built on these technologies can continue to improve.

Two papers on this research were published and presented at the Annual Meeting of the Association for Computational Linguistics in Vancouver, Canada. You can read the first paper here, and the second paper here.