Bing revealed today that it has been using BERT in search results since before Google did, and at a larger scale.

Google currently applies BERT to 10% of search results in the US, as well as to featured snippets in two dozen countries. Bing, on the other hand, now uses BERT worldwide.

Bing has been using BERT since April, roughly half a year ahead of Google. In a blog post, Bing details the challenges it ran into when rolling out BERT to global search results.

Bing admits that applying a deep learning model like BERT to web search on a worldwide scale can be prohibitively expensive. It was eventually made possible by Microsoft’s Azure cloud computing service.

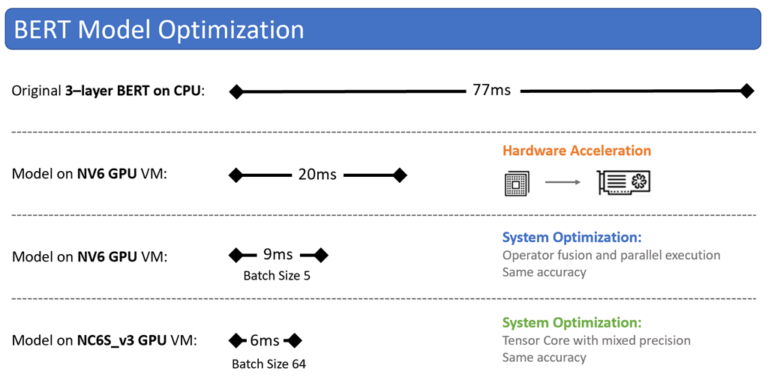

What was initially estimated to require tens of thousands of servers was accomplished with 2000+ Azure GPU Virtual Machines. Bing went from serving a three-layer BERT model on 20 CPU cores at 77 milliseconds per inference to serving 64 inferences in 6 milliseconds with the model running on a GPU-equipped Azure Virtual Machine.

Moving BERT from CPUs to GPUs led to an 800x improvement in throughput:

“With these GPU optimizations, we were able to use 2000+ Azure GPU Virtual Machines across four regions to serve over 1 million BERT inferences per second worldwide. Azure N-series GPU VMs are critical in enabling transformative AI workloads and product quality improvements for Bing with high availability, agility, and significant cost savings, especially as deep learning models continue to grow in complexity.”
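The 800x figure and the fleet-wide numbers can be sanity-checked with simple arithmetic. The sketch below assumes that the 20-core CPU setup handled one inference at a time (so its throughput is one inference per 77 ms), and that the load was spread evenly over exactly 2,000 VMs; neither assumption is stated by Bing, and the post only says "2000+" VMs and "over 1 million" inferences per second, so treat the per-VM figure as a rough average.

```python
# Back-of-the-envelope check of the throughput figures quoted by Bing.
# Assumption: the 20-core CPU setup completed one inference every 77 ms
# with no parallel requests, so its throughput is 1 / 0.077 inferences/s.
CPU_LATENCY_S = 0.077   # 77 ms per inference on 20 CPU cores
GPU_BATCH = 64          # inferences served together on one GPU VM
GPU_LATENCY_S = 0.006   # 6 ms for the whole batch of 64

cpu_throughput = 1 / CPU_LATENCY_S              # ~13 inferences/s
gpu_throughput = GPU_BATCH / GPU_LATENCY_S      # ~10,667 inferences/s
speedup = gpu_throughput / cpu_throughput       # ~821x, i.e. "about 800x"

# Fleet-wide average. Assumption: exactly 2000 VMs sharing 1 million
# inferences/s evenly; the post says "2000+" and "over 1 million",
# so this is only an approximate per-VM load.
FLEET_INFERENCES_PER_S = 1_000_000
VM_COUNT = 2000
avg_per_vm = FLEET_INFERENCES_PER_S / VM_COUNT  # 500 inferences/s per VM

print(f"CPU throughput: {cpu_throughput:.1f}/s")
print(f"GPU throughput: {gpu_throughput:,.0f}/s")
print(f"Speedup: {speedup:.0f}x")
print(f"Average load per VM: {avg_per_vm:.0f}/s")
```

Under these assumptions the ratio comes out to about 821x, consistent with the "800x" Bing reports. The average per-VM load is well below the single-VM batch throughput, which would leave headroom for latency targets and traffic peaks, though Bing does not say how its capacity is actually provisioned.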

These improvements to Bing search are available globally as of today. For more information on BERT, see: