Google has said that its most recent major search update, the inclusion of the BERT algorithm, will help it better understand the intent behind users’ search queries, which should mean more relevant results. BERT will impact 10% of searches, the company said, meaning it’s likely to have some impact on your brand’s organic visibility and traffic — you just might not notice.

This is our high-level look at what we know so far about what Google is touting as “one of the biggest leaps forward in the history of Search.” When you’re ready to go deeper, check out our companion piece: A deep dive into BERT: How BERT launched a rocket into natural language understanding, by Dawn Anderson.

When did BERT roll out in Google Search?

BERT began rolling out in Google’s search system the week of October 21, 2019 for English-language queries, including featured snippets.

The algorithm will expand to all languages in which Google offers Search, but there is no set timeline yet, said Google’s Danny Sullivan. A BERT model is also being used to improve featured snippets in two dozen countries.

What is BERT?

BERT, which stands for Bidirectional Encoder Representations from Transformers, is a neural network-based technique for natural language processing pre-training. In plain English, it can be used to help Google better discern the context of words in search queries.

For example, in the phrases “nine to five” and “a quarter to five,” the word “to” has two different meanings, which may be obvious to humans but less so to search engines. BERT is designed to distinguish between such nuances to facilitate more relevant results.

Google open-sourced BERT in November 2018. This means that anyone can use BERT to train their own language processing system for question answering or other tasks.

What is a neural network?

Put very simply, neural networks are algorithms designed for pattern recognition. Categorizing image content, recognizing handwriting and even predicting trends in financial markets are common real-world applications for neural networks, as are search applications such as click models.

They train on data sets to recognize patterns. BERT was pre-trained on the plain text corpus of Wikipedia, Google explained when it open-sourced the model.

What is natural language processing?

Natural language processing (NLP) refers to a branch of artificial intelligence that deals with linguistics, with the aim of enabling computers to understand the way humans naturally communicate.

Examples of advancements made possible by NLP include social listening tools, chatbots, and word suggestions on your smartphone.

In and of itself, NLP is not a new feature for search engines. BERT, however, represents an advancement in NLP through bidirectional training (more on that below).

How does BERT work?

The breakthrough of BERT is in its ability to train language models based on the entire set of words in a sentence or query (bidirectional training) rather than the traditional way of training on the ordered sequence of words (left-to-right or combined left-to-right and right-to-left). BERT allows the language model to learn word context based on surrounding words rather than just the word that immediately precedes or follows it.

Google calls BERT “deeply bidirectional” because the contextual representations of words start “from the very bottom of a deep neural network.”

“For example, the word ‘bank’ would have the same context-free representation in ‘bank account’ and ‘bank of the river.’ Contextual models instead generate a representation of each word that is based on the other words in the sentence. For example, in the sentence ‘I accessed the bank account,’ a unidirectional contextual model would represent ‘bank’ based on ‘I accessed the’ but not ‘account.’ However, BERT represents ‘bank’ using both its previous and next context — ‘I accessed the … account.’”

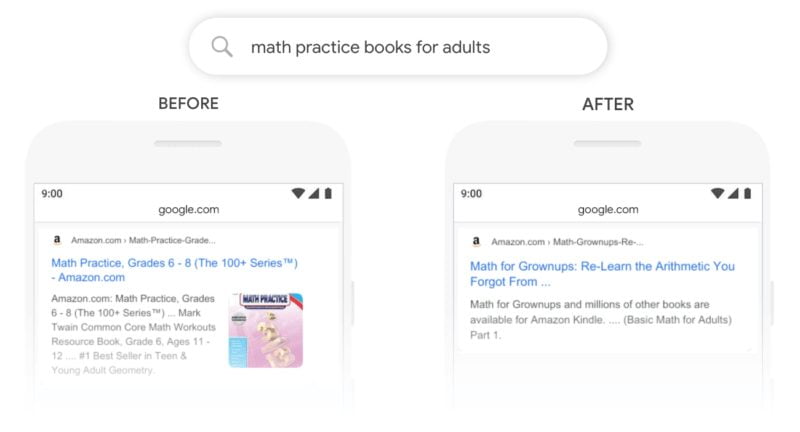
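To make the difference concrete, here is a minimal Python sketch of which words each kind of model can "see" when it represents a target word. It is only an illustration of the idea in Google's quote, not BERT itself: the function name `context_views` and its labels are hypothetical, and a real model works on learned vector representations rather than word lists.

```python
def context_views(sentence, target):
    """Return the words each kind of model could use to represent `target`.

    Toy illustration only: the labels are hypothetical and real models
    operate on learned vectors, not raw word lists.
    """
    words = sentence.split()
    i = words.index(target)  # position of the first occurrence of the target word
    return {
        # A context-free model uses no surrounding words at all.
        "context_free": [],
        # A unidirectional (left-to-right) model sees only the preceding words.
        "left_to_right": words[:i],
        # A bidirectional model sees the words on both sides of the target.
        "bidirectional": words[:i] + words[i + 1:],
    }


views = context_views("I accessed the bank account", "bank")
for name, visible in views.items():
    print(f"{name}: {visible}")
```

In this sketch, only the bidirectional view includes "account," which is the word that tells a reader the sentence is about finance rather than a riverbank.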

Google has shown several examples of how BERT’s application in Search may impact results. In one example, the query “math practice books for adults” formerly surfaced a listing for a book for Grades 6 – 8 at the top of the organic results. With BERT applied, Google surfaces a listing for a book titled “Math for Grownups” at the top of the results.

You can see in a current result for this query that the book for Grades 6 – 8 is still ranking, but there are two books specifically aimed at adults now ranking above it, including in the featured snippet.

A search result change like the one above reflects the new understanding of the query using BERT. The Young Adult content isn’t being penalized; rather, the adult-specific listings are deemed better aligned with the searcher’s intent.

Does Google use BERT to make sense of all searches?

No, not exactly. BERT will enhance Google’s understanding of about one in 10 searches in English in the U.S.

“Particularly for longer, more conversational queries, or searches where prepositions like ‘for’ and ‘to’ matter a lot to the meaning, Search will be able to understand the context of the words in your query,” Google wrote in its blog post.

However, not all queries are conversational or include prepositions. Branded searches and shorter phrases are just two examples of types of queries that may not require BERT’s natural language processing.

How will BERT impact my featured snippets?

As we saw in the example above, BERT may affect the results that appear in featured snippets when it’s applied.

In another example below, Google compares the featured snippets for the query “parking on a hill with no curb,” explaining, “In the past, a query like this would confuse our systems — we placed too much importance on the word ‘curb’ and ignored the word ‘no’, not understanding how critical that word was to appropriately responding to this query. So we’d return results for parking on a hill with a curb.”
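The failure Google describes can be mimicked with a deliberately naive keyword matcher. The sketch below is not how Google Search works or ever worked; the stop-word list, the `keywords` and `overlap` helpers and the two sample page texts are all assumptions made up for illustration. It only shows why a system that discards small words such as "no" cannot tell the two situations apart.

```python
# Hypothetical stop-word list: small words that a naive matcher throws away.
STOP_WORDS = {"a", "on", "with", "no", "the"}


def keywords(text):
    """Lowercase the text, split on whitespace and drop the stop words."""
    return {word for word in text.lower().split() if word not in STOP_WORDS}


def overlap(query, page):
    """Fraction of the query's keywords that also appear in the page text."""
    query_words = keywords(query)
    return len(query_words & keywords(page)) / len(query_words)


query = "parking on a hill with no curb"
page_with_curb = "parking on a hill with a curb"   # made-up page text
page_without_curb = "parking on a hill with no curb"  # made-up page text

score_with_curb = overlap(query, page_with_curb)
score_without_curb = overlap(query, page_without_curb)
print(score_with_curb, score_without_curb)
```

Both pages receive the same score because "no" never reaches the comparison, so the page about parking with a curb looks like just as good a match as the one the searcher actually wants.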

What’s the difference between BERT and RankBrain?

Some of BERT’s capabilities might sound similar to Google’s first artificial intelligence method for understanding queries, RankBrain. But they are two separate algorithms that may be used to inform search results.

“The first thing to understand about RankBrain is that it runs in parallel with the normal organic search ranking algorithms, and it is used to make adjustments to the results calculated by those algorithms,” said Eric Enge, general manager at Perficient Digital.

RankBrain adjusts results by looking at the current query and finding similar past queries. Then, it reviews the performance of the search results for those historic queries. “Based on what it sees, RankBrain may adjust the output of the results of the normal organic search ranking algorithms,” said Enge.

RankBrain also helps Google interpret search queries so that it can surface results that may not contain the exact words in the query. In one example, Google was able to figure out that the user was seeking information about the Eiffel Tower, despite the name of the tower not appearing in the query “height of the landmark in paris.”

“BERT operates in a completely different manner,” said Enge. “Traditional algorithms do try to look at the content on a page to understand what it’s about and what it may be relevant to. However, traditional NLP algorithms typically are only able to look at the content before a word OR the content after a word for additional context to help it better understand the meaning of that word. The bidirectional component of BERT is what makes it different.” As mentioned above, BERT looks at the content before and after a word to inform its understanding of the meaning and relevance of that word. “This is a critical enhancement in natural language processing as human communication is naturally layered and complex.”

Both BERT and RankBrain are used by Google to process queries and web page content to gain a better understanding of what the words mean.

BERT isn’t here to replace RankBrain. Google may use multiple methods to understand a query, meaning that BERT could be applied on its own, alongside other Google algorithms, in tandem with RankBrain, any combination thereof or not at all, depending on the search term.

What other Google products might BERT affect?

Google’s announcement for BERT pertains to Search only; however, there will be some impact on the Assistant as well. When queries conducted on Google Assistant trigger it to provide featured snippets or web results from Search, those results may be influenced by BERT.

Google has told Search Engine Land that BERT isn’t currently being used for ads, but if it does get integrated in the future, it may help alleviate some of the poor close variant matching that plagues advertisers.

“How can I optimize for BERT?” That’s not really the way to think about it

“There’s nothing to optimize for with BERT, nor anything for anyone to be rethinking,” said Sullivan. “The fundamentals of us seeking to reward great content remain unchanged.”

Google’s advice on ranking well has consistently been to keep the user in mind and create content that satisfies their search intent. Since BERT is designed to interpret that intent, it makes sense that giving the user what they want continues to be Google’s go-to advice.

“Optimizing” now means that you can focus more on good, clear writing, instead of compromising between writing for your audience and constructing linear phrasing for machines.

Want to learn more about BERT?

Here is our additional coverage and other resources on BERT.