The SEO industry often refers to “Google algorithmic penalties” as a catch-all phrase for websites that fail to live up to expectations in Google Search. However, the term used is fundamentally wrong because there are no Google algorithmic penalties. There are of course Google penalties, which Google officially and euphemistically calls Manual Spam Actions. And there are Google algorithms and algorithm updates. They both can and do determine how websites rank. However, it is essential to understand that they are completely different things before they can be influenced in a meaningful way.

Algorithms are (re-)calculations

Google relies to a large extent on its algorithms. As far as SEO is concerned, the existence of very few of these algorithms has been officially confirmed by Google. Google Panda, which is focused on on-page content quality, and Google Penguin, which has been designed with off-page signals in mind, are likely the two most commonly cited and feared algorithms. While there are a few more named algorithms or updates to existing algorithms, it is important to remember that these are only a few named instances compared to the countless algorithms in use at any time and the hundreds of updates released throughout the year. In fact, on average there are multiple releases, major or minor, every single day. The SEO industry, let alone the general public, rarely takes notice of these changes. The release process is understandably a closely guarded Google secret. New algorithms are rarely perfect from the start, but they evolve. However, SEOs and marketers must know that algorithms, by their very definition, do not allow for exceptions. Google has always denied the existence of whitelists or blacklists of any sort, and for a good reason: they simply do not exist.

Penguin and Panda are just the two most infamous among countless Google algorithms.

Websites are affected by algorithms when their on- and off-page signals reach certain thresholds, which are neither static values nor public information. That is why, short of correlating certain events, such as officially confirmed updates, with sudden website ranking drops, it is not possible to confirm with 100% confidence that any particular site was or was not affected by a specific algorithm or set of algorithms. Unlike with manual penalties, Google does not disclose when a website is affected by algorithms, or how. That is not to say that the impact of an algorithm cannot be influenced in a desirable way. It absolutely can! Recent, accurate and substantial crawl data, both on- and off-page, is needed to understand and evaluate which signals Google picks up. That objective can only be attained by conducting a site audit. It is best to apply a variety of tools and data sources, including the information Google does share via Google Search Console. In the best-case scenario, server logs covering an extended and recent period of time are also used to verify findings. The latter step is also important for addressing a crucial question: How long will it take for Google to pick up on the new, improved signals before a site’s rankings improve again? That central question can only be answered individually for any given website, and the answer depends mostly on how frequently and thoroughly a website is being crawled and indexed. Small sites and sites that actively manage their crawl budget tend to benefit more swiftly. Large, cumbersome websites that waste a lot of crawl budget and send poor crawl prioritization signals can take months or even years before they are recrawled.
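To make the server log step more concrete, here is a minimal Python sketch of how crawl frequency might be estimated from access logs. It assumes logs in the common "combined" format and identifies Googlebot by user agent string only; the function name `googlebot_crawl_stats` is made up for this illustration. Because user agents can be spoofed, a real audit would also verify the requesting IP addresses, for example via reverse DNS lookup.

```python
import re
from datetime import datetime

# Assumed input: "combined" access log format, for example
# 66.249.66.1 - - [10/Mar/2019:06:25:14 +0000] "GET /page HTTP/1.1" 200 512 "-" "...Googlebot/2.1..."
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[(?P<ts>[^\]]+)\] "(?:GET|HEAD) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_crawl_stats(lines):
    """Return {path: (hit_count, last_crawl)} for requests whose user agent
    claims to be Googlebot. The user agent is NOT verified here."""
    stats = {}
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue  # skip unparseable lines and other clients
        ts = datetime.strptime(match.group("ts"), "%d/%b/%Y:%H:%M:%S %z")
        path = match.group("path")
        count, last = stats.get(path, (0, ts))
        stats[path] = (count + 1, max(last, ts))
    return stats
```

Feeding several weeks of log lines into such a function shows which URLs are crawled often, which are rarely visited and when each was last fetched. That gives a rough, site-specific indication of how quickly improved signals are likely to be picked up.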

A sudden drop in search visibility can be caused by an algorithm update, by a manual penalty or by both.
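The correlation of ranking drops with confirmed updates mentioned above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the visibility values, the 30% drop threshold, the seven-day window and both function names are hypothetical, and a match is a hint worth investigating, not proof that a given algorithm affected the site.

```python
from datetime import date, timedelta


def sudden_drops(visibility, threshold=0.3):
    """Return dates where visibility fell by more than `threshold`
    (as a fraction) compared with the previous data point."""
    days = sorted(visibility)
    drops = []
    for prev, cur in zip(days, days[1:]):
        before, after = visibility[prev], visibility[cur]
        if before > 0 and (before - after) / before > threshold:
            drops.append(cur)
    return drops


def drops_near_updates(drops, update_dates, window_days=7):
    """Map each drop date to the confirmed update dates that fall
    within `window_days` before or after it."""
    window = timedelta(days=window_days)
    return {d: [u for u in update_dates if abs(d - u) <= window] for d in drops}


# Hypothetical weekly visibility index and hypothetical update dates.
visibility = {
    date(2019, 3, 1): 100.0,
    date(2019, 3, 8): 98.0,
    date(2019, 3, 15): 55.0,
    date(2019, 3, 22): 54.0,
}
confirmed_updates = [date(2019, 3, 12), date(2019, 1, 1)]

print(drops_near_updates(sudden_drops(visibility), confirmed_updates))
```

A drop with no nearby confirmed update is just as informative: it suggests looking at other causes first, including a manual penalty or a technical problem on the site itself.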

When algorithms fail

Ideally, Google algorithms would detect and filter 100% of the Google Webmaster Guidelines violations. But they don’t. Search is a complex matter and, thus far, no one has been able to come up with an algorithm that can keep up with human ingenuity. Despite tremendous efforts to combat spam algorithmically, sites still manage to cut corners and get around the rules, as Google sees them, to outrank their competitors. That is the main reason for Google penalties, aka Manual Spam Actions. With these manual interventions and the occasional report on its webspam operation, Google has been keeping spam sites in check for many years.

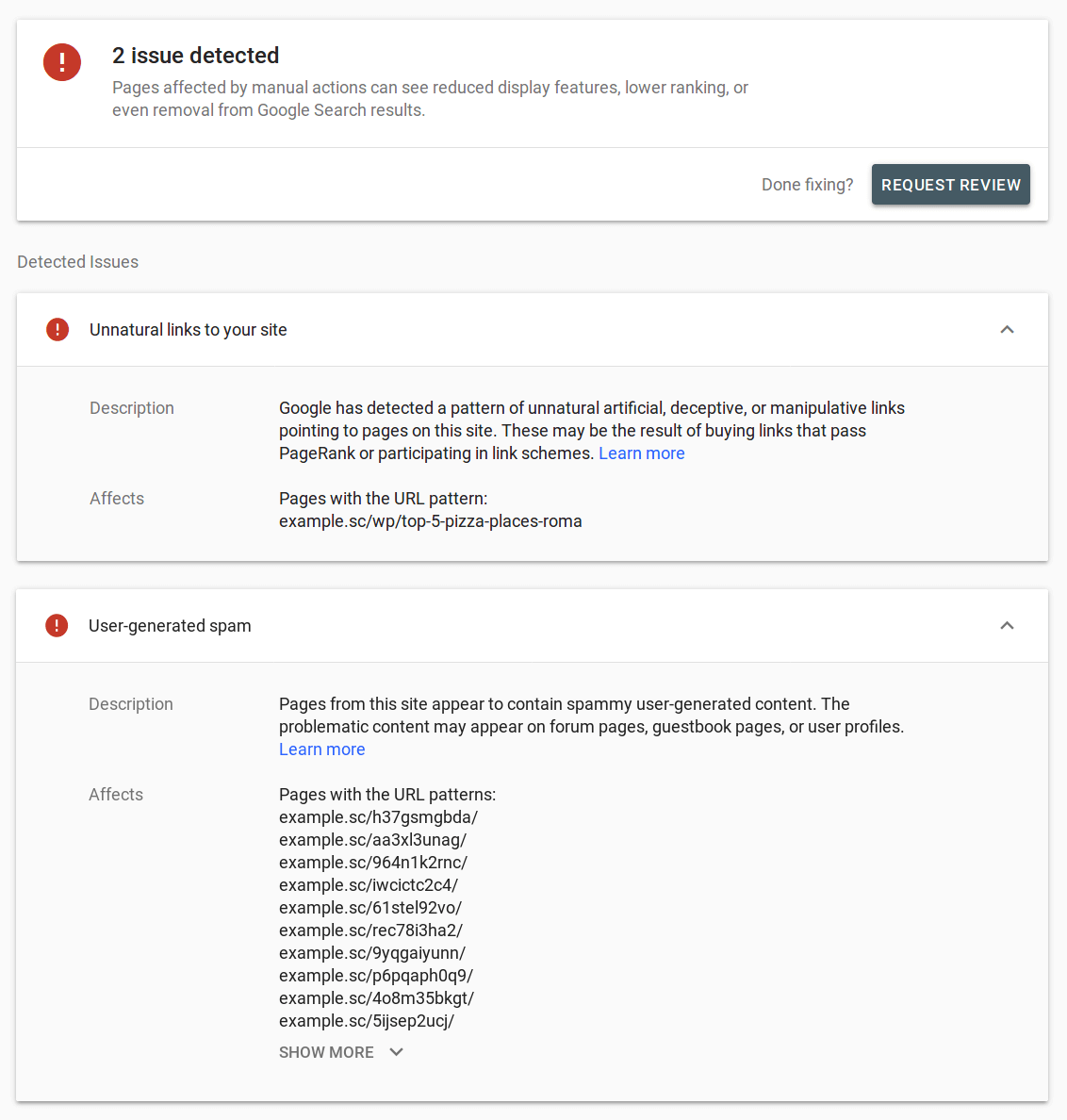

There are several reasons why sites get penalized by Google. The Ultimate Google Penalty Guide explains the issue in detail. When comparing manual penalties against algorithms, they may appear to have a similar impact. There are, however, several important differences from an SEO perspective. For starters, manual penalties trigger a message in Google Search Console (GSC), highlighting the issue detected. Not only does Google provide transparent information regarding the type of violation identified, it also frequently shares hints on how to fix the problem. In other words, there’s certainty when it comes to manual penalties. If a website is penalized, the site owner can find out its current status easily.

Unlike algorithms, Google penalties can be confirmed with 100% accuracy.

Another important distinction is that manual penalties usually time out eventually. Google has never disclosed how long it takes for manual penalties to expire, other than sporadically and cryptically stating that it takes a very, very long time. Sitting out manual penalties is not a viable proposition, especially given the negative impact they have on a site’s Google Search visibility and the SERP real estate lost.

Another difference is that, unlike with algorithms, a site hit by a Google penalty does not need to wait to be recrawled before rankings can improve again. Instead, the site owner can ask Google specifically to lift the penalty through a dedicated process, the Reconsideration Request. Just as it does not disclose the specific duration of a penalty, Google does not provide any hints regarding the anticipated processing time of reconsideration requests. This is a manual, labor-intensive process that involves Google Search employees evaluating the information submitted. Experience shows that it can take anything from several hours to several weeks, and longer is a distinct possibility.

Similar to investigating signals that may trigger algorithms, the initial work when resolving manual penalties consists of crawling the website and its backlinks and investigating these signals.

Algorithms and penalties

It is important to know that both algorithms and penalties can simultaneously affect a website. Their trigger signals can even overlap. For a site’s health, visibility in search and ultimately commercial success, algorithms and penalties are relevant factors to be managed. This is why periodic technical checks and Google Webmaster Guidelines compliance reviews are a must. Google does occasionally update its Webmaster Guidelines, often without much fanfare, to better reflect the changing realities of today’s web. That’s why periodic audits should be part of a company’s due diligence.

Neither algorithms nor penalties are to be dreaded. Experiencing an abrupt, unanticipated drop in search can be an opportunity to clean house. Once the shock dissipates, there is an opportunity to grow SERP real estate, Google Search visibility and CTR way beyond what was previously considered a respectable result.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.