Sometimes I forget that Google Lens is there. But when I remember and use it, it works surprisingly well — depending on the object or image being captured. In my experience, it gets things right about 65 to 70 percent of the time.

Previously available only on the Pixel and Pixel XL, Google Lens is now (indirectly) available on all Android phones through Google Photos. For now, Lens lives inside the Photos app. It is reportedly coming to iOS but is not yet available there.

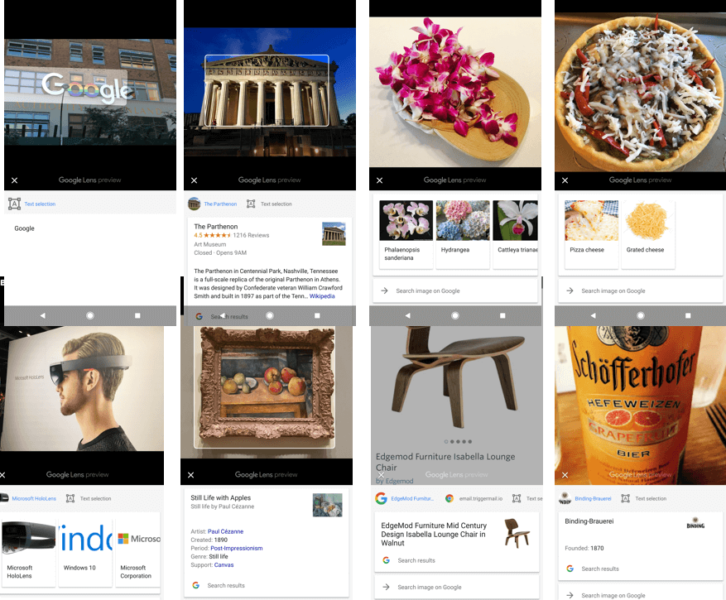

It’s a somewhat awkward user experience: Take a picture of an object or product, then “Lens” the photograph rather than the object itself. If Lens recognizes the image, it provides an identification or annotation and the option to initiate a search.

This indirect rollout could be part of a broader effort to train the system at scale. I tried it on a diverse group of photographs I had taken over the past year. It delivered a correct or mostly correct answer in almost every case.

Google Lens: Visual search on images in Google Photos

Lens often performs best when there’s text associated with the image. For example, it does a great job with movie posters, product packaging and business cards, which it can use to create new contacts.

Perhaps most impressively, among the images I tested (above), it accurately identified Cézanne as the artist behind a painting, though not the painting itself. It also correctly identified the Parthenon replica in Nashville, Tennessee.

Yet there’s something quite awkward about being required to take a picture before Lensing it. Most people probably won’t do this. A few may use it on older images lacking information or context. Some clever individuals may photograph products in the real world, search those images later with Lens and potentially buy the products online.

But these critiques and observations will be moot if this rollout is simply a prelude to a more natural, Pixel-like implementation of Lens on Android phones (and possibly iOS).