SEO has existed in some form or another for almost two decades, and I’ve had a front row seat to it all. You could say Google and I have had a pretty intimate relationship for the majority of my career; we’ve been through a lot together.

However, much has changed in the past 15 years: keyword utility has been heavily modified, link building purely for backlinks became less important (disclosure: I made and lost a ton of cash in the linking business), social links as signals increased, quality content and semantics became increasingly substantial, and most recently, mobile-friendliness became a ranking factor. And that’s just scratching the surface.

There is perhaps nothing more important than an SEO audit when it comes to the health of a website. An in-depth audit provides the roadmap necessary to identify and pinpoint any existing weaknesses within your site. But with the breadth of data and information available, many SEOs find themselves lost in the chaos. Plus, there is a ton of bad information out there.

In these situations, the best advice I can give is to take a deep breath, prepare, organize, and analyze. And most importantly, always stick to the basics.

Pre-Audit Checklist: Account Access

Talk to the Client

When you are doing a site audit, it’s important that you have a certain level of understanding about the business. Communicate with your client so you can get the insight necessary to understand how your recommended SEO strategy will affect their bottom line. What drives conversions? Where do their quality leads come from and what do those consist of? Is their conversion data accurate? What are their goals for the next one, three, and five years? Are there particular keywords they think they should be ranking for (or not ranking for)? Why do they think they should rank for those keywords? These are all questions you should ask the client because they are the expert in their own business.

Define Goals

You’d be surprised by how many people seem to lose sight of goals for their web properties. This is one of the most important steps in any process. Without goals, what do you have to measure the data against? Have an in-depth conversation with your client, make sure to get their goals down on paper, and start to establish a timeline that will reflect those goals.

Analytics

Make sure you have admin rights to access the site’s Google Analytics and any other third-party analytics platforms. If you are really lucky, the client may even have a few years of data you’ll be able to utilize during your audit.

Webmaster Tools

This is perhaps the most valuable free tool: you must get admin access to Google Webmaster Tools! GWT provides specific sets of keyword and query data that Google Analytics does not have. If the client does not have GWT set up yet, you can easily set it up for them by verifying the site and then creating and submitting a sitemap.

Google AdWords

If the client has invested in Google AdWords at all in the past, it may be a good idea for you to take a look at that data. Understanding the conversion data, bounce rate, keywords, and cost metrics can give you valuable insight into the direction you need to take their SEO strategy. Plus, it’ll give you a bit of an “inside look” into their business: who managed it, what they spent, and how it was set up.

Are They Mobile?

It should not be any surprise that more and more traffic is coming from mobile. Google made a historic announcement in late February 2015 stating that in mid-April they would favor sites that are mobile friendly. If that is not enough reason to be mobile-friendly then consider another field of employment. Mobile is not going anywhere but up.

Components of an SEO Site Audit

Have you completed all of the above? Congratulations, now the real work begins! Below, I’ve highlighted the core components of my SEO audit, the data you’ll need to obtain, and what you’ll need to analyze to form your strategy. It is up to you to take this data to the next level and form recommendations for your client. Not all data is created equal and no one can give you advice when it comes to the correct strategy.

Take it step by step. You’ve done the preparation; now you need to organize and analyze the data.

Site Crawl

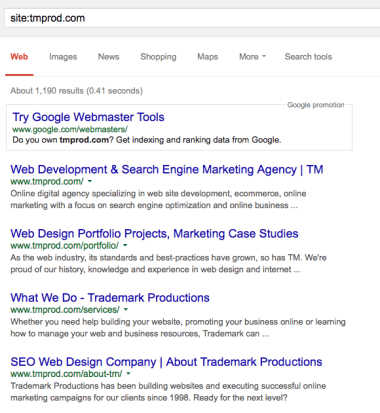

Start with a site index in Google to compare what is being indexed with your output from a crawl. Go to Google and in the search box type “Site:http://www.MyDomain.com” to review what Google has indexed (and remember, the count is an estimate). I’ve used our domain, TMprod.com, as an example. You can see that TMprod.com has around 1,150 pages in Google’s index.

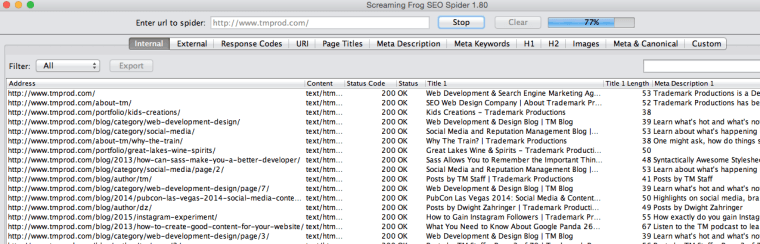

Now for a real deep crawl, get all the pieces to the puzzle. Use Screaming Frog or Scrutiny5 and enter the URL you want to crawl. Once it is 100% complete, export the results into a CSV and/or spreadsheet. Here you will be able to analyze the current state of your client’s website.

Organize the data further in the exported spreadsheet. You’ll gain insight on page errors, on-site factors, duplicate meta data, site files, links and more. This should act as the blueprint to your SEO audit.
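As a rough illustration of that first pass over the export, here is a minimal Python sketch that counts status codes and finds pages sharing a title. The column names (`Address`, `Status Code`, `Title 1`) and the sample rows are assumptions for illustration; match them to the headers in your own export.

```python
import csv
import io
from collections import Counter, defaultdict

def summarize_crawl(csv_file):
    """Count status codes and collect pages that share the same title.

    The column names used here are assumptions; adjust them to your export.
    """
    status_counts = Counter()
    pages_by_title = defaultdict(list)
    for row in csv.DictReader(csv_file):
        status_counts[row["Status Code"]] += 1
        title = row["Title 1"].strip()
        if title:
            pages_by_title[title].append(row["Address"])
    # Keep only titles that appear on more than one URL.
    duplicates = {t: urls for t, urls in pages_by_title.items() if len(urls) > 1}
    return status_counts, duplicates

# Hypothetical sample data standing in for a real export file.
sample = io.StringIO(
    "Address,Status Code,Title 1\n"
    "http://www.example.com/,200,Home\n"
    "http://www.example.com/index.html,200,Home\n"
    "http://www.example.com/old-page,404,\n"
)
status_counts, duplicate_titles = summarize_crawl(sample)
print(status_counts)
print(duplicate_titles)
```

With a real export, you would pass an opened file (for example `open("crawl.csv", newline="")`) instead of the in-memory sample.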

Site Speed

As you may know, site speed is a huge factor, not just for SERPs but also for visitor usability. Google’s PageSpeed Insights tool allows you to check the speed of the site for both mobile and desktop. It also gives helpful suggestions on how to improve performance.

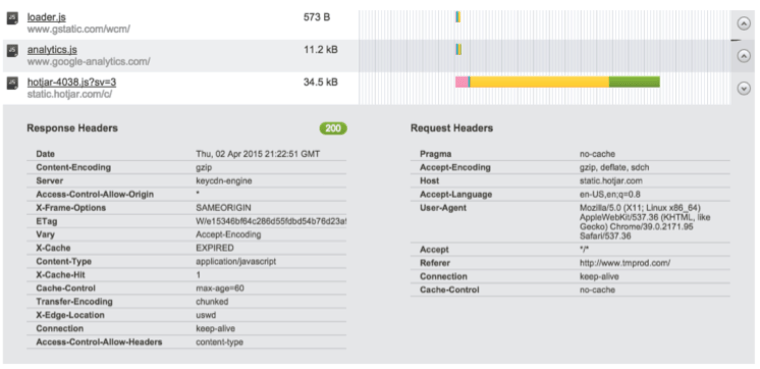

Pingdom is another great tool to check the speed of the site and what the hold-ups are. The data it provides helps you determine whether a library file, font, image, or JavaScript file is heavy and causing the sluggish site speed. Oftentimes, third-party JavaScript files can slow down a site. Make sure to vet these files and determine whether they are necessary. You can also see below that a JavaScript file from a third-party analytics tool is a little slow in responding for our site.

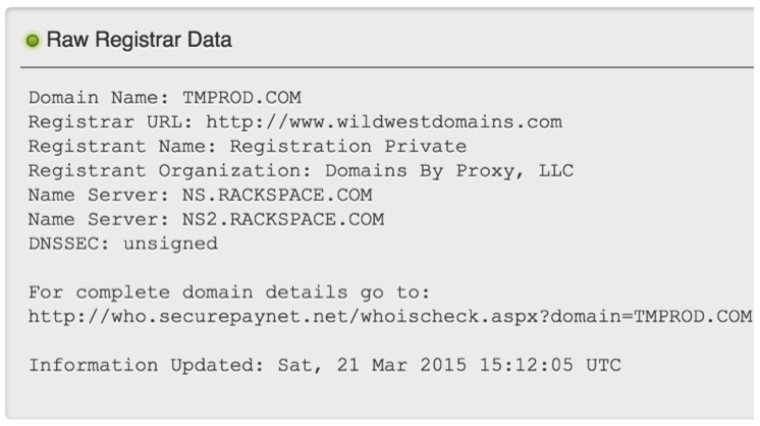

Domain

What is the domain? Do a history check in the Archive.org Wayback Machine to get an idea of what has been done with the domain in question in the past. For registration details, I like DomainTools.com or a simple run at Who.is. Are there subdomains? Be sure to check the www vs. non-www in Google Webmaster Tools > configuration > settings.

Domain authority is important (some would argue more than others). Is that domain asset being taken advantage of to the fullest extent? Are there other domains associated?

Next you should analyze domain authority and offsite equity. Use tools like Moz, Raven Tools or Majestic SEO to provide more information. Take a look at the client’s backlinks and see where the links are coming from and why. This will help to draw conclusions about the state of the site, its authority and how to improve their strategy.

Site Info

It’s a good idea to know what you are dealing with regarding a site’s build. BuiltWith.com is a great tool to learn pretty much everything about the site’s operations and associations. Software, server type, libraries, who hosts it, CMS, frameworks, ad networks, encodings, CSS: all great information to know. It also tells you more about how the operation is run, how clean the build may or may not be, and potential problems.

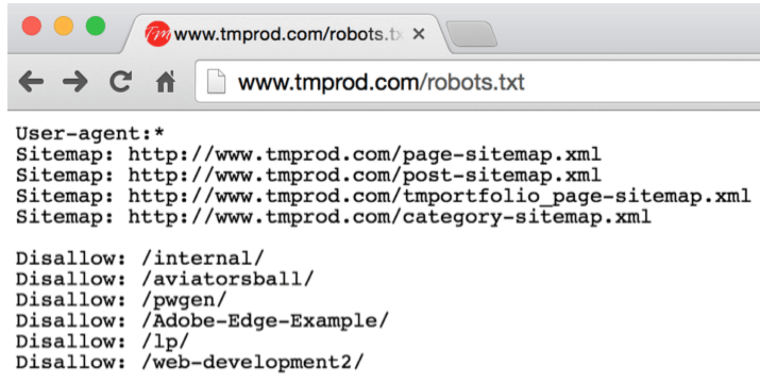

Architecture and Site Structure

The site structure defines the hierarchy of the site. It is important that these pages are prioritized to reflect the most important aspects of the business. How many clicks does it take the user to get to what they are looking for? Could this be improved? Do the page names and the navigation work as a part of the SEO strategy? What about usability for a visitor? Are there sitemaps to review and are they effectively and logically organized? Do they match the XML that was submitted for GWT/BWT?

These are all questions that you should be asking yourself when looking at the architecture of the client’s site.

File and URL Names

Many times you’ll see very specific page names and URLs rewritten by software. Are these readable for the end user? Would they serve as some type of breadcrumb for a visitor to find their way? Are they logical, and do they reference target keywords?

Example of Good URL: http://www.MyWebsite.com/about-us

Example of Bad URL: http://www.MyWebsite.com?navItemNumber=3421

Session IDs should also be kept out of your site URLs and left in cookies or other user-tracking mechanisms.

Other things I look for are capitalization versus lower case across the URL, filenames, and meta. A good rule of thumb is to always separate keywords with hyphens (not underscores) and make sure to carry the pattern throughout the site.

Be on the lookout for # characters in a URL. Commonly a developer or software will use one in a URL to change content on a page without really changing the page. It’s a neat trick, but instead of http://www.example.com/about, you get http://www.example.com#about, and Google will ignore everything after a # in a URL. That may be something you don’t want to happen.
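The URL checks in this section can be sketched in a few lines of Python. This is only a starting point: the list of session parameter names is a hypothetical example, and the warning messages are my own wording.

```python
from urllib.parse import urlsplit, parse_qs

# Hypothetical list of query parameter names that often carry session IDs.
SESSION_PARAMS = {"sid", "sessionid", "phpsessid", "jsessionid"}

def url_issues(url):
    """Return a list of readability/SEO warnings for one URL."""
    parts = urlsplit(url)
    issues = []
    if parts.path != parts.path.lower():
        issues.append("uppercase characters in path")
    if "_" in parts.path:
        issues.append("underscores instead of hyphens")
    if parts.fragment:
        issues.append("fragment (#) present")
    params = {name.lower() for name in parse_qs(parts.query)}
    if params & SESSION_PARAMS:
        issues.append("session ID in query string")
    elif params:
        issues.append("query parameters instead of a readable path")
    return issues

for url in (
    "http://www.MyWebsite.com/about-us",
    "http://www.MyWebsite.com?navItemNumber=3421",
    "http://www.example.com#about",
):
    print(url, url_issues(url))
```

Run against the URL column of your crawl export, this gives a quick list of pages to review by hand.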

KPIs

Does the company have KPIs (Key Performance Indicators) in place? They can be goals, engagement, sales, ranking, domain authority, and the list goes on and on. You’ll want to know what they are looking for; these go right along with goals. If they are specific keywords, then you’ll want these front and center.

Keywords

Which ones are being targeted? The client may be able to give you some information on which they’d like to be targeting, but there’s a good chance they won’t know which keywords they’re actually being ranked for. A great place to start is Google Webmaster Tools > optimization > content keywords. Also, SEMrush.com, Raven Tools research central and SpyFu.com are some awesome tools for keyword insight.

Is the website ranking for the keywords they intended to target? What is the competition level? Keyword difficulty? Costs? Start by answering some of these questions, and then start to look at the rankings of the targeted keywords with tools like Moz Rank Checker, Authority Labs, or the SEOBook Free Rank checker to check the difficulty of a keyword and its competition level.

Make distinctions between short and long-tail keywords/phrases that are being targeted and start to organize your spreadsheet by keyword concept. The Google AdWords Keyword Planner tool is a great place to start, and it’s free. Although it only gives you insight on paid search, you can assume that if a keyword is highly competitive on AdWords, then the same holds for organic search.

Using a tool like the Keyword Difficulty Tool at Moz, Wordstream or Advanced Web Rankings, you should be able to determine how competitive the industry is and what competition is in that space. Note the authority of the competition, what type of keyword relevance they have to the site domain level and page level, and if all the keywords are relevant.

Keyword research tends to be among the most time-consuming parts of a site audit, but it always reveals the most insight. Organization is key here. Get all the data you need from the above sources, export the info into a spreadsheet, and start organizing, highlighting, and prioritizing. Once you do, you’ll be surprised by how much you learn about the company, the industry, and its competitors. This is where your recommended strategy really starts to take shape.

Content

Now that you have a good grasp on keywords, it is time to look at on-page saturation. Are the keywords in the content of each page? Use a tool like Internet Marketing Ninjas Optimization Tool and Moz on-page grader. Are your keywords reflected in the title tags? Is the content more geared towards search engines (overly optimized) or towards site visitors? Are the meta description tag and H tags working with or against the content on-page? Are there misspellings or poor grammar?

It’s a good idea to also do a page-to-page content comparison; the Internet Marketing Ninjas Side-by-Side SEO Comparison Tool reveals a ton of great info. Read through a few pages of content. Are any plagiarized (check site content with a plagiarism tool)?

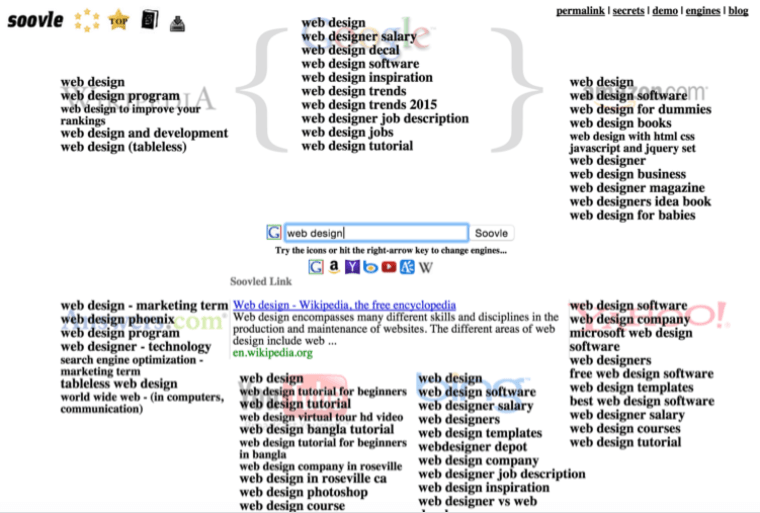

Now, take note of which keyword phrases are more informational and could be used for content development. For the most part this requires reading and searching for terms as you go, but tools like Topsy and Soovle can help with content ideas.

Duplicate Content

This can be a mother of an issue for a site. Use Copyscape, and search Google with quotes around the content, to help identify whether other variations of your content are out there. Are there duplicate versions of the homepage or other easily identifiable duplicate content? What is causing this? Duplicate content is bad for SEO because a search engine does not know which version to include or exclude from its index.

Most of the time they will only give any type of credit, authority, or “juice” (old-school term for link juice, the credit a page receives from external links inbound to that site/page) to the strongest, most authoritative version. Your content could become a replicated result.
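If you have the text of two pages on hand, a quick way to estimate how much they overlap is to compare word shingles with Jaccard similarity. To be clear, this is a generic technique I am swapping in for illustration, not how Copyscape or Google detect duplicates, and the sample sentences are hypothetical.

```python
def shingles(text, size=3):
    """Return the set of overlapping word sequences of the given size."""
    words = text.lower().split()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}

def jaccard_similarity(text_a, text_b, size=3):
    """Share of shingles the two texts have in common (0.0 to 1.0).

    Texts shorter than `size` words produce no shingles and score 0.0.
    """
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)

# Hypothetical page copy for illustration.
page_a = "We offer affordable dental care in New York"
page_b = "We offer affordable dental care in New Jersey"
print(round(jaccard_similarity(page_a, page_b), 2))
```

A score close to 1.0 suggests the pages are near-duplicates and worth a closer look; what threshold counts as "too similar" is a judgment call for your site.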

Some common culprits of duplicate content are:

- Printable pages

- Tracking IDs

- Post IDs and rewrites

- Extraneous URLs

You may also want to explore if Rel=Prev/Next could be used.

What is that? These are HTML link elements that identify relationships between component URLs in pagination. We have all been to a site with content that is paginated across two, three, or more pages. Search engines appreciate this practice. It means the first page of the sequence gets priority in the SERP. Subsequent pages will get indexed, but it’s highly unlikely that they will get featured on the SERP for related search queries.
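To make the markup concrete, here is a small Python sketch that builds the rel=prev/next tags for one page in a paginated series. The `?page=N` URL pattern and the function name are assumptions for illustration; use whatever pagination scheme the site already has.

```python
def pagination_links(base_url, page, total_pages):
    """Build rel=prev/next <link> tags for one page of a paginated series.

    The "?page=N" URL pattern is an assumption for illustration.
    """
    def page_url(n):
        # Page 1 lives at the base URL; later pages add a query parameter.
        return base_url if n == 1 else f"{base_url}?page={n}"

    tags = []
    if page > 1:
        tags.append(f'<link rel="prev" href="{page_url(page - 1)}">')
    if page < total_pages:
        tags.append(f'<link rel="next" href="{page_url(page + 1)}">')
    return tags

print("\n".join(pagination_links("http://www.example.com/articles", 2, 3)))
```

The first page only gets a `next` tag and the last page only gets a `prev` tag; the tags belong in the `<head>` of each page.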

Rel=Canonical

This is an important tag and usually very helpful, especially with data-driven sites. It tells a search engine what content is duplicated and where the master is; otherwise it will crawl and make its own decisions. Rel=canonical can easily be set in the HTTP headers of a site via the .htaccess file or PHP. It’s a hint to search engines but doesn’t affect how the page is displayed or perform any redirection at the server level. Check GWT (Google Webmaster Tools) to see if they are present.
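For spot checks outside of GWT, a short Python sketch can pull the canonical URL out of a page’s HTML and format the equivalent HTTP `Link` header value. The class and function names are my own, and the sample markup is hypothetical.

```python
from html.parser import HTMLParser

class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag in a page."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and attrs.get("href"):
            self.canonicals.append(attrs["href"])

def find_canonicals(html):
    """Return all canonical URLs declared in the HTML (ideally exactly one)."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals

def canonical_link_header(url):
    """Format the value of an HTTP Link header that declares a canonical URL."""
    return f'<{url}>; rel="canonical"'

# Hypothetical page markup for illustration.
html = '<html><head><link rel="canonical" href="http://www.example.com/about"></head></html>'
print(find_canonicals(html))
print("Link:", canonical_link_header("http://www.example.com/about"))
```

A page with no canonical, or with more than one, is worth flagging in your audit notes.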

A simple check of the robots.txt file will help you garner information that could prove helpful. You’ll get an idea of what pages are served to search engine spiders. Compare this to what you or your client intended to be indexed.
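Python’s standard library includes a robots.txt parser, so that comparison can be scripted. The sketch below uses a hypothetical robots.txt and URL list; in practice you would load the client’s real file and the URLs from your crawl.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; in practice, use the client's real file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /print/
"""

def blocked_urls(robots_txt, urls, user_agent="*"):
    """Return the URLs that the given robots.txt disallows for a user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]

urls = [
    "http://www.example.com/about-us",
    "http://www.example.com/admin/login",
    "http://www.example.com/print/about-us",
]
print(blocked_urls(ROBOTS_TXT, urls))
```

Any URL in the blocked list that the client expects to rank is a red flag, and so is any private URL that is not in it.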

Meta

Are the page-specific meta tags in place? Are there any identical, missing, short, or long titles and meta descriptions? GWT will report these, as will many other tools like Moz. Check the length: 70 characters for the title tag and 160 for the description, including spaces. Read through these descriptions: Are they properly descriptive? Are keywords targeted towards that page and content? Are the main keyword(s) included? Is there proper grammar and spelling? Remember that site crawl we talked about in the beginning of the audit? If you used Screaming Frog, you’d have all this data.
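The length check is easy to automate once you have titles and descriptions in hand. This Python sketch uses the 70 and 160 character limits mentioned above; the function name and sample values are hypothetical.

```python
TITLE_MAX = 70         # character limit for titles, as given in the text
DESCRIPTION_MAX = 160  # character limit for descriptions, as given in the text

def meta_issues(url, title, description):
    """Return warnings for a missing or overlong title or description."""
    issues = []
    checks = (("title", title, TITLE_MAX), ("description", description, DESCRIPTION_MAX))
    for label, text, limit in checks:
        text = (text or "").strip()
        if not text:
            issues.append(f"{url}: missing {label}")
        elif len(text) > limit:
            issues.append(f"{url}: {label} is {len(text)} characters (limit {limit})")
    return issues

# Hypothetical pages for illustration.
print(meta_issues("/about-us", "About Us", "Who we are and what we do."))
print(meta_issues("/services", "", "x" * 161))
```

Character counts are only a proxy for what search engines actually display, so treat the output as a list of pages to review rather than a pass/fail verdict.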

Images

Sometimes images can be a problem for a number of reasons. Are any broken (links to the actual files)? You should be able to see this in a crawl report or with tools like PowerMapper.com or a WordPress link checker. WordPress can automatically notify you when images and links break, which is very helpful.

Are the images the right size? Image sizes can really drag down load time. I’ve seen many times how optimizing the images of a site can have some great effects overall. Large images mean more downstream bandwidth, which affects site speed; try Raven Site Auditor for image info on your site. There are some awesome WordPress plugins for image compression on upload, like Smush.it, that are extremely helpful for non-Photoshop users.

Are ALT tags being used on images and are they linked anywhere? Alternative text is pretty important and should be a part of your best practices. Keywords injected into an informative alternative title for that image are helpful to users and search engines.
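To spot images without alternative text, you can scan a page’s HTML with Python’s built-in parser. This is a minimal sketch with hypothetical markup; note that it also flags empty `alt=""` attributes, which can be intentional for purely decorative images.

```python
from html.parser import HTMLParser

class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> whose alt text is missing or empty."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        if not (attrs.get("alt") or "").strip():
            self.missing.append(attrs.get("src") or "")

def images_missing_alt(html):
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing

# Hypothetical markup for illustration.
html = (
    '<img src="logo.png" alt="Company logo">'
    '<img src="team.jpg">'
    '<img src="hero.jpg" alt="">'
)
print(images_missing_alt(html))
```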

Also, consider linking. WordPress by default will link to the original image. Do you want this? Can it be helpful if they are crawled and show up in search results?

Lastly, is anyone stealing your images, or did you or your agency/developer accidentally use someone else’s images? It could be a problem either way. Note that the larger stock photo outlets are notorious for sending out cease-and-desist letters with penalties for copyright infringement. TinEye is a great reverse image search tool.

Conversion Basics

Are the forms on the site set up properly? This can be considered highly relative to the company and its goals for a conversion, sale, or lead. If possible, ask for information on goal funnels in GA and, if they are advertising, what average CPA (cost per acquisition) they are targeting.

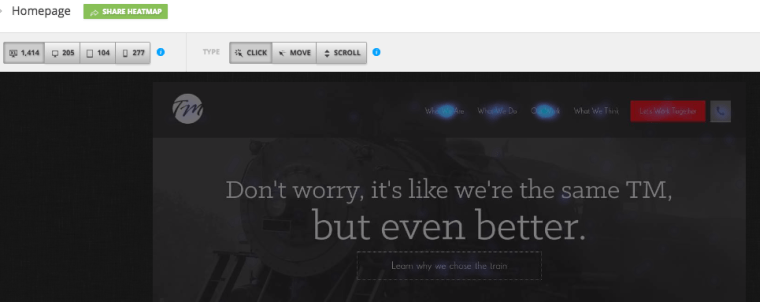

Are you or your client trying variations of landing pages (usually where sign-up or conversion forms are)? Do the web forms actually work (be sure to check desktop and mobile)? Do the forms ask for too much information that may be preventing users from completing them? A new tool that just came out of beta is HotJar, and I’m a fan personally. It can record user sessions, generate heatmaps, and, my favorite, track fallout from a form funnel on your site.

This is also a great time to check if they are using any type of CAPTCHA or CMS. Are those plugins updated and working properly? If they are saving to a database, is that behind https and secure? These are all common usability complexities that can cause hurdles for form conversion.

Links

Oh how I love thee, those DoFollow links of yesterday that were fun and meant so much to me. OK, now back to why they are still important. The Internet is made up of a ton of sites, and clicking links is the gateway to explore them. Links are primarily broken up into internal links (linking from one page to another page inside the same domain) and offsite links (those links that you link out to from your website or those that link into your website).

Internal Linking

Start by checking the internal linking structure of the website. Your internal links are important because you want people to stay on your website. If there is not good internal linking (navigation, footer, and in-content links) then this could result in people bouncing off your site.

There are many tools, like Meta Forensics, that will check your linking structure, which is also important to know. The anchor text popularity and distribution, ALT tags for links, and whether they are dofollow or nofollow should all be reviewed to meet the best practices and suggestions of GA. An important factor is that there are no broken links. If you are running a CMS like WordPress, there are plugins that can alert you if any are identified. Screaming Frog will do the same on a desktop. Why should this matter? User experience is one big factor.

External Links

Backlinks, sometimes referred to as IBLs (inbound links), are links from other websites to your website. Google and other search engines have treated them as popularity votes for your website. The iconic PageRank, patented by Larry Page and held by Stanford, was groundbreaking in helping to organize the web by ranking sites based on popularity. The basis of the patent is to look at the links inbound to a website, and the anchor text of those links, and count them as votes. Google would look at the popularity and quality of these links over time to help place you in a ranking among others in search results. During the early 2000s and throughout the decade, SEOs went on a wild ride playing cat and mouse with Google’s algorithm. The more links you got, the better you ranked. It is a rudimentary explanation, but I think you get it. Google revolutionized how the web was won. Today link popularity is still important; however, Google has matured, and simply getting links to get links is no longer a positive factor for any website. There are many signals involved that help you rank, but backlinks still come into play.

With backlinks there are many factors to be concerned about. A complete crawl will be helpful to know who links to you, what page they link to, and what anchor text they use, if any. My favorite tool for this, and the largest index of the web outside of Google, is Majestic SEO. Other tools include Moz Open Site Explorer, and of course you can see some data in Google Webmaster Tools.

Once you have a crawl and have aggregated the anchor text distribution, it is time to look at the quality of the backlinks. Why are you getting them? Does your site have too many of a certain link type? How many are followed versus nofollowed? How many are 301 redirects? Are there sitewide links from a domain? Are there scraper sites, forums, or other large sites linking to yours? This is all great information to help you determine whether you need to curate the links or look at disavowing some links in GWT (before you do this, you need to read up on the process to do it correctly).
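Aggregating anchor text and the followed/nofollowed split is a simple counting exercise once the backlink data is exported. In this Python sketch the row layout (linking domain, anchor text, nofollow flag) and the sample rows are assumptions; map them to whatever your backlink tool exports.

```python
from collections import Counter

# Hypothetical backlink rows: (linking domain, anchor text, is_nofollow).
backlinks = [
    ("blog.example.org", "Acme Widgets", False),
    ("forum.example.net", "acme widgets", True),
    ("news.example.com", "click here", False),
    ("dir.example.info", "", True),
]

def anchor_distribution(links):
    """Return each anchor text's share of all links, most common first."""
    counts = Counter(
        anchor.strip().lower() or "(no anchor text)"
        for _domain, anchor, _nofollow in links
    )
    total = sum(counts.values())
    return {anchor: count / total for anchor, count in counts.most_common()}

def nofollow_ratio(links):
    """Return the fraction of links that are nofollowed."""
    if not links:
        return 0.0
    return sum(1 for _domain, _anchor, nofollow in links if nofollow) / len(links)

print(anchor_distribution(backlinks))
print(nofollow_ratio(backlinks))
```

A distribution dominated by one exact-match phrase, or a profile with almost no nofollowed links, is the kind of pattern worth digging into.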

Once you have a list of your backlinks, you will see a ton of information that could help determine why you have certain rankings, attacks, or other problems and opportunities. And remember, there is no reason you can’t run a crawl on a competitor’s backlinks and compare them to yours. Who are your top 10? Do they have common backlinks you do not? What type of anchor text are they using? How many linking domains do they have? There could be a ton of low-hanging fruit to relish.
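Finding the backlinks competitors share that you lack comes down to a set comparison. Here is a minimal Python sketch; the domain names are hypothetical, and the function name is my own.

```python
from collections import Counter

def link_gap(own_domains, competitors):
    """List domains that link to competitors but not to you.

    competitors: dict of competitor name -> iterable of linking domains.
    Returns (domain, number of competitors it links to), highest count first.
    """
    own = {domain.lower() for domain in own_domains}
    counts = Counter()
    for domains in competitors.values():
        # Use a set so each competitor counts a domain only once.
        for domain in {d.lower() for d in domains}:
            if domain not in own:
                counts[domain] += 1
    return counts.most_common()

# Hypothetical linking domains for illustration.
own = ["blog.example.org", "news.example.com"]
competitors = {
    "competitor-a.example": ["news.example.com", "dir.example.info", "trade.example.net"],
    "competitor-b.example": ["dir.example.info", "blog.example.org"],
}
print(link_gap(own, competitors))
```

Domains that link to several competitors but not to you are usually the first outreach targets to consider.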

Social Signals

Whether we like it or not, social is here to stay for the time being. Social has popularity and usage, and when utilized well it can help with traffic and sales, so we have to consider it. Start with the brand name or products: are all the social properties claimed (use KnowEm)? Brand continuity is a big problem I see often. What properties are squatted on? Can you get those back? Is there a naming convention you can use that covers 80-90% of the properties?

If your site has a blog or a content marketing plan, how is this being shared across social networks? Are the pages well linked and / or is URL shortening being used? Check analytics and see where that content is working, and if your target social sites are not getting traffic to your website, then work on changing that.

Citations and Business Listings

How are the external citations, otherwise known as business listings? Local is a big deal, and I cross this over with social and linking in a big way. These directories show up in search results and can drive traffic, sales, and leads. Is the NAP (name, address, and phone) listed the same on all directories (Moz Local and Yext are good; Whitespark is my favorite)? Are the listings consistent, and do they target geo-specific phrases (“NY Dentist” as an example)? Are there any reviews? What do those say? What are the mentions of your business or client’s business? Google Alerts is the default simple notification of mentions of your company or brand. Ahrefs has some great mention reporting tools that will help with building more backlinks.
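A NAP consistency check can be roughed out in Python by normalizing each listing and grouping the ones that match. The business details and directory names below are hypothetical, and the normalization rules (lowercase, strip punctuation, digits-only phone) are a simplifying assumption.

```python
import re
from collections import defaultdict

def normalize_nap(name, address, phone):
    """Lowercase and strip punctuation so cosmetic differences are ignored."""
    def clean(text):
        text = re.sub(r"[^a-z0-9\s]", "", text.lower())
        return " ".join(text.split())
    return clean(name), clean(address), re.sub(r"\D", "", phone)

def group_listings(listings):
    """Group directories by normalized NAP; more than one group means a mismatch.

    listings: dict of directory name -> (name, address, phone).
    """
    groups = defaultdict(list)
    for directory, nap in listings.items():
        groups[normalize_nap(*nap)].append(directory)
    return dict(groups)

# Hypothetical listings for illustration.
listings = {
    "Directory A": ("Acme Dental", "12 Main St., New York, NY", "(212) 555-0100"),
    "Directory B": ("ACME Dental", "12 Main St, New York NY", "212-555-0100"),
    "Directory C": ("Acme Dental", "12 Main Street, New York, NY", "212.555.0100"),
}
for nap, directories in group_listings(listings).items():
    print(nap, directories)
```

Here the third listing lands in its own group because "Street" and "St" differ, which is exactly the kind of inconsistency to clean up.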

Editor’s Note: To learn more about site audit and its various aspects check out this SEJ Marketing Think Tank webinar recap, or watch the video below.

In Closing

Armed with the above information, you’ve got a few tasks to perform. This is not a complete, all-inclusive list, as there is so much more you can do and every bit of information leads to another discovery. However, I believe you will now have a ton of insights that should lead to ideas on how to change your site to increase rankings, get more traffic, leads, or sales.

Image Credits

Featured Image: Created by author for SEJ

Image #1: PubCon 2007 photo by Dwight Zahringer

All screenshots taken April 2015
