Apple’s Deep Fusion technology, the latest product of the company’s focus on machine learning and imaging, will help you take better pictures when you use iPhone 11 series smartphones.

What is Deep Fusion?

“Computational photography mad science” is how Apple’s SVP of Worldwide Marketing, Phil Schiller, described the capabilities of Deep Fusion when announcing the iPhone 11.

Apple’s press release puts it this way:

“Deep Fusion, coming later this fall, is a new image processing system enabled by the Neural Engine of A13 Bionic. Deep Fusion uses advanced machine learning to do pixel-by-pixel processing of photos, optimizing for texture, details and noise in every part of the photo.”

Deep Fusion will work with the dual-camera (Ultra Wide and Wide) system on the iPhone 11.

It also works with the triple-camera system (Ultra Wide, Wide and Telephoto) on the iPhone 11 Pro range.

How Deep Fusion works

Deep Fusion fuses nine separate exposures together into a single image, Schiller explained.

When you capture an image in this mode, your iPhone’s camera records four short images, four secondary images and one long exposure.

Before you press the shutter button, it has already shot the four short images and the four secondary images. When you press the shutter button, it takes the one long exposure, and in just one second the Neural Engine analyses the combination and selects the best among them.

In that time, Deep Fusion on your A13 chip goes through every pixel on the image (all 24 million of them) to select and optimize each one of them for detail and noise – all in a second. That’s why Schiller calls it “mad science”.
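Apple has not published how Deep Fusion weighs its nine frames, so what follows is only a toy sketch of per-pixel fusion in general, not Apple’s method. It assumes small grayscale frames stored as nested lists, and it uses a hypothetical `local_contrast` score as a stand-in for the learned model: for each pixel, frames that show more local detail get more weight in the blend.

```python
from typing import List

Frame = List[List[float]]  # grayscale image, values in [0, 1]


def local_contrast(frame: Frame, y: int, x: int) -> float:
    """Hypothetical detail score: distance from the mean of the 4-neighbours."""
    height, width = len(frame), len(frame[0])
    neighbours = [
        frame[ny][nx]
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
        if 0 <= ny < height and 0 <= nx < width
    ]
    if not neighbours:  # a 1x1 frame has no neighbours to compare against
        return 0.0
    return abs(frame[y][x] - sum(neighbours) / len(neighbours))


def fuse(frames: List[Frame], eps: float = 1e-6) -> Frame:
    """Blend frames pixel by pixel, weighting each frame by its detail score."""
    height, width = len(frames[0]), len(frames[0][0])
    fused = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # eps keeps the weights non-zero when every frame is flat here
            weights = [local_contrast(f, y, x) + eps for f in frames]
            total = sum(weights)
            fused[y][x] = sum(w * f[y][x] for w, f in zip(weights, frames)) / total
    return fused


# Made-up 2x2 frames standing in for the nine exposures.
detailed = [[0.0, 1.0], [1.0, 0.0]]
flat = [[0.5, 0.5], [0.5, 0.5]]
nine_frames = [detailed] * 4 + [flat] * 5
result = fuse(nine_frames)
print(result)  # values end up very close to the detailed frame
```

A simple contrast score like this would reward noise as readily as texture, which hints at why a trained model is a better fit for the job than a fixed rule.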

The result?

Huge amounts of image detail, impressive dynamic range and very low noise. You’ll really see this if you zoom in on detail, particularly with textiles.

This is why Apple’s example image featured a man in a multi-colored woollen jumper.

“This kind of image would not have been possible before,” said Schiller. The company also claims this to be the first time a neural engine is “responsible for generating the output image”.

Apple

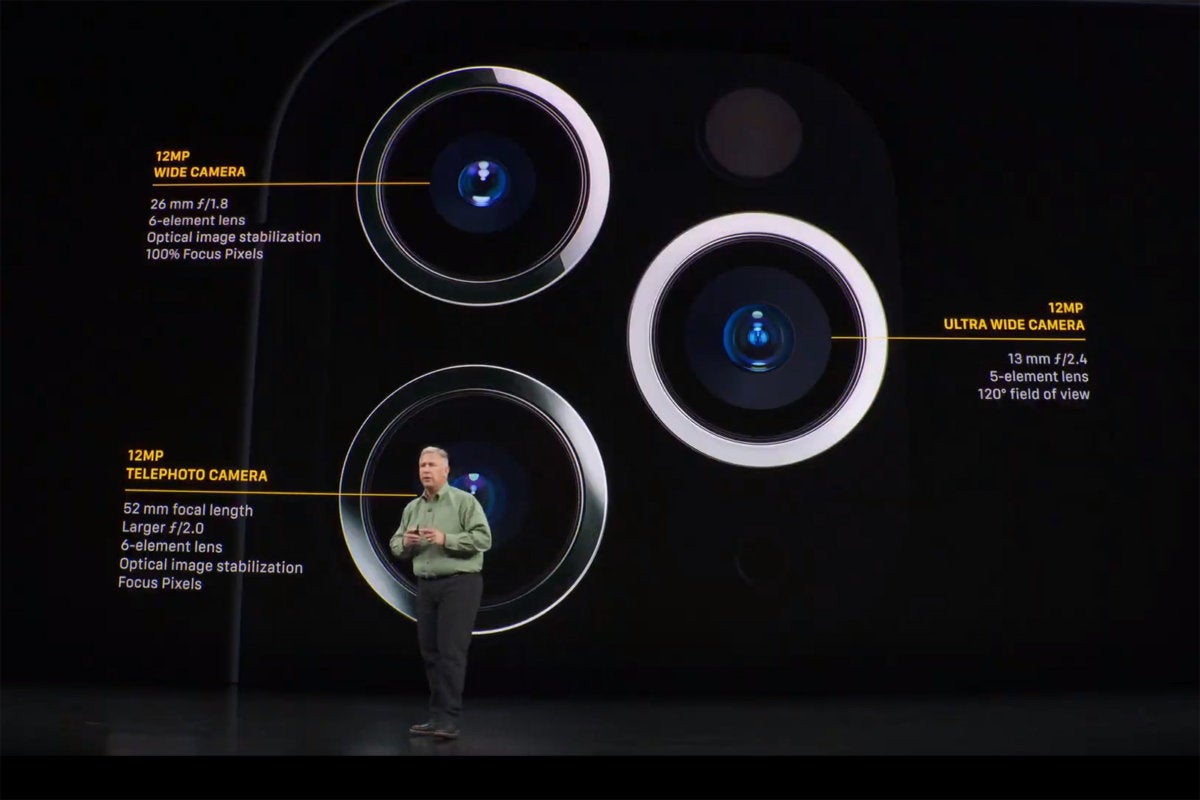

AppleThe iPhone 11 Pro now has three lenses on the back.

Some detail about the cameras

The dual-camera system on iPhone 11 consists of two 12-megapixel cameras: a Wide camera with a 26mm focal length and f/1.8 aperture, and an Ultra Wide camera with a 13mm focal length and f/2.4 aperture that delivers images with a 120-degree field of view.

The Pro range adds a third 12-megapixel Telephoto camera with a 52mm focal length and f/2.0 aperture.

You’ll find optical image stabilization in both the telephoto and wide cameras.

The front-facing camera has also been improved. The 12-megapixel camera can now capture 4K/60p and 1080/120p slow-motion video.

That Night mode thing

Apple is also using machine intelligence in the iPhone 11 to provide Night mode.

This works by capturing multiple frames at multiple shutter speeds. These are then combined to create better images.

That means less motion blur and more detail in night time shots – this should also be seen as Apple’s response to Google’s Night Sight feature in Pixel phones, though Deep Fusion takes this much further.
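As a rough illustration of why combining frames shot at different shutter speeds helps, the sketch below merges frames by summing the light each one recorded and dividing by the total exposure time. Apple has not described Night mode at this level, so the function name `merge_exposures`, the flattened-list frame format and the weighting scheme are all assumptions; a real pipeline would also need to align frames and deal with motion and clipped highlights.

```python
from typing import List, Sequence


def merge_exposures(
    frames: Sequence[Sequence[float]], shutter_seconds: Sequence[float]
) -> List[float]:
    """Estimate per-pixel brightness per second from several exposures.

    frames[i][p] is the light recorded at pixel p during shutter_seconds[i].
    Summing the recorded light and dividing by the total exposure time equals
    averaging the per-frame estimates weighted by shutter time, so longer
    exposures count for more.
    """
    if not frames or len(frames) != len(shutter_seconds):
        raise ValueError("need one shutter time per frame")
    if any(t <= 0 for t in shutter_seconds):
        raise ValueError("shutter times must be positive")
    total_time = sum(shutter_seconds)
    pixel_count = len(frames[0])
    return [
        sum(frame[p] for frame in frames) / total_time
        for p in range(pixel_count)
    ]


# Hypothetical two-pixel scene shot at three shutter speeds (values are made up).
shutter = [0.1, 0.2, 0.4]
scene = [10.0, 20.0]  # assumed true brightness per second
shots = [[value * t for value in scene] for t in shutter]
merged = merge_exposures(shots, shutter)
print(merged)  # close to the assumed scene values
```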

What’s interesting, of course, is that Apple seems to plan to sit on the new feature until later this fall, when Google may introduce Pixel 4.

Apple claims a 20 percent performance improvement over the previous chip.

All about the chip

Underpinning all this ML activity is the Neural Engine inside Apple’s A13 Bionic processor.

During its onstage presentation, Apple claimed the chip to be the fastest CPU ever inside a smartphone, with the fastest GPU to boot.

It doesn’t stop there.

The company also claims the chip to be the most power efficient it has made so far, which is why it has been able to deliver up to four hours of additional battery life in the iPhone 11 Pro and five hours in the 11 Pro Max.

To achieve this, Apple has worked on a micro level, placing thousands of voltage and clock gates that act to shut off power to elements of the chip when those parts aren’t in use.

The chip includes 8.5 billion transistors and is capable of handling one trillion operations per second. You’ll find two performance cores and four efficiency cores in the CPU, four cores in the GPU and eight cores in the Neural Engine.

The result?

Yes, your iPhone 11 will last longer between charges and will seem faster than the iPhone you own today (if you own one at all).

But it also means your device is capable of doing hard computational tasks such as analysing and optimizing 24 million pixels in an image within one second.
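To put those figures side by side, the back-of-envelope calculation below divides the quoted one trillion operations per second by the 24 million pixels processed in one second. It assumes, purely for illustration, that the chip’s entire quoted throughput is available to a single photo, which Apple does not claim.

```python
PIXELS = 24_000_000                 # pixels per image, as quoted above
OPS_PER_SECOND = 1_000_000_000_000  # one trillion operations per second
TIME_BUDGET_S = 1.0                 # processing time quoted for one photo


def ops_per_pixel(pixels: int, ops_per_second: int, seconds: float) -> float:
    """Upper bound on operations available per pixel within the time budget."""
    return ops_per_second * seconds / pixels


budget = ops_per_pixel(PIXELS, OPS_PER_SECOND, TIME_BUDGET_S)
print(f"About {budget:,.0f} operations per pixel")  # About 41,667 operations per pixel
```

Under that generous assumption, the budget is in the tens of thousands of operations for every pixel.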

What can developers do?

I’d like you to think briefly about that and then consider that Apple is opening up a whole bunch of new machine learning features to developers in iOS 13.

These include things like:

- On-device model personalization in Core ML 3 – you can build ML models that can be personalized for a user on the device, protecting privacy.

- Improved Vision frameworks, including a feature called Image Saliency, which uses ML to figure out which elements of an image a user is most likely to focus on. You’ll also find text recognition and search in images.

- New Speech and Sound frameworks

- ARKit delivers support for using the front and back cameras at once. It also offers people occlusion, which lets you hide and show objects as people move around your AR experience.

This kind of ML plainly has significance for training the models used in image optimization, and it feeds into the also-upgraded (and increasingly AI-driven) Metal.

I could continue extending this list but what I’m trying to explain is that Apple’s Deep Fusion, while remarkable in itself, can also be seen as a poster child for the kind of machine learning augmentation it is enabling its platforms to support.

Right now we have ML models available that developers can use to build applications for images, text, speech and sound.

In future (as Apple’s U1 chip and its magical AirDrop directional analysis show) there will be opportunities to combine Apple’s ML systems with sensor-gathered data to detect things like location, direction, even which way your eyes are facing.

Now, it’s not (yet) obvious what solutions will be unlocked by these technologies, but the big picture is actually a bigger picture than Deep Fusion provides. Apple appears to have turned the iPhone into the world’s most powerful mobile ML platform.

Deep Fusion illustrates this. Now what will you build?


Copyright © 2019 IDG Communications, Inc.