Did you know that while the Ahrefs blog is powered by WordPress, much of the rest of the site is powered by JavaScript libraries like React?

Most websites use some kind of JavaScript to add interactivity and to improve user experience. Some use it for menus, pulling in products or prices, grabbing content from multiple sources, or in some cases, for everything on the site. The reality of the current web is that JavaScript is ubiquitous.

As Google’s John Mueller said:

The web has moved from plain HTML — as an SEO you can embrace that. Learn from JS devs & share SEO knowledge with them. JS’s not going away.— 🍌 John 🍌 (@JohnMu) August 8, 2017

I’m not saying that SEOs need to go out and learn how to program JavaScript. It’s quite the opposite. SEOs mostly need to know how Google handles JavaScript and how to troubleshoot issues. In very few cases will an SEO even be allowed to touch the code. My goal with this post is to help you learn both.

JavaScript SEO is a part of Technical SEO (Search Engine Optimization) that seeks to make JavaScript-heavy websites easy to crawl and index, as well as search-friendly. The goal is to have these websites be found and rank higher in search engines.

Is JavaScript bad for SEO; is JavaScript evil? Not at all. It’s just different from what many SEOs are used to, and there’s a bit of a learning curve. People do tend to overuse it for things where there’s probably a better solution, but you have to work with what you have at times. Just know that JavaScript isn’t perfect and it isn’t always the right tool for the job. It can’t be parsed progressively, unlike HTML and CSS, and it can be heavy on page load and performance. In many cases, you may be trading performance for functionality.

In the early days of search engines, a downloaded HTML response was enough to see the content of most pages. Thanks to the rise of JavaScript, search engines now need to render many pages as a browser would so they can see content how a user sees it.

The system that handles the rendering process at Google is called the Web Rendering Service (WRS). Google has provided a simplistic diagram to cover how this process works.

Let’s say we start the process at URL.

1. Crawler

The crawler sends GET requests to the server. The server responds with headers and the contents of the file, which then gets saved.

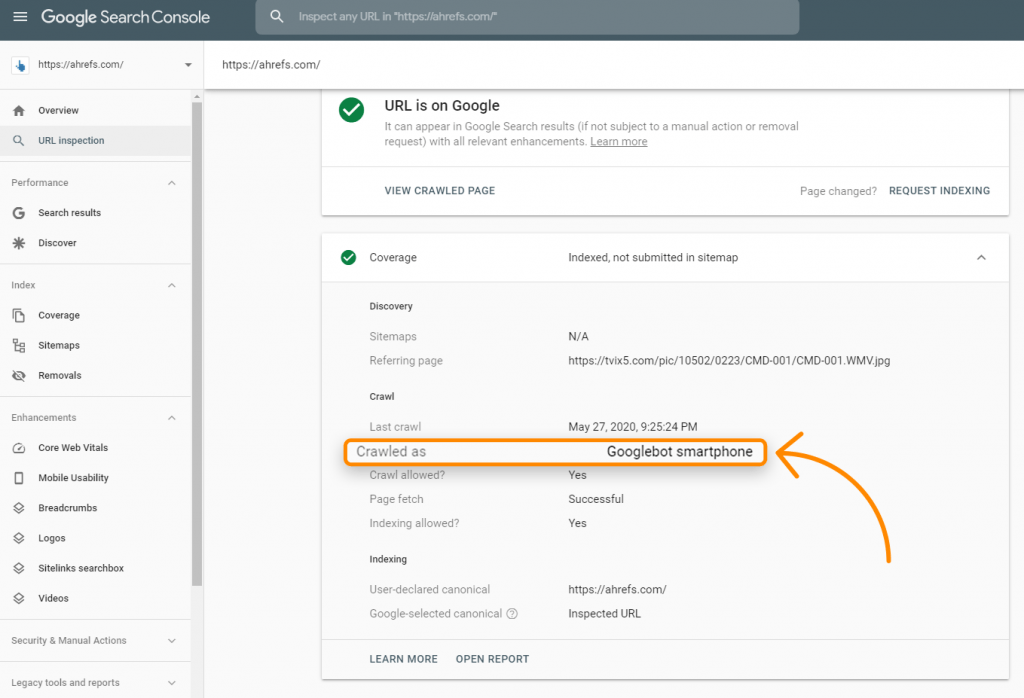

The request is likely to come from a mobile user-agent since Google is mostly on mobile-first indexing now. You can check to see how Google is crawling your site with the URL Inspection Tool inside Search Console. When you run this for a URL, check the Coverage information for “Crawled as,” and it should tell you whether you’re still on desktop indexing or mobile-first indexing.

The requests mostly come from Mountain View, CA, USA, but they also do some crawling for locale-adaptive pages outside of the United States. I mention this because some sites will block or treat visitors from a specific country or using a particular IP in different ways, which could cause your content not to be seen by Googlebot.

Some sites may also use user-agent detection to show content to a specific crawler. Especially with JavaScript sites, Google may be seeing something different than a user. This is why Google tools such as the URL Inspection Tool inside Google Search Console, the Mobile-Friendly Test, and the Rich Results Test are important for troubleshooting JavaScript SEO issues. They show you what Google sees and are useful for checking if Google may be blocked and if they can see the content on the page. I’ll cover how to test this in the section about the Renderer because there are some key differences between the downloaded GET request, the rendered page, and even the testing tools.

It’s also important to note that while Google states the output of the crawling process as “HTML” on the image above, in reality, they’re crawling and storing all resources needed to build the page: HTML pages, JavaScript files, CSS, XHR requests, API endpoints, and more.

2. Processing

There are a lot of systems obfuscated by the term “Processing” in the image. I’m going to cover a few of these that are relevant to JavaScript.

Resources and Links

Google does not navigate from page to page as a user would. Part of Processing is to check the page for links to other pages and files needed to build the page. These links are pulled out and added to the crawl queue, which is what Google is using to prioritize and schedule crawling.

Google will pull resource links (CSS, JS, etc.) needed to build a page from things like `<link>` tags. However, links to other pages need to be in a specific format for Google to treat them as links. Internal and external links need to be an `<a>` tag with an href attribute. There are many ways to make links work for users with JavaScript that are not search-friendly.

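Good:

- An `<a>` tag with an href: simple is good
- An `<a>` tag with an href plus a JavaScript click handler: still okay

Bad:

- An `<a>` tag without an href: nope, no href
- An `<a>` tag whose href does not hold a real URL: nope, missing link
- An element other than `<a>` with a click handler: not the right HTML element
- An `<a>` tag whose href points nowhere: no link

Button, ng-click, there are many more ways this can be done incorrectly.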
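The difference between the two groups can be sketched in code. The markup strings below are illustrative examples of the patterns described above, and `hasCrawlableHref` is a hypothetical helper, not Google's actual logic. It only checks for an `<a>` tag whose href holds something that looks like a URL.

```javascript
// Hypothetical helper: a rough approximation of "can a crawler follow this link?"
// It is not Google's parser; it only looks for <a ... href="..."> with a real URL.
function hasCrawlableHref(markup) {
  const match = /<a\b[^>]*\bhref\s*=\s*"([^"]*)"/i.exec(markup);
  if (!match) return false; // not an <a> tag, or no href attribute
  const href = match[1].trim();
  if (href === '' || href.startsWith('#')) return false; // no destination
  if (/^javascript:/i.test(href)) return false; // runs script instead of naming a URL
  return true;
}

// Illustrative markup; goTo() is a made-up navigation function.
const examples = [
  '<a href="/page">simple is good</a>',
  '<a href="/page" onclick="goTo(\'page\')">still okay</a>',
  '<a onclick="goTo(\'page\')">nope, no href</a>',
  '<a href="javascript:goTo(\'page\')">nope, missing link</a>',
  '<span onclick="goTo(\'page\')">not the right HTML element</span>',
  '<a href="#">no link</a>',
];

for (const markup of examples) {
  console.log(hasCrawlableHref(markup) ? 'crawlable:    ' : 'not crawlable:', markup);
}
```

Only the first two examples pass the check, which matches the Good and Bad groupings.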

It’s also worth noting that internal links added with JavaScript will not get picked up until after rendering. That should be relatively quick and not a cause for concern in most cases.

Caching

Every file that Google downloads, including HTML pages, JavaScript files, CSS files, etc., is going to be aggressively cached. Google will ignore your cache timings and fetch a new copy when they want to. I’ll talk a bit more about this and why it’s important in the Renderer section.

Duplicate elimination

Duplicate content may be eliminated or deprioritized from the downloaded HTML before it gets sent to rendering. With app shell models, very little content and code may be shown in the HTML response. In fact, every page on the site may display the same code, and this could be the same code shown on multiple websites. This can sometimes cause pages to be treated as duplicates and not immediately go to rendering. Even worse, the wrong page or even the wrong site may show in search results. This should resolve itself over time but can be problematic, especially with newer websites.

Most Restrictive Directives

Google will choose the most restrictive statements between HTML and the rendered version of a page. If JavaScript changes a statement and that conflicts with the statement from HTML, Google will simply obey whichever is the most restrictive. Noindex will override index, and noindex in HTML will skip rendering altogether.
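As a rough illustration of this rule, here is a minimal sketch. The function name and the simplified `'index'` / `'noindex'` values are assumptions made for the example; real robots meta tags carry more values than these two.

```javascript
// Sketch of the "most restrictive wins" rule described above.
// Directives are simplified to 'index' | 'noindex' for illustration.
function resolveIndexing(htmlDirective, renderedDirective) {
  if (htmlDirective === 'noindex') {
    // noindex in the raw HTML: the page is not sent to rendering at all.
    return { indexable: false, rendered: false };
  }
  // Otherwise the page is rendered, and a noindex set by JavaScript still wins.
  return { indexable: renderedDirective !== 'noindex', rendered: true };
}

console.log(resolveIndexing('index', 'index'));   // { indexable: true, rendered: true }
console.log(resolveIndexing('index', 'noindex')); // { indexable: false, rendered: true }
console.log(resolveIndexing('noindex', 'index')); // { indexable: false, rendered: false }
```

The last case is the one that trips people up: JavaScript cannot undo a noindex that is already present in the HTML response, because the JavaScript never runs.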

3. Render queue

Every page goes to the renderer now. One of the biggest concerns from many SEOs with JavaScript and two-stage indexing (HTML then rendered page) is that pages might not get rendered for days or even weeks. When Google looked into this, they found pages went to the renderer at a median time of 5 seconds, and the 90th percentile was minutes. So the amount of time between getting the HTML and rendering the pages should not be a concern in most cases.

4. Renderer

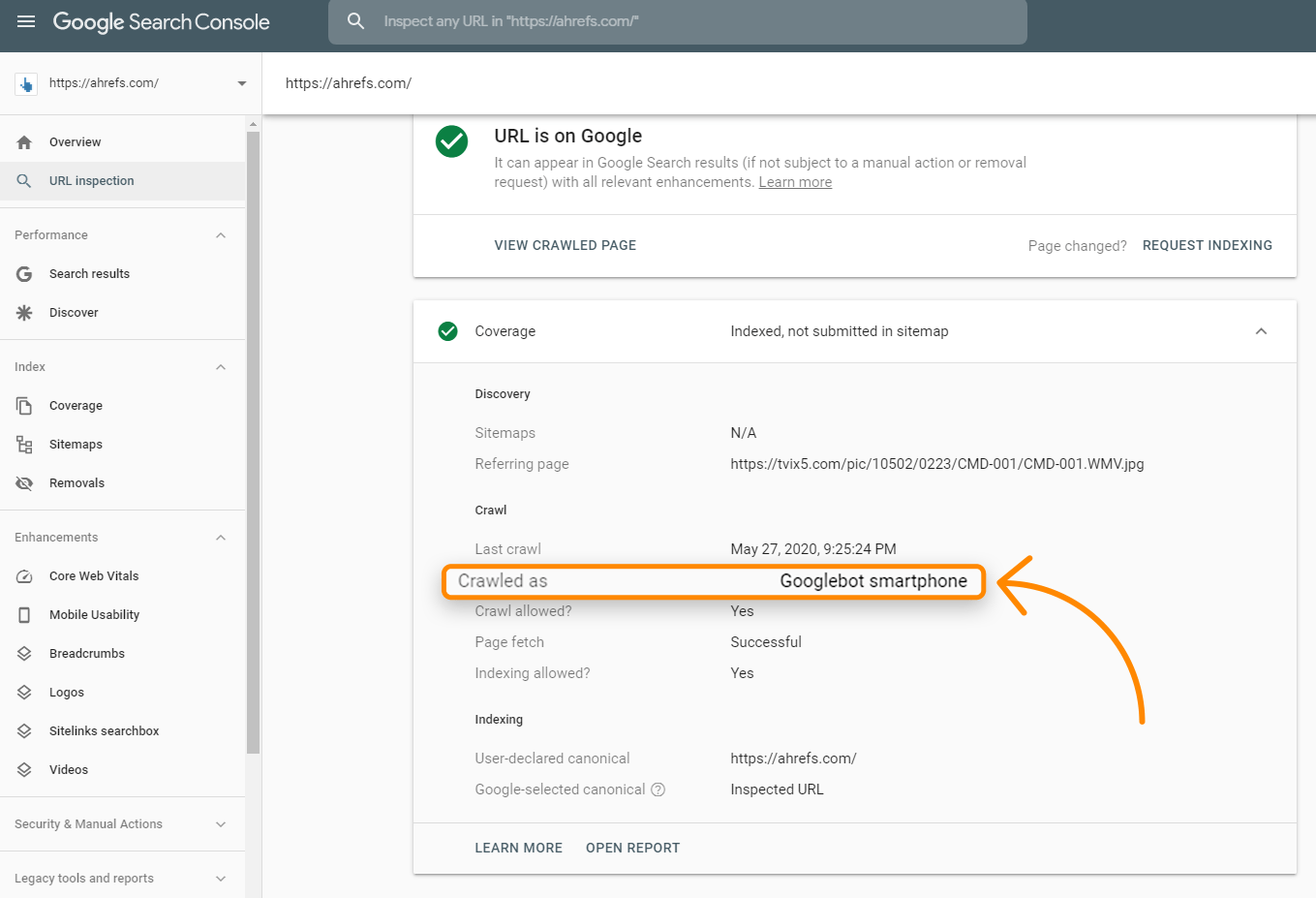

The renderer is where Google renders a page to see what a user sees. This is where they’re going to process the JavaScript and any changes made by JavaScript to the Document Object Model (DOM).

For this, Google is using a headless Chrome browser that is now “evergreen,” which means it should use the latest Chrome version and support the latest features. Until recently, Google was rendering with Chrome 41, so many features were not supported.

Google has more info on the Web Rendering Service (WRS), which includes things like denying permissions, being stateless, flattening light DOM and shadow DOM, and more that is worth reading.

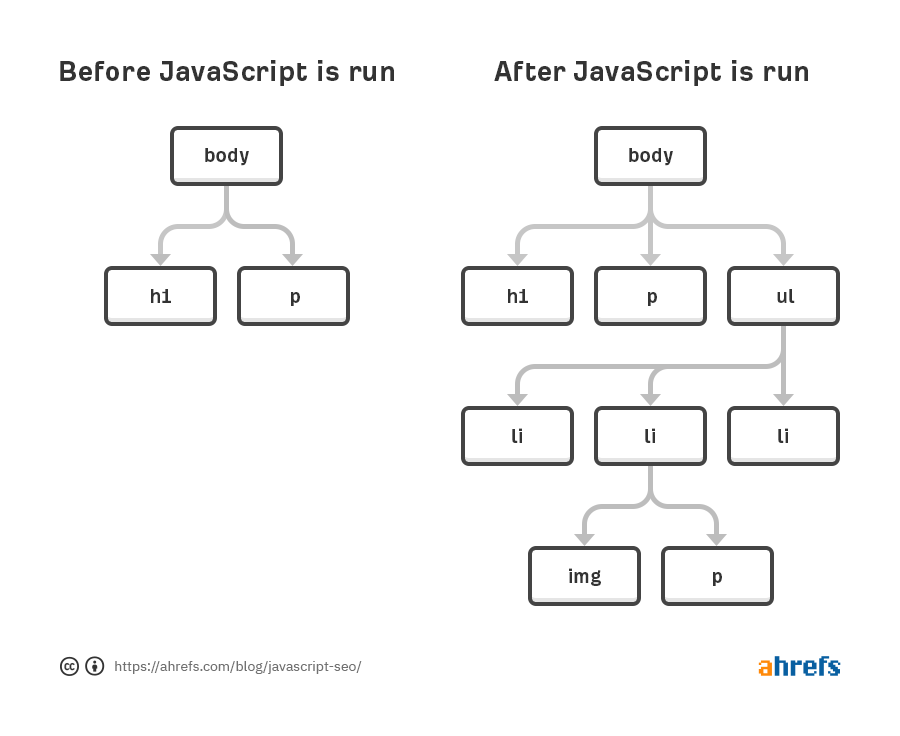

Rendering at web-scale may be the 8th wonder of the world. It’s a serious undertaking and takes a tremendous amount of resources. Because of the scale, Google is taking many shortcuts with the rendering process to speed things up. At Ahrefs, we are the only major SEO tool that renders web pages at scale, and we manage to render ~150M pages a day to make our link index more complete. Rendering lets us check for JavaScript redirects and find links inserted with JavaScript, which we mark with a JS tag in the link reports.

Cached Resources

Google is relying heavily on caching resources. Pages are cached; files are cached; API requests are cached; basically, everything is cached before being sent to the renderer. They’re not going out and downloading each resource for every page load, but instead using cached resources to speed up this process.

This can lead to some impossible states where previous file versions are used in the rendering process and the indexed version of a page may contain parts of older files. You can use file versioning or content fingerprinting to generate new file names when significant changes are made so that Google has to download the updated version of the resource for rendering.
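Build tools usually handle content fingerprinting for you, but the idea is simple enough to sketch. The example below assumes Node.js; the `fingerprintedName` helper, the 8-character hash length, and the name.hash.ext pattern are illustrative choices, not a standard.

```javascript
const crypto = require('node:crypto');

// Build a file name that changes whenever the file contents change.
// The hash length and naming pattern are illustrative choices.
function fingerprintedName(fileName, contents) {
  const hash = crypto.createHash('sha256').update(contents).digest('hex').slice(0, 8);
  const dot = fileName.lastIndexOf('.');
  if (dot === -1) return `${fileName}.${hash}`;
  return `${fileName.slice(0, dot)}.${hash}${fileName.slice(dot)}`;
}

const v1 = fingerprintedName('app.js', 'console.log("v1");');
const v2 = fingerprintedName('app.js', 'console.log("v2");');
console.log(v1, v2); // two different names, so a cached copy of v1 is not reused for v2
```

Because the file name is derived from the contents, an unchanged file keeps its name and stays cacheable, while any change produces a URL Google has not cached yet.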

No Fixed Timeout

A common SEO myth is that the renderer only waits five seconds to load your page. While it’s always a good idea to make your site faster, this myth doesn’t really make sense with the way Google caches files mentioned above. They’re basically loading a page with everything cached already. The myth comes from the testing tools like the URL Inspection Tool where resources are fetched live and they need to set a reasonable limit.

There is no fixed timeout for the renderer. What they are likely doing is something similar to what the public Rendertron does. They likely wait for something like networkidle0 where no more network activity is occurring and also set a maximum amount of time in case something gets stuck or someone is trying to mine bitcoin on their pages.
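To make the "wait for idle, but cap the wait" idea concrete, here is a small simulation. It is not Google's implementation. The 500 ms idle window mirrors what Puppeteer documents for `networkidle0`; the 10-second cap and the `renderStopTime` function are assumptions for illustration.

```javascript
// Estimate when a renderer using a "network idle" rule would stop waiting.
// requests: [{ start, end }] in ms since navigation; end may be Infinity for a stuck request.
function renderStopTime(requests, idleMs = 500, maxMs = 10000) {
  const sorted = [...requests].sort((a, b) => a.start - b.start);
  let busyUntil = 0; // latest time at which any request seen so far was still in flight
  for (const req of sorted) {
    if (req.start >= busyUntil + idleMs) break; // a full idle window passed before this request
    busyUntil = Math.max(busyUntil, req.end);
  }
  return Math.min(busyUntil + idleMs, maxMs);
}

// Three requests; the last one finishes at 1300 ms, so idle is reached at 1800 ms.
console.log(renderStopTime([
  { start: 0, end: 200 },
  { start: 150, end: 900 },
  { start: 1000, end: 1300 },
])); // 1800

// A request that never finishes hits the cap instead.
console.log(renderStopTime([{ start: 0, end: Infinity }])); // 10000
```

A page with a few fast requests is done shortly after the last one completes, and only a page that keeps the network busy runs into the maximum.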

What Googlebot Sees

Googlebot doesn’t take action on webpages. They’re not going to click things or scroll, but that doesn’t mean they don’t have workarounds. For content, as long as it is loaded in the DOM without a needed action, they will see it. I will cover this more in the troubleshooting section but basically, if the content is in the DOM but just hidden, it will be seen. If it’s not loaded into the DOM until after a click, then the content won’t be found.

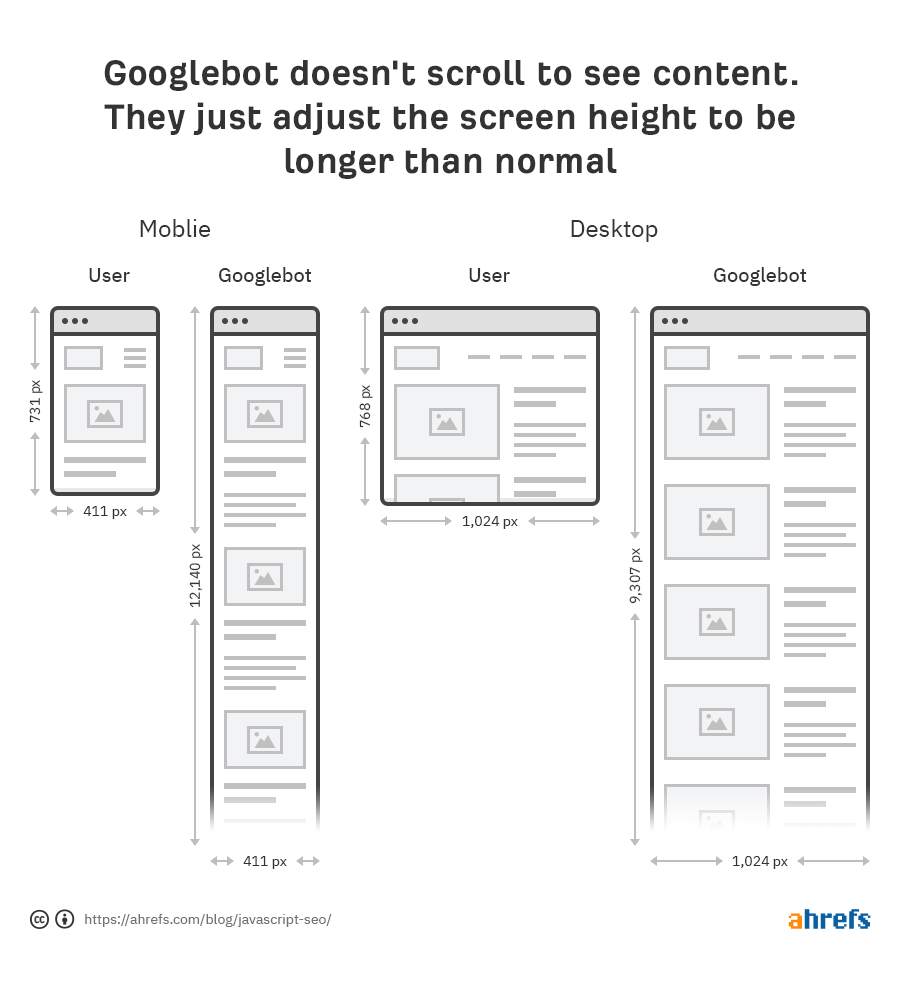

Google doesn’t need to scroll to see your content either because they have a clever workaround to see the content. For mobile, they load the page with a screen size of 411×731 pixels and resize the length to 12,140 pixels. Essentially, it becomes a really long phone with a screen size of 411×12140 pixels. For desktop, they do the same and go from 1024×768 pixels to 1024×9307 pixels.

Another interesting shortcut is that Google doesn’t paint the pixels during the rendering process. It takes time and additional resources to finish a page load, and they don’t really need to see the final state with the pixels painted. They just need to know the structure and the layout and they get that without having to actually paint the pixels. As Martin Splitt from Google puts it:

https://youtube.com/watch?v=Qxd_d9m9vzo&t=154

In Google search we don’t really care about the pixels because we don’t really want to show it to someone. We want to process the information and the semantic information so we need something in the intermediate state. We don’t have to actually paint the pixels.

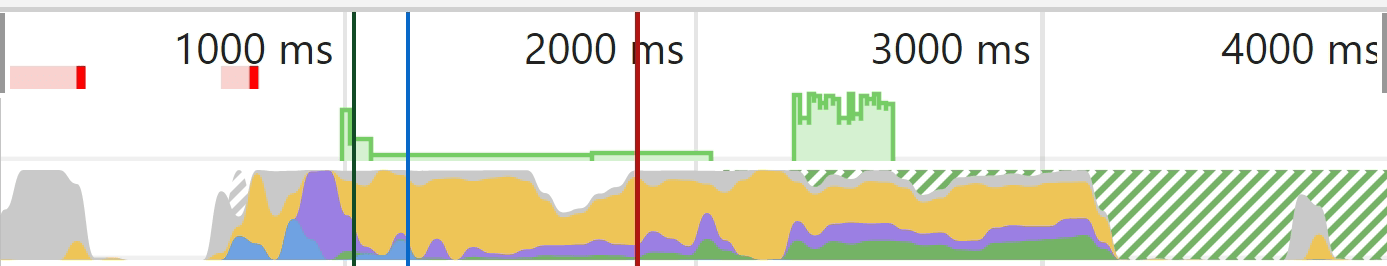

A visual might help explain what is cut out a bit better. In Chrome DevTools, if you run a test on the Performance tab, you get a loading chart. The solid green part here represents the painting stage, and for Googlebot that never happens, so they save resources.

Gray = downloads

Blue = HTML

Yellow = JavaScript

Purple = Layout

Green = Painting

5. Crawl queue

Google has a resource that talks a bit about crawl budget, but you should know that each site has its own crawl budget, and each request has to be prioritized. Google also has to balance your site crawling vs. every other site on the internet. Newer sites in general or sites with a lot of dynamic pages will likely be crawled slower. Some pages will be updated less often than others, and some resources may also be requested less frequently.

One ‘gotcha’ with JavaScript sites is they can update only parts of the DOM. Browsing to another page as a user may not update some aspects like title tags or canonical tags in the DOM, but this may not be an issue for search engines. Remember, Google loads each page stateless, so they’re not saving previous information and are not navigating between pages. I’ve seen SEOs get tripped up thinking there is a problem because of what they see after navigating from one page to another, such as a canonical tag that doesn’t update, but Google may never see this state. Devs can fix this by updating the state using what’s called the History API, but again it may not be a problem. Refresh the page and see what you see or better yet run it through one of Google’s testing tools to see what they see. More on those in a second.
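For developers who do want the tags to stay in sync during in-app navigation, a route-change handler could look roughly like the sketch below. `onRouteChange` and the `route` object are hypothetical; `history.pushState`, `document.title`, and `querySelector` are standard browser APIs. The window object is passed in as `win` only to keep the sketch testable outside a browser.

```javascript
// Hypothetical route-change handler for a single-page app.
// In a browser you would call it as: onRouteChange(window, { path: '/pricing', title: 'Pricing' })
function onRouteChange(win, route) {
  // Update the address bar without a full page load (History API).
  win.history.pushState({}, '', route.path);
  // Keep per-page tags in sync with the new URL.
  win.document.title = route.title;
  const canonical = win.document.querySelector('link[rel="canonical"]');
  if (canonical) canonical.setAttribute('href', win.location.origin + route.path);
}
```

Most frameworks and head-management modules do this for you, so treat it as an explanation of what happens rather than code to copy.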

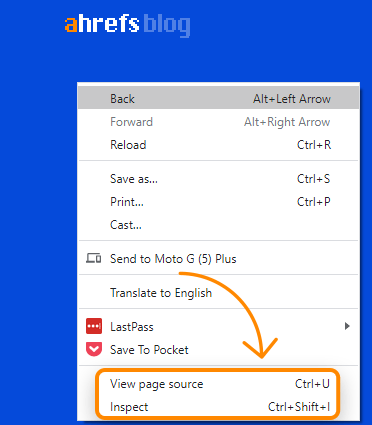

View-source vs. Inspect

When you right-click in a browser window, you’ll see a couple of options for viewing the source code of the page and for inspecting the page. View-source is going to show you the same as a GET request would. This is the raw HTML of the page. Inspect shows you the processed DOM after changes have been made and is closer to the content that Googlebot sees. It’s basically the updated and latest version of the page. You should use inspect over view-source when working with JavaScript.

Google Cache

Google’s cache is not a reliable way to check what Googlebot sees. It’s usually the initial HTML, although it’s sometimes the rendered HTML or an older version. The system was made to see the content when a website is down. It’s not particularly useful as a debug tool.

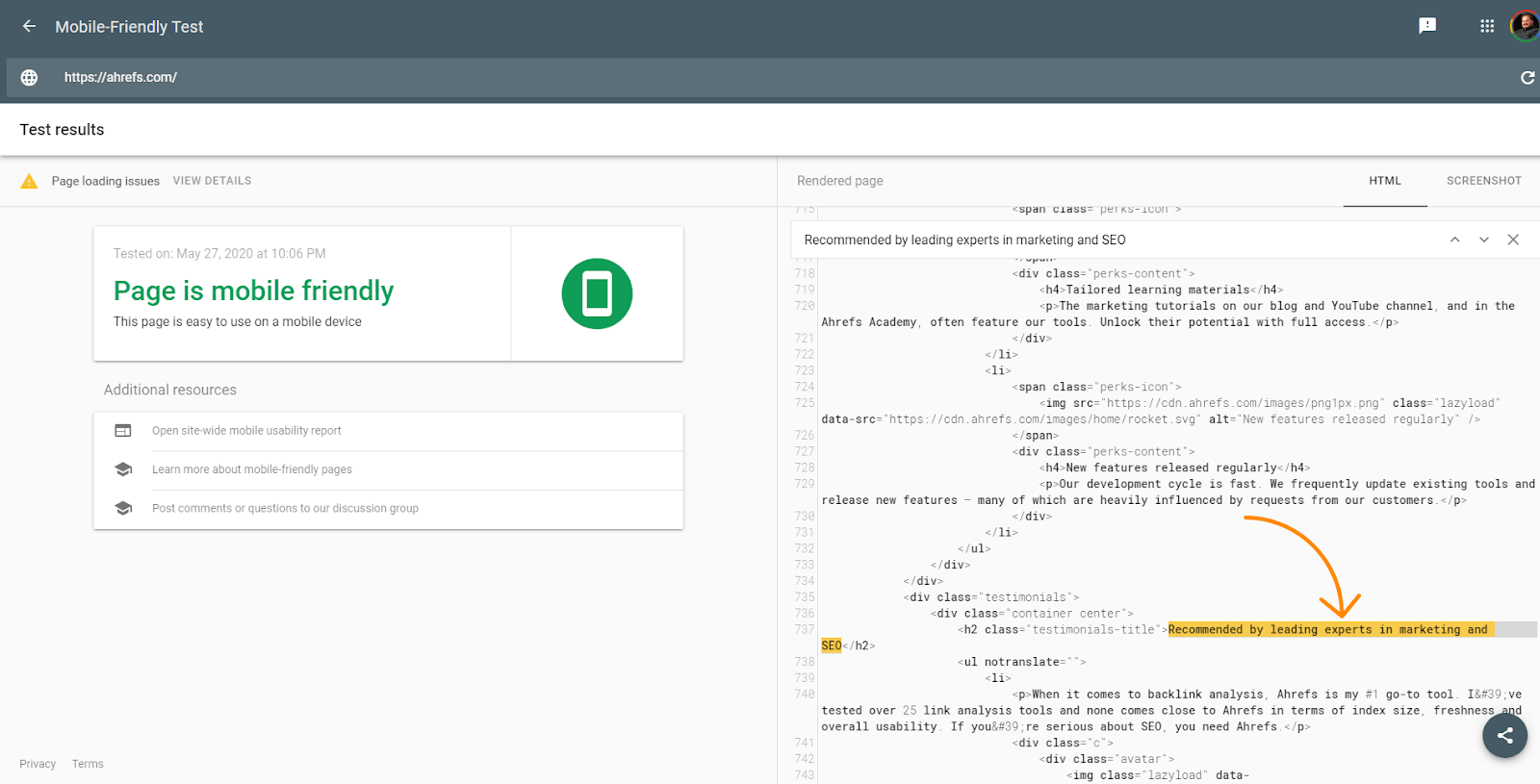

Google Testing Tools

Google’s testing tools, like the URL Inspection Tool inside Google Search Console, the Mobile-Friendly Test, and the Rich Results Test, are useful for debugging. Still, even these tools are slightly different from what Google will see. I already talked about the five-second timeout in these tools that the renderer doesn’t have, but these tools also differ in that they’re pulling resources in real time and not using the cached versions as the renderer would. The screenshots in these tools also show pages with the pixels painted, which Google doesn’t see in the renderer.

The tools are useful to see if content is DOM-loaded, though. The HTML shown in these tools is the rendered DOM. You can search for a snippet of text to see if it was loaded in by default.
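If you copy the HTML out of one of these tools, the same check can be scripted. The sketch below is a rough text extraction, not a full HTML parser, and the two page strings are made-up examples of an app-shell response and its rendered DOM.

```javascript
// Strip tags and collapse whitespace so a text snippet can be matched against markup.
function extractText(html) {
  return html
    .replace(/<script[\s\S]*?<\/script>/gi, ' ') // drop inline and external scripts
    .replace(/<[^>]+>/g, ' ')                    // drop remaining tags
    .replace(/\s+/g, ' ')
    .trim();
}

function containsSnippet(html, snippet) {
  return extractText(html).toLowerCase().includes(snippet.toLowerCase());
}

// Hypothetical app-shell page: the raw response has no content, the rendered DOM does.
const rawHtml = '<div id="root"></div><script src="/app.js"></script>';
const renderedHtml = '<div id="root"><h1>Blue   widgets</h1><p>In stock today.</p></div>';

console.log(containsSnippet(rawHtml, 'blue widgets'));      // false
console.log(containsSnippet(renderedHtml, 'blue widgets')); // true
```

A snippet that appears only in the rendered version was added by JavaScript, which tells you the content depends on rendering.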

The tools will also show you resources that may be blocked and console error messages which are useful for debugging.

Searching Text in Google

Another quick check you can do is simply search for a snippet of your content in Google. Search for “some phrase from your content” and see if the page is returned. If it is, then your content was likely seen. Note that content that is hidden by default may not be surfaced within your snippet on the SERPs.

Ahrefs

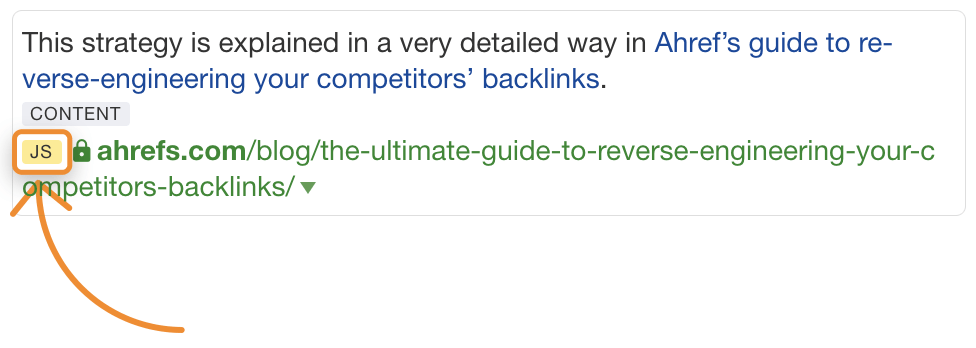

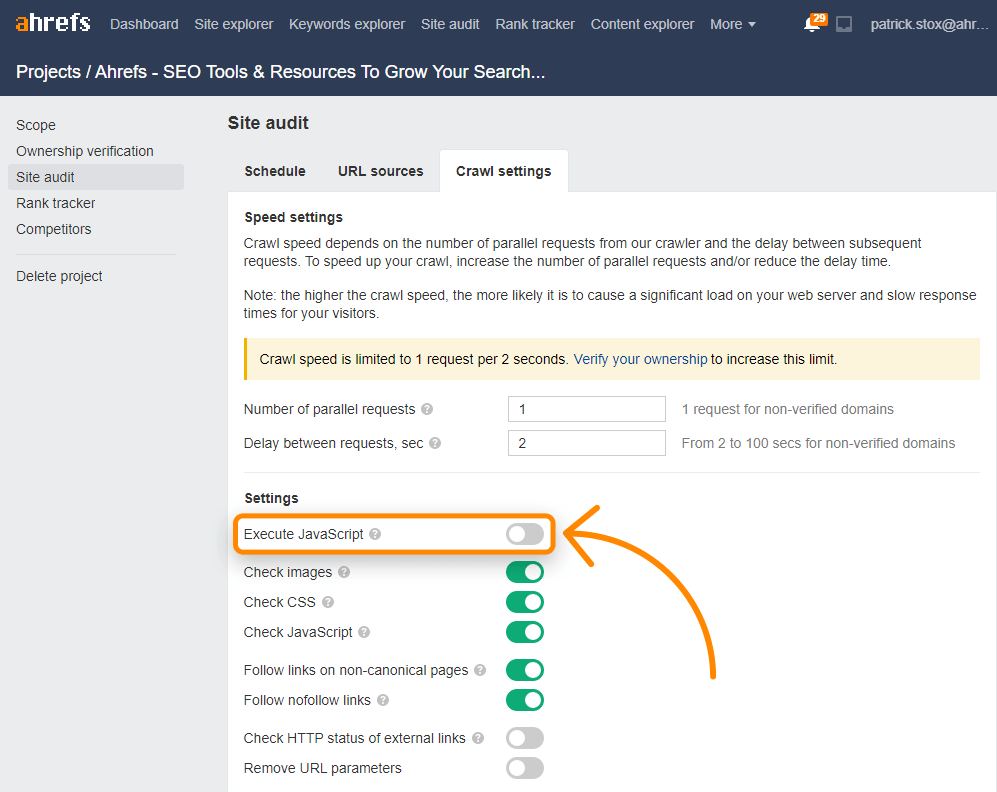

In addition to rendering pages for the link index, Ahrefs lets you enable JavaScript in Site Audit crawls to unlock more data in your audits.

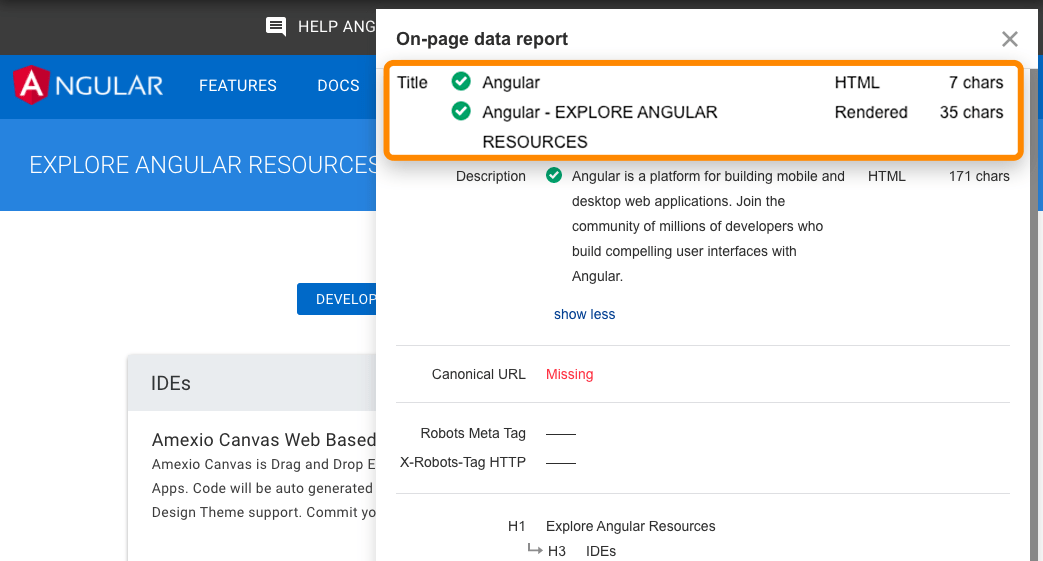

The Ahrefs Toolbar also supports JavaScript and allows you to compare HTML to rendered versions of tags.

There are lots of options when it comes to rendering JavaScript. Google has a solid chart that I’m just going to show. Any kind of server-side rendering (SSR), static rendering, or prerendering setup is going to be fine for search engines. The main one that causes problems is full client-side rendering, where all of the rendering happens in the browser.

While Google would probably be okay even with client-side rendering, it’s best to choose a different rendering option to support other search engines. Bing also has support for JavaScript rendering, but the scale is unknown. Yandex and Baidu have limited support from what I’ve seen, and many other search engines have little to no support for JavaScript.

There’s also the option of Dynamic Rendering, which is rendering for certain user-agents. This is basically a workaround but can be useful to render for certain bots like search engines or even social media bots. Social media bots don’t run JavaScript, so things like OG tags won’t be seen unless you render the content before serving it to them.
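The decision at the heart of dynamic rendering is a user-agent check. The sketch below shows only that check; the bot list is a short illustrative sample, and `chooseResponse` and its return values are hypothetical names. How the prerendered HTML is produced and served is left out.

```javascript
// Illustrative bot list; a production setup would maintain a longer, tested list.
const BOT_PATTERN = /googlebot|bingbot|yandex|baiduspider|twitterbot|facebookexternalhit|linkedinbot/i;

// Decide which version of a page to serve for a given User-Agent header.
function chooseResponse(userAgent) {
  return BOT_PATTERN.test(userAgent || '') ? 'prerendered-html' : 'client-side-app';
}

console.log(chooseResponse('Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)'));
// prerendered-html
console.log(chooseResponse('Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0 Safari/537.36'));
// client-side-app
```

The content served to bots should match what users get after rendering; the check only changes who does the rendering.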

If you were using the old AJAX crawling scheme, note that this has been deprecated and may no longer be supported.

A lot of the processes are similar to things SEOs are already used to seeing, but there might be slight differences.

On-page SEO

All the normal on-page SEO rules for content, title tags, meta descriptions, alt attributes, meta robot tags, etc. still apply. See On-Page SEO: An Actionable Guide.

A couple of issues I repeatedly see when working with JavaScript websites are that titles and descriptions may be reused and that alt attributes on images are rarely set.

Allow crawling

Don’t block access to resources. Google needs to be able to access and download resources so that they can render the pages properly. In your robots.txt, the easiest way to allow the needed resources to be crawled is to add:

    User-Agent: Googlebot
    Allow: .js
    Allow: .css

URLs

Change URLs when updating content. I already mentioned the History API, but you should know that JavaScript frameworks have a router that lets you map to clean URLs. You don’t want to use hashes (#) for routing. This is especially a problem for Vue and some of the earlier versions of Angular. For a URL like abc.com/#something, the part after the # is not sent to the server, so the server sees only abc.com/. To fix this for Vue, you can work with your developer to change the following:

Vue router:

Use ‘History’ Mode instead of the traditional ‘Hash’ Mode.

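    // Vue Router 3 syntax
    import VueRouter from 'vue-router'

    const router = new VueRouter({
      mode: 'history', // clean URLs instead of # fragments
      routes: [] // the array of route definitions
    })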
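To see why hash routes are a problem, you can parse both URL styles with the standard `URL` class, available in browsers and Node.js. The abc.com addresses are the placeholder domain used above.

```javascript
// The fragment (everything after #) stays in the browser; it is not part of the request path.
const hashUrl = new URL('https://abc.com/#something');
console.log(hashUrl.pathname); // "/"
console.log(hashUrl.hash);     // "#something"

// With history-mode routing, the route is part of the path the server receives.
const cleanUrl = new URL('https://abc.com/something');
console.log(cleanUrl.pathname); // "/something"
console.log(cleanUrl.hash);     // ""
```

With hash routing, every route shares the same path, so a server or crawler looking at the path alone cannot tell the pages apart.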

Duplicate content

With JavaScript, there may be several URLs for the same content, which leads to duplicate content issues. This may be caused by capitalization, IDs, parameters with IDs, etc. So, all of these may exist:

- domain.com/Abc
- domain.com/abc
- domain.com/123
- domain.com/?id=123

The solution is simple. Choose one version you want indexed and set canonical tags.
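As a minimal sketch of the mechanics, the helper below maps URL variants to one canonical tag. The normalization rules (lowercase the path, drop query parameters) are assumptions for illustration. Which variant is canonical is a site-specific decision, and ID-based variants like /123 would need a lookup that is not shown here.

```javascript
// Hypothetical normalization: lowercase the path and drop query parameters.
function canonicalTag(rawUrl) {
  const url = new URL(rawUrl);
  const canonical = `${url.origin}${url.pathname.toLowerCase()}`;
  return `<link rel="canonical" href="${canonical}">`;
}

console.log(canonicalTag('https://domain.com/Abc'));
console.log(canonicalTag('https://domain.com/abc?ref=nav'));
// both print: <link rel="canonical" href="https://domain.com/abc">
```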

SEO “plugin” type options

For JavaScript frameworks, these are usually referred to as modules. You’ll find versions for many of the popular frameworks like React, Vue, and Angular by searching for the framework + module name like “React Helmet.” Meta tags, Helmet, and Head are all popular modules with similar functionality allowing you to set many of the popular tags needed for SEO.

Error pages

Because JavaScript frameworks aren’t server-side, they can’t really throw a server error like a 404. You have a couple of different options for error pages:

- Use a JavaScript redirect to a page that does respond with a 404 status code

- Add a noindex tag to the page that’s failing along with some kind of error message like “404 Page Not Found”. This will be treated as a soft 404 since the actual status code returned will be a 200 OK.
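The second option can be sketched as follows. `markAsNotFound` is a hypothetical function a client-side router could call when no route matches; the DOM calls it uses (`createElement`, `setAttribute`, `appendChild`) are standard. The document object is passed in as `doc` only to keep the sketch testable outside a browser.

```javascript
// Hypothetical "no route matched" handler for a client-side router.
// In a browser you would call it as: markAsNotFound(document)
function markAsNotFound(doc) {
  // Tell search engines not to index this URL.
  const meta = doc.createElement('meta');
  meta.setAttribute('name', 'robots');
  meta.setAttribute('content', 'noindex');
  doc.head.appendChild(meta);
  // Make the error visible in the title as well as on the page.
  doc.title = '404 Page Not Found';
}
```

For the first option, the handler would instead set `window.location.href` to a URL that the server answers with a real 404 status code.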

Sitemap

JavaScript frameworks typically have routers that map to clean URLs. These routers usually have an additional module that can also create sitemaps. You can find them by searching for your system + router sitemap, such as “Vue router sitemap.” Many of the rendering solutions may also have sitemap options. Again, just find the system you use and Google the system + sitemap such as “Gatsby sitemap” and you’re sure to find a solution that already exists.
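If you are curious what those modules produce, the output is a plain XML file. Here is a minimal sketch that builds one from a list of route paths; `buildSitemap`, the example.com base URL, and the route list are all hypothetical.

```javascript
// Build a minimal XML sitemap from a list of route paths.
function buildSitemap(baseUrl, paths) {
  const escapeXml = (s) => s.replace(/&/g, '&amp;').replace(/</g, '&lt;').replace(/>/g, '&gt;');
  const entries = paths
    .map((path) => `  <url><loc>${escapeXml(new URL(path, baseUrl).href)}</loc></url>`)
    .join('\n');
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    entries,
    '</urlset>',
  ].join('\n');
}

console.log(buildSitemap('https://example.com', ['/', '/pricing', '/blog/javascript-seo']));
```

In practice, the existing module for your router or rendering solution is the better choice, since it reads the routes directly and stays in sync with them.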

Redirects

SEOs are used to 301/302 redirects, which are server-side. But JavaScript is typically run client-side. This is okay since Google processes the page and follows the redirect. The redirects still pass all signals like PageRank. You can usually find these redirects in the code by looking for “window.location.href”.
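That search can be scripted when you have many files to check. The sketch below is an assumption-laden helper, not a complete detector: it matches only hard-coded string assignments to `window.location.href` (and, as an extension, `window.location`), so redirects built at runtime will be missed.

```javascript
// Find hard-coded client-side redirect targets in a string of JavaScript source.
function findJsRedirects(source) {
  const pattern = /window\.location(?:\.href)?\s*=\s*['"]([^'"]+)['"]/g;
  return Array.from(source.matchAll(pattern), (m) => m[1]);
}

// Made-up source code for illustration.
const sample = `
  if (isOldUrl) { window.location.href = "/new-page"; }
  window.location = '/home';
  if (window.location.href == "/compare") { track(); }
`;
console.log(findJsRedirects(sample)); // [ '/new-page', '/home' ]
```

The comparison on the last line of the sample is ignored because it uses `==` rather than an assignment.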

Internationalization

There are usually a few module options for different frameworks that support features needed for internationalization, like hreflang. They’ve commonly been ported to the different systems and include i18n and intl. Many times, the same modules used for header tags, like Helmet, can also be used to add the needed tags.
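Whichever module you use, the end result is a set of link tags in the head. The sketch below builds them as strings; `hreflangTags` and the locale-to-URL map are hypothetical example data, and in a real app the tags would be set through your head-management module.

```javascript
// Build hreflang link tags for a page that exists in several locales.
function hreflangTags(alternates) {
  return Object.entries(alternates)
    .map(([lang, href]) => `<link rel="alternate" hreflang="${lang}" href="${href}">`)
    .join('\n');
}

console.log(hreflangTags({
  en: 'https://example.com/en/pricing',
  de: 'https://example.com/de/preise',
  'x-default': 'https://example.com/pricing',
}));
```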

Lazy loading

There are usually modules for handling lazy loading. If you haven’t noticed yet, there are modules to handle pretty much everything you need to do when working with JavaScript frameworks. Lazy and Suspense are the most popular modules for lazy loading. You’ll want to lazy load images, but be careful not to lazy load content. This can be done with JavaScript, but it might mean that it’s not picked up correctly by search engines.

Final thoughts

JavaScript is a tool to be used wisely, not something for SEOs to fear. Hopefully, this article has helped you understand how to work with it better, but don’t be afraid to reach out to your developers and work with them and ask them questions. They are going to be your greatest allies in helping to improve your JavaScript site for search engines.

Have questions? Let me know on Twitter.