SEOs are, more often than not, “cat people”. That’s no surprise, given that we spend a lot of time online, and given that The Internet Is Made Of Cats. That’s why the first website which I’m looking at in this series belongs to the Cats Protection charity – a UK organization dedicated to feline welfare.

I’m going to explore their website and see where they have issues, errors, and opportunities with their technical SEO. Hopefully, I’ll find some things which they can fix and improve, and thus improve their organic visibility. Maybe we’ll learn something along the way, too.

Not a cat person? Don’t worry! I’ll be choosing a different charity in each post. Let me know who you think I should audit next time in the comments!

Introducing the brand

Cats Protection (formerly the Cats Protection League, or CPL) describe themselves as the UK’s Largest Feline Welfare Charity. They have over 250 volunteer-run branches, 29 adoption centers, and 3 homing centers. That’s a lot of cats, a lot of people, and a lot of logistics.

Their website (at https://www.cats.org.uk/) reflects this complexity – it’s broad, deep, and covers everything from adoption to veterinary services, to advice and location listings.

Perhaps because of that complexity, it suffers from a number of issues, which hinder its performance. Let’s investigate, and try to understand what’s going on.

Understanding the opportunity

Before I dive into some of the technical challenges, we should spend some time assessing the current performance of the site. This will help us spot areas which are underperforming, and help us to identify areas which might have issues. We’ll also get a feeling for how much they could grow if they fix those issues.

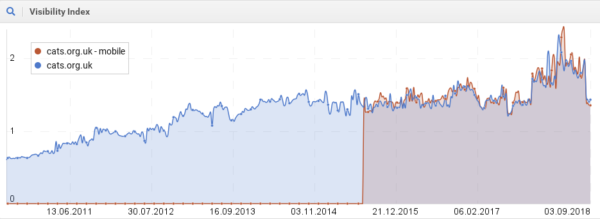

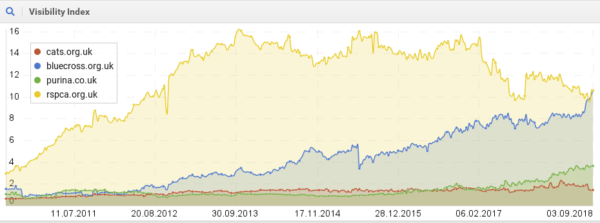

The ‘Visibility Index’ score (Sistrix) for cats.org.uk, desktop and mobile

I’m using Sistrix to see data on the ‘visibility index’ for cats.org.uk, for desktop and mobile devices. We can see that the site experienced gradual growth from 2010 until late 2013, but – other than a brief spike in late 2018 (which coincides with Google’s “Medic” update in August) – seems to have stagnated.

Where they’re winning

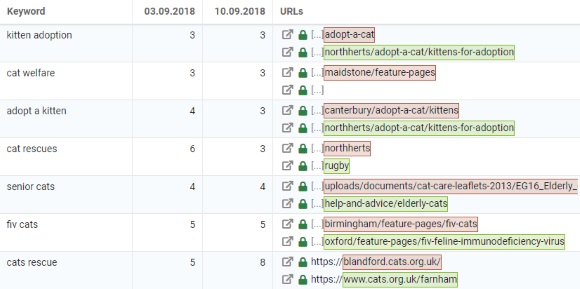

As we dig into some of the visibility data, you can see that Cats Protection rank very well in the UK for a variety of cat- and adoption-related phrases. Let’s see some examples of where they’re winning.

They’re doing a great job. They’re ranking highly for important, competitive keywords. In almost all cases, the ranking page is well aligned with the search, and full of useful information.

These kinds of keywords likely account for a large proportion of the visits they receive from search engines. It’s reasonable to assume that they also drive many of their conversions and successful outcomes.

But they’re losing to the competition

It’s not all good news, though. Once you explore beyond the keywords where they’re winning, you can see that they’re often beaten to the top positions on key terms (e.g., “adopt a cat”, where they rank #2).

In cases like this, they’re frequently outranked by one of three main competitors in the search results:

- Purina – a cat food manufacturer/retailer

- Blue Cross – a charity dedicated to helping sick, injured and homeless animals

- The RSPCA – a general animal welfare and rehoming charity

Now, I don’t want to suggest or imply that any one of these is a better or worse charity, service, or result than Cats Protection. Obviously, each of these (and many more) charities and websites can co-exist in the same space, and do good work. There’s plenty of opportunity for all of them to make the world a better place without directly competing with each other.

In fact, for many of the keywords they’re likely to be interested in, the results from each site are equally good. Each of these sites is doing great work in educating, supporting, and charitable activity.

Even Purina, which isn’t a charity, has a website full of high-quality, useful content around cat care.

However, among the major players in this space, Cats Protection has the lowest visibility. Their visibility is dwarfed by Blue Cross and the RSPCA, and the gap looks set to continue to widen. Even Purina’s content appears to be eating directly into Cats Protection’s market share.

The Visibility Index of Cats Protection vs organic search competitors over time

It’d be a shame if Cats Protection could be helping more cats, but fail to do so because their visibility is hindered by technical issues with their website.

To compete, and to grow, Cats Protection needs to identify opportunities to improve their SEO.

Looking at the long tail

Cats Protection probably doesn’t want to go head-to-head with the RSPCA (or just fight to take market share directly from other charities). That’s why I’ll need to look deeper or elsewhere for opportunities to improve performance and grow visibility.

If the site gets stronger technically, then it’s likely to perform better. Not just against the big players for competitive ‘head’ keywords, but also for long-tail keywords, where they can beat poorer quality resources from other sites.

As soon as you start looking at keywords where Cats Protection has a presence but low visibility, it’s obvious that there are many opportunities to improve performance. Unfortunately, there are some significant architectural and technical challenges which might be holding them back.

I’ve used Sitebulb to crawl the site, and I’ve found four critical issues. These areas contribute significantly to the low (and declining) visibility.

Critical issues

1. The site is fragmented

Every individual branch of the charity appears to get and maintain its own subdomain and own version of the website.

For example, the Glasgow branch maintains what appears to be a close copy of the main website, and the North London branch and the Birmingham branch both maintain their own divergent ‘local’ versions of the site. Much of the content on these sites is a direct copy of that which is available on the main website.

Fragmentation is harming their performance

This approach significantly limits visibility and potential, as it dilutes the value of each site. In particular:

- Search engines usually consider subdomains to be separate websites. It’s usually better to have one big site than to have lots of small websites. With lots of small sites, you risk value and visibility being split between each ‘sub site’.

- Content is repeated, duplicated, and diluted; pages that one team produces will often end up competing with pages created by other teams, rather than competing with other websites.

- The site doesn’t use canonical URL tags to indicate the ‘main version’ of a given page to search engines. This makes this page-vs-page competition even worse.

This combination of technical and editorial fragmentation means that they’re spread too thin. None of the individual sites, or their pages, are strong enough to compete against larger websites. That means they get fewer visits, less engagement, and fewer links.

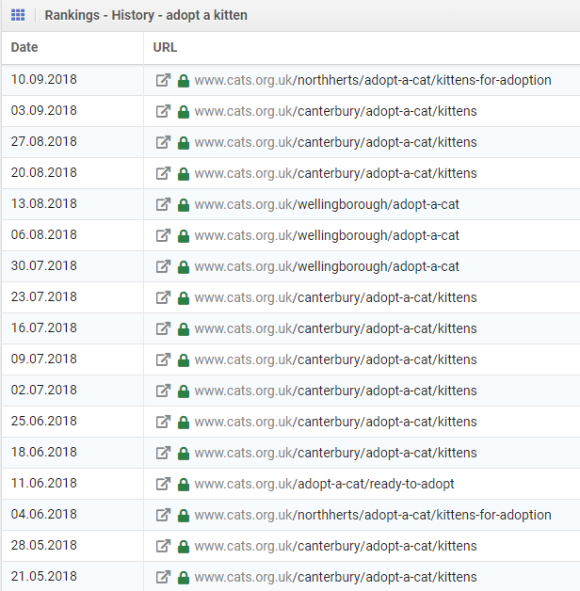

You can see some examples below where fragmentation is a huge issue for search engines. Google – in its confusion between the multiple sites and duplicate pages – continually switches the rankings and ranking pages for competitive terms between different versions. This weakens the performance and visibility of these pages, and the overall site(s).

Google continually switches the ranking page(s) for competitive keywords

Rankings for “adopt a kitten” continually fluctuate between competing pages

If Cats Protection consolidated their efforts and their content, they might have a chance. Otherwise, other brands will continue to outperform them with fewer, but stronger pages.

Managing local vs general content

While it makes sense to enable (and encourage) local branches to produce content which is specifically designed for local audiences, there are better ways to do this.

They could achieve the same level of autonomy and localization by just using subfolders for each branch. Those branches could create locale-specific content within those page trees. ‘Core’ content could remain a shared, centralized asset, without the need to duplicate pages in each section.

At the moment, their site and server configuration actually appears to be set up to allow for a subfolder-based approach. https://birmingham.cats.org.uk/, for example, appears to resolve to the same content as https://www.cats.org.uk/birmingham. It seems that they’ve just neglected to choose which version they want to make the canonical, and/or to redirect the other version.
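To illustrate the consolidation, here’s a minimal Python sketch that maps a branch subdomain URL onto a subfolder of the main site. It assumes that every branch subdomain corresponds to a same-named subfolder (as the Birmingham example suggests). The `subfolder_url` function is hypothetical, and isn’t part of the Cats Protection platform.

```python
from urllib.parse import urlsplit, urlunsplit

MAIN_HOST = "www.cats.org.uk"   # assumed canonical host
BASE_DOMAIN = "cats.org.uk"


def subfolder_url(url: str) -> str:
    """Return the assumed subfolder equivalent of a branch subdomain URL."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    path = parts.path or "/"

    if host in (MAIN_HOST, BASE_DOMAIN):
        branch = ""
    elif host.endswith("." + BASE_DOMAIN):
        branch = host[: -len("." + BASE_DOMAIN)]
        # Treat "www.branch" the same as "branch".
        if branch.startswith("www."):
            branch = branch[len("www."):]
    else:
        raise ValueError(f"not a cats.org.uk host: {host!r}")

    if branch:
        # "/" on the subdomain becomes "/branch"; deeper paths are appended.
        path = "/" + branch + ("" if path == "/" else path)
    # Always emit HTTPS on the main host, keeping any query string.
    return urlunsplit(("https", MAIN_HOST, path, parts.query, ""))
```

With this rule, `subfolder_url("http://birmingham.cats.org.uk/")` gives `https://www.cats.org.uk/birmingham`. The same mapping could drive either a 301 redirect or a canonical tag, depending on which they choose.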

They’ve also got some additional nasty issues where:

- The non-HTTPS version of many of the subdomains resolves without redirecting to the secure version. That’s going to be fragmenting their page value even further.

- Requests to any subdomain resolve to the main site; e.g., http://somerandomexample.cats.org.uk/ returns the homepage. Aside from further compounding their fragmentation issues, this opens them up to some nasty negative SEO attack vectors.

- There are frequent HTTPS/security problems when links to (or from) local branches include both the ‘www’ component and the location subdomain (e.g., https://www.vuildford.cats.org.uk/learn/e-learning-ufo).
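Whichever structure they settle on, the three problems above come down to one routing decision per request. The following is a rough Python sketch of that decision, not their actual server logic. `resolve_request` and the `KNOWN_BRANCHES` allowlist are hypothetical, and the branch labels are assumptions for illustration.

```python
from typing import Optional, Tuple

BASE_DOMAIN = "cats.org.uk"
MAIN_HOST = "www." + BASE_DOMAIN
KNOWN_BRANCHES = {"birmingham", "glasgow"}  # hypothetical allowlist


def resolve_request(scheme: str, host: str, path: str) -> Tuple[int, Optional[str]]:
    """Return (HTTP status, redirect target or None) for an incoming request."""
    host = host.lower()
    if host in (MAIN_HOST, BASE_DOMAIN):
        canonical_host = MAIN_HOST
    elif host.endswith("." + BASE_DOMAIN):
        label = host[: -len("." + BASE_DOMAIN)]
        # Collapse "www.branch" onto "branch".
        if label.startswith("www."):
            label = label[len("www."):]
        # Unknown subdomains are errors, not copies of the homepage.
        if label not in KNOWN_BRANCHES:
            return 404, None
        canonical_host = f"{label}.{BASE_DOMAIN}"
    else:
        return 404, None

    # Anything on HTTP, or on a non-canonical host, gets one permanent redirect.
    if scheme != "https" or host != canonical_host:
        return 301, f"https://{canonical_host}{path}"
    return 200, None
```

Under these assumptions, an HTTP request to a branch subdomain is redirected once to its HTTPS version, and a made-up subdomain returns a 404 instead of the homepage.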

Incidentally, if Cats Protection were running on WordPress (they’re on a proprietary CMS running on ASP.NET), they’d be a perfect fit for WordPress multisite. They’d be able to manage their ‘main’ site while allowing teams from each branch to produce their own content in neat, organized, local subfolders. They could also manage access, permissions, and how shared content should behave. And of course, the Yoast SEO plugin would take care of canonical tags, duplication, and consolidation.

Canonical URL tags to the rescue

While resolving all of these fragmentation issues feels like a big technical challenge, there might be an easy win for Cats Protection. If they add support for canonical tags, they could tell Google to consolidate the links and value on shared pages back to the original. That way each local site can contribute to the whole, while maintaining its own dedicated pages and information.

That’s not a perfect solution, but it’d go some way to arresting the brand’s declining visibility. Regardless of their approach to site structure, they should prioritize adding support and functionality for canonical URL tags. That way they can ensure that they aren’t leaking value between duplicate and multiple versions of pages. That would also allow them to pool resources on improving the performance of key, shared content.

The great news is, because they’re running Google Tag Manager, they could insert canonical URL tags without having to spend development resources. They could just define triggers and tags through GTM, and populate the canonical tags via JavaScript. This isn’t best practice, but it’s a lot better than nothing!
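The decision behind each canonical tag is simple, wherever it ends up being implemented. Here’s a minimal Python sketch of that logic (a GTM tag would need to make the same choice in JavaScript). The `SHARED_PREFIXES` paths and the `canonical_link_tag` function are hypothetical. The sketch also assumes absolute URLs, and that query strings can be dropped from the canonical.

```python
from html import escape
from urllib.parse import urlsplit

MAIN_ORIGIN = "https://www.cats.org.uk"
# Hypothetical: path prefixes of 'core' content copied onto branch sites.
SHARED_PREFIXES = ("/adopt-a-cat", "/help-and-advice")


def canonical_link_tag(page_url: str) -> str:
    """Build a canonical <link> tag for a page on the main or a branch site."""
    parts = urlsplit(page_url)
    path = parts.path or "/"
    if path.startswith(SHARED_PREFIXES):
        # Shared content consolidates to the original on the main site.
        target = MAIN_ORIGIN + path
    else:
        # Local content points at itself, on HTTPS, without the query string.
        target = f"https://{parts.hostname}{path}"
    return f'<link rel="canonical" href="{escape(target, quote=True)}">'
```

Branch-only pages keep a self-referencing canonical, so local content still ranks in its own right.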

2. Their best content is buried in PDFs

In many of the cases where Cats Protection is outranked by other charities or results, it’s because some of its best content is buried in PDF files like this one.

PDFs typically perform poorly in search results. That’s because they can be harder for search engines to digest, and provide a comparatively poor user experience when clicked from search results. That means that they’re less likely to be linked to, cited or shared. This seriously limits the site’s potential to rank for competitive keywords.

As an example, this excellent resource on cat behavior currently ranks in position #5, behind Purina (whose content is, in my personal opinion, not even nearly as good or polished), and behind several generic content pages.

The information in here is deeper, more specific, and better written than many of the resources which outrank it. But its performance is limited by its format.

If this were a page (and was as well-structured and well-presented as the PDF), it would undoubtedly create better engagement and interaction. That would drive the kinds of links and shares which could lead to significantly increased visibility. It would also benefit from being part of a networked hub of pages, linking to and being linked from related content.

Amazingly, this particular PDF is also only a summary. It references other, more specific PDFs throughout, which are of equally high quality. But it doesn’t link to them, so search engines struggle to discover or understand the connections between the documents.

This is just the tip of the iceberg. There are dozens of these types of PDFs, and hundreds of scenarios where they’re being outranked by lower quality content. This is costing Cats Protection significant visibility, visits, and adoptions.

Aside from being easier to style (outside of the constraints of rigid website templates and workflows), there’s very little reason to produce web content in this manner. This type of content should be produced in a ‘web first’ manner, and then adapted as necessary for other business purposes.

How bad is it?

To demonstrate the severity of the issues, I’ve looked at several examples of where PDFs rank for potentially important keywords.

In the following table, I’ve used Sistrix to filter down to only see keywords where the ranking URL is a PDF, and it contains the word “new” (i.e., “new cat”, “get a new kitten”). These are likely to represent the kinds of searches people make when deciding to adopt. You can see that Cats Protection frequently ranks relatively poorly and that these PDFs aren’t particularly effective as landing pages.

This tiny subset of keywords represents over 1,000 searches per month in the UK. That’s 1,000 scenarios where Cats Protection inadvertently provides a poor user experience and loses to other, often lower quality resources.

And value might not even be getting to those resources…

Many of the links to these resources appear to route through an internal URL shortener – likely a marketing tool for producing ‘pretty’ or shorter URLs than the full-length file locations.

E.g., https://www.cats.org.uk/-behaviour-topcatpart1 redirects to https://www.cats.org.uk/uploads/documents/Behaviour_-_Top_cat_part_1.pdf, with a 302 redirect code.

This is common practice on many sites, and usually not a problem – except, in this case, resources redirect via a 302 redirect. They should change this to a 301; otherwise, there’s a chance that any equity which might have flowed through the link to the PDF gets ‘stuck’ at the redirect.
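Checking for this kind of problem is easy to script. Below is a small Python sketch which fetches a single hop without following the redirect, and labels the status code. It uses only the standard library. `fetch_hop` and `classify_redirect` are illustrative names, and the labels reflect the advice in this post, not any official classification.

```python
import urllib.error
import urllib.request


class _NoRedirect(urllib.request.HTTPRedirectHandler):
    """Stop urllib from silently following redirects."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None  # the 3xx response then surfaces as an HTTPError


def fetch_hop(url: str):
    """Return (status, Location header) for one request, without following it."""
    opener = urllib.request.build_opener(_NoRedirect)
    try:
        with opener.open(url, timeout=10) as response:
            return response.status, response.headers.get("Location")
    except urllib.error.HTTPError as err:
        return err.code, err.headers.get("Location")


def classify_redirect(status: int) -> str:
    """Label a status code as a permanent redirect, a temporary one, or neither."""
    if status in (301, 308):
        return "permanent"
    if status in (302, 303, 307):
        return "temporary"
    return "not-a-redirect"
```

Running `fetch_hop` against each short URL, and flagging anything where `classify_redirect` returns `"temporary"`, would produce a list of redirects to review.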

It’s not too late!

The good news is, it’s not too late to convert these into pages and to alter whichever internal workflows and processes currently exist around these resources. That will almost certainly improve the rankings, visibility, and traffic for these kinds of keywords.

Except, upon investigation, you can see from the URL path that it looks like all of these assets were produced in 2013. My guess is that these PDFs were commissioned as a batch, and haven’t been updated or extended since. That goes some way to explaining the format, and why they’re so isolated. Their creation was a one-off project, rather than part of the day-to-day activities of the site and marketing teams.

There’s more opportunity here

Because much of the key content is tucked away in PDF files, the performance of many of the site’s ‘hub’ pages is also limited. Sections like this one, which should be the heart of a rich information library, are simply sets of links pointing out to aging PDF files. That limits the likelihood that people will engage, link, share or use these pages.

This is a shame, because Cats Protection could choose to compete strategically on the quality of these assets. They could go further: produce more, make them deeper and better, and refine their website templating system to allow them to present them richly and beautifully. This could go a long way toward helping them reclaim lost ground from Purina and other competitors.

At the very least, they should upgrade the existing PDFs into rich, high-quality pages.

Once they’ve done that, they should update all of the links which currently point to the PDF assets, to point at the new URLs. Lastly, they should set a canonical URL on the PDF files via an HTTP header (you can’t insert canonical URL meta/link tags directly into PDFs) pointing at the new page URL.
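The HTTP header in question is a `Link` header with `rel="canonical"`. As a hedged sketch, the server could look up each PDF in a mapping and add the header where a page equivalent exists. The `PDF_TO_PAGE` mapping, the new page URL, and `headers_for` are all hypothetical placeholders.

```python
from typing import List, Tuple

# Hypothetical mapping from PDF paths to the pages which replace them.
PDF_TO_PAGE = {
    "/uploads/documents/Behaviour_-_Top_cat_part_1.pdf":
        "https://www.cats.org.uk/example-behaviour-guide",  # placeholder URL
}


def headers_for(pdf_path: str) -> List[Tuple[str, str]]:
    """Return the extra response headers to send with a PDF, if any."""
    page_url = PDF_TO_PAGE.get(pdf_path)
    if page_url is None:
        return []  # no page equivalent yet, so no canonical header
    return [("Link", f'<{page_url}>; rel="canonical"')]
```

The PDF stays available for anybody who wants to download or print it, while search engines are pointed at the page version.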

Not only would that directly impact the performance and visibility of that content, but it’ll also help them to build relevance and authority in the ‘hub’ pages, like their veterinary guides section.

3. Their editorial and ‘marketing’ content is on the wrong website

While this is primarily a technical audit, bear with me as I talk briefly about content and tone of voice. I believe that technical issues and constraints have played a significant role in defining the whole brand’s tone of voice online, and not for the better.

Because, surprisingly for such an emotive ‘product’, much of the content on the website might be considered to be ‘dry’; perhaps even a bit ‘corporate’.

In order to attract and engage visitors (and to encourage them to cite, link and share content – which is critical for SEO performance), content needs to have a personality. It needs to stand for something and to have an opinion. Pages have to create an emotional response. Of course, Cats Protection do all of this, but they do it on the wrong website.

Much of their emotive content lives on a dedicated subdomain (the ‘Meow blog’), where it rarely sees the light of day.

It’s another fragmentation issue

The Meow blog runs on an entirely separate CMS (Blogger/Blogspot) from the main site. This site is also riddled with technical issues and flaws – not to mention the severely limited stylistic, layout and presentation options. This site gets little traffic or attention, and very few links/likes/shares. Much of its content competes with – and is beaten by – drier content on the main site.

Heartwarming stories about re-homing kittens abound on the Meow blog

But the blog is full of pictures of cats, rescue and recovery stories from volunteers and adopters, and warm ‘from the front line’ editorial content. This is the kind of content you’d want to read before deciding whether or not to engage with Cats Protection, and it should be part of the core user journey. Today, most users miss this entirely.

We can see from the following table, which shows the site’s highest rankings, that their content gets very little traction. The site only ranks in the top 100 results of Google for 258 keywords, and only ranks in the top ten for 5 of those. Nobody who is searching for exactly the kinds of things which Cats Protection should have an opinion on – and be found for – is arriving here.

Furthermore, this separation means that where personality is ‘injected’ into core site pages, the stark contrast can make it feel artificial and contrived. It often reads like ‘marketing content’ when compared with the flat tone of the content around it.

Why is the blog on a different system?

Typically, this kind of separation occurs when a CMS hasn’t properly anticipated (or otherwise can’t support) ‘editorial’ content; blog posts and articles which are authored, categorized, media-rich, and so forth. These are different types of requirements and functions from a website which just supports ‘pages’, or which has been designed and built to serve a very specific set of requirements.

As a result, a marketing team will typically create and maintain a separate ‘blog’, often separated from the main site. This can cripple the performance of those companies’ marketing and reach, as the blog never inherits the authority of the main site (and therefore, has little visibility), and fails to deliver against marketing and commercial goals. This often leads to abandonment, and over-investment in marketing in rented channels, like Facebook. Speculatively, it looks like this is exactly what’s happened here.

Conversely, either through poor training and management or outright rebellion, some teams prefer to publish ‘blog-like’ content as static articles within the constraints and confines of their local branch news sections. These attempts, lacking the kind of architecture, framework, and strategy which a successful blog requires, also fail to perform. Here’s an example from the Brighton branch.

From a technical perspective, the main site should be able to house this content on the same domain, as part of the same editorial and structural processes which manage their ‘main’ content. If the separation of the blog from the main site is due to technical constraints inherent in the main site, this is a devastating failure of planning, scoping and/or budgeting. It’s limited their ability to attract and engage audiences, to integrate and showcase their personality into their main site content, and to convert more of their audience to donation, adoption or other outcomes.

While fixing some of the technical issues we’ve spotted should result in immediate improvements to visibility, the long-term damage of this separation of content types will require years of effort to undo.

This is something which WordPress gets right at a deep architectural level. The core distinction between ‘pages’ and ‘posts’ (as well as support for custom post types, custom/shared taxonomies, and post capability management) is hugely powerful and flexible. Other platforms could learn a lot from studying how WordPress solves exactly this kind of problem.

Regardless of their choice of platform(s), Cats Protection need to have a solid strategy for how they seamlessly house and integrate blog content with ‘core’ site content in a way which aligns with technical and editorial best practice.

4. Serious technical SEO issues abound

I understand that as a charity, Cats Protection has limited budget and resource to invest in their website. It’s unreasonable to expect their custom-built site to be completely perfect when it comes to technical SEO, or to adhere 100% to cutting edge standards.

However, the gap between ‘current’ and ‘best’ performance is wide – enough that I’d be remiss in my review not to point out some of the more severe issues which I’ve identified.

I’ve spotted dozens of issues throughout the site which are likely impacting performance, ranging from severe problems with how the site behaves, to minor challenges with individual templates or pages.

Individually, many of these problems aren’t serious enough to cause alarm. Collectively, however, they represent one of the biggest factors limiting the site’s visibility.

I’ve highlighted the issues which I think represent the biggest opportunities – those which, if fixed, should help to unlock increased performance worth many times the resource invested in resolving them.

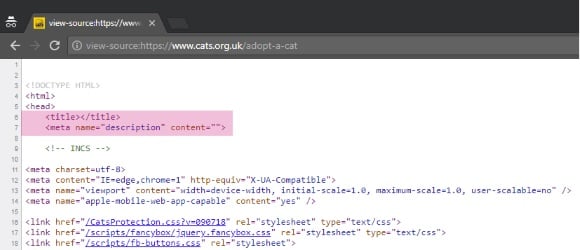

Many pages and templates are missing title, description or H1 tags

Page titles and meta descriptions are hugely important for SEO. They’re an opportunity to describe the focus of the page, and to optimize your ‘advert’ (your organic listing) in search engines. H1 tags act as the primary heading for a page, and help users and search engines to know where they are, and what a page is about.

Not having these tags is a serious omission, and can severely impact the performance of a page.

Many important pages on the site (like the “Adopt a cat” page) are missing titles, descriptions and headings, which is likely impacting the visibility of and traffic to many key pages.

As well as harming performance, omitting titles often forces Google to try and make its own decisions – often with terrible effect. The following screenshot is from a search result for the ‘Kittens’ page – the site’s main page for donation signups. An empty title tag and a missing <h1> heading tag have caused Google to think that the title should be ‘Cookie settings’.

Google incorrectly assumes the page title should be ‘Cookie settings’

There are hundreds of pages where this, and similar issues, are occurring.

A good CMS should provide controls for editors and site admins to craft titles, descriptions, and headings. But the site should also automatically generate a sensible default title, description and primary heading for any template or page where there’s nothing manual specified (based on the name and content of the page). Needless to say, this is one of the core features in Yoast SEO!
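A fallback like that doesn’t need to be sophisticated. Here’s a minimal Python sketch, assuming the CMS knows each page’s name and body text. The `default_meta` function, the title pattern, and the 155-character description length are my own assumptions (the length is a common rule of thumb, not a hard limit).

```python
import re

SITE_NAME = "Cats Protection"
MAX_DESCRIPTION = 155  # rule-of-thumb length, not a hard limit


def default_meta(page_name, body_text, title="", description="", heading=""):
    """Use the editor's values where set; otherwise derive sensible defaults."""
    name = page_name.strip()
    # Collapse whitespace so the description reads as one clean sentence run.
    text = re.sub(r"\s+", " ", body_text).strip()
    if len(text) > MAX_DESCRIPTION:
        # Cut at the last whole word which fits, then mark the truncation.
        text = text[:MAX_DESCRIPTION].rsplit(" ", 1)[0] + "…"
    return {
        "title": title.strip() or (f"{name} | {SITE_NAME}" if name else SITE_NAME),
        "description": description.strip() or text,
        "h1": heading.strip() or name,
    }
```

Manual values always win, so editors keep full control. The defaults only exist to make sure that no template ever ships with an empty title or heading.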

Errors and content retirement processes are poorly managed

Requests for invalid URLs (such as https://www.cats.org.uk/this-is-an-example) frequently return a 200 HTTP header status. That tells Google that everything is okay and that the page is explicitly not an error. Every time somebody moves or deletes a page, they create more ‘error’ pages.

As a result, Google is frequently confused about what constitutes an actual error, and many error pages are incorrectly indexed.

Is this an error page? The 200 HTTP header says not.

This further dilutes the performance of key pages, and the site overall.

Then again, at least this page provides links and routes back into the main content. Other types of errors (such as those generated by requesting invalid URL structures like this one, which ends in .aspx) return a ‘raw’ server error, which although it correctly returns a 404 HTTP header status, is essentially a dead end to search engines. Needless to say, that negatively impacts performance.

The poor error management here also makes day-to-day site management much harder. Tools like Google Search Console, which report on erroneous URLs and offer suggestions, are rendered largely useless due to the ambiguity around what constitutes a ‘real’ 404 error. That makes undergoing a process of identifying, resolving and/or redirecting these kinds of URLs pretty much impossible. The site is constantly leaking, and accruing technical debt.
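One way to test for this behavior is to request a URL which can’t possibly exist, and to inspect what comes back. The Python sketch below generates such a URL and classifies a response. `probe_url` and `classify_missing_page` are hypothetical helpers, and the check for links in the body is a deliberately crude heuristic.

```python
import uuid


def probe_url(base_url: str) -> str:
    """Build a URL with a random path which should never exist on the site."""
    return f"{base_url.rstrip('/')}/{uuid.uuid4().hex}"


def classify_missing_page(status: int, body: str) -> str:
    """Classify the response to a request for a page known not to exist."""
    if 200 <= status < 300:
        return "soft-404"   # error content served with a success status
    if status in (404, 410):
        # A correct status, but is there any route back into the site?
        return "ok" if "<a " in body.lower() else "dead-end"
    return "other"
```

A “soft-404” result matches the first problem described above (an error page with a 200 status), and “dead-end” matches the raw server error.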

A ‘live’ adoption listing page

And it’s not just deleted or erroneous URLs; the site handles dynamic and retired content poorly. When a cat has been adopted, the page which used to have information about it now returns a 200 HTTP header status and a practically empty page.

An invalid ‘cid’ parameter in the URL returns an empty page, but a 200 HTTP header status

Every time Cats Protection list or unlist a cat for adoption, they create new issues and grow their technical debt.

Their careers subdomain has the opposite problem; pages are seemingly never removed, and just build up over time. This wastes resources on crawling, indexing, and equity. Expired jobs should be elegantly retired (and the URLs redirected) after they expire. Properly retiring old jobs (or, at least their markup) is a requirement if Cats Protection want to take advantage of the enhanced search engine listings which result from implementing schema markup for jobs.
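As a rough illustration, here’s a Python sketch which generates schema.org `JobPosting` markup for a live vacancy, and emits nothing once the job has expired. The property names come from schema.org, but the function, its parameters, and the example in the usage note are hypothetical. A production version would need more properties (such as `jobLocation`).

```python
import json
from datetime import date
from typing import Optional


def job_posting_jsonld(title: str, description: str, posted: date,
                       valid_through: date, today: date) -> Optional[str]:
    """Return JobPosting JSON-LD for a live job, or None if it has expired."""
    if today > valid_through:
        return None  # expired: the page should carry no job markup
    data = {
        "@context": "https://schema.org",
        "@type": "JobPosting",
        "title": title,
        "description": description,
        "datePosted": posted.isoformat(),
        "validThrough": valid_through.isoformat(),
        "hiringOrganization": {"@type": "Organization", "name": "Cats Protection"},
    }
    return json.dumps(data, indent=2)
```

A template would call this with the current date on each render, for example `job_posting_jsonld("Cat Care Assistant", "…", date(2019, 5, 1), date(2019, 6, 30), date.today())`, and only output the script block when the result isn’t `None`.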

Issues like this crop up throughout their whole ecosystem. Value is constantly ‘leaking’ out of their site as a result. To prevent this, they need to ensure that invalid requests return a consistent, ‘friendly’ 404 page, with an appropriate HTTP status. They also need to implement processes which make sure that content expiry, movement, and deletion processes redirect or return appropriate HTTP status (something which the Redirect Manager in Yoast SEO Premium handles automatically).
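To make that concrete, here’s a small WSGI sketch in Python which returns a friendly error page with the right status for each case. Everything in it is illustrative: the paths are made up, and using a 410 for adopted cats is just one reasonable option (a redirect to a relevant listing page would be another).

```python
FRIENDLY_404 = (
    "<h1>Sorry, we can't find that page</h1>"
    '<p><a href="/">Back to the homepage</a></p>'
)
LIVE = {"/": "<h1>Home</h1>"}        # hypothetical live pages
MOVED = {"/old-advice": "/advice"}   # hypothetical old -> new paths
RETIRED = {"/adopt/cat-123"}         # hypothetical adopted-cat listing


def app(environ, start_response):
    """Serve live pages, redirect moved ones, and return real error statuses."""
    path = environ.get("PATH_INFO", "/")
    if path in MOVED:
        start_response("301 Moved Permanently", [("Location", MOVED[path])])
        return [b""]
    if path in LIVE:
        status, body = "200 OK", LIVE[path]
    elif path in RETIRED:
        status, body = "410 Gone", FRIENDLY_404
    else:
        status, body = "404 Not Found", FRIENDLY_404
    payload = body.encode("utf-8")
    start_response(status, [
        ("Content-Type", "text/html; charset=utf-8"),
        ("Content-Length", str(len(payload))),
    ])
    return [payload]
```

The important part is that the friendly page and the error status travel together. Visitors get links back into the site, and search engines get an unambiguous signal.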

Other areas

In my opinion, these issues represent some of the biggest (technical SEO) barriers to growth which the brand faces. These are just the tip of the iceberg, but until they address and resolve them, fixing a million tiny issues page-by-page isn’t going to move the needle. I could definitely dig deeper, into areas like site speed (why haven’t they adopted HTTP/2?), their .NET implementation (why are they still using ViewState blocks?) and the overall UX – but this is already a long post.

There is, however, just one last area I’d like to consider.

A light at the end of the tunnel?

In researching and evaluating the platform, I couldn’t help but notice a link in the footer to the agency who designed and built the website – MCN NET. Excitingly, their homepage contains the following block of content:

After a very tense and nerve-racking tender process we were thrilled to hear that we had once again being nominated as the preferred supplier for the redevelopment of the Cats Protection website. This complex and feature rich website is scheduled to make its debut in 2019, so be sure to keep an eye out for it. It’ll be Purrrfect.

Hopefully, that means that many of the problems I’ve identified have already been solved. Hopefully.

Except, that’s perhaps a little ambitious. I don’t know the folks at MCN, and I don’t know what the brief, budget or scope they received was like when they built the current site. As I touched on earlier, charities don’t have money to burn on building perfect websites, and maybe what they got was the right balance of cost and quality for them, at the time. I’m certainly not suggesting that it’s solely MCN’s fault that the Cats Protection website suffers from these issues.

However, my many years of experience in and around web development have given me a well-earned nervousness around .NET and Microsoft technologies, and a deep distrust of custom-built and proprietary content management systems.

That’s because, in my opinion, all of the issues I’ve pointed out in this article are basic. Arguably, they’re not even really SEO things – they’re just a “build a decent website which works reasonably well” level of standards. I recognize that, in part, that’s because the open source community – and WordPress, and Yoast, in particular – has made these the standards. And proprietary solutions and custom CMS platforms often struggle to keep up with the thousands of improvements which the open source community contributes every month. If the current website is reaching its end of life, it’s not surprising that it’s creaking at the seams.

With a new website coming, I hope that all of this feedback can be taken on board, and the problems resolved. I understand the commercial realities which mean that ‘best’ isn’t achievable, but they could achieve a lot just by going from ‘bad’ to ‘good’.

With that in mind, if I were working for or on behalf of Cats Protection, I’d want to be very clear about the scope of the new website. I’d want detailed planning and documentation around its functionality, and the baseline level of technical quality required. Of course, that will have cost and resource implications, but the new site will have to work a lot harder than the current one. Cats Protection are competing in an aggressive, crowded market, full of strong competitors. The stability of their foundations will make the difference between winning and losing.

I’d also want to have a very clear plan in place for the migration strategy from the current website to a new website. Migrations of this size and complexity, when handled poorly, have been known to kill businesses.

Every existing page, URL and asset will need to be redirected to a new home. Given the types of fragmentation issues, errors and orphaned assets we’ve seen, this is a huge job. Even mapping out and creating that plan – never mind executing it – feels like a mammoth task.
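A redirect map of that size needs automated checks. As a minimal sketch (with hypothetical names and data), the following Python function takes a list of known old URLs and a proposed redirect map, and reports two common problems: old URLs with no new home, and redirects which point at another redirect.

```python
from typing import Dict, Iterable, List, Tuple


def audit_redirect_map(old_urls: Iterable[str],
                       redirects: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Return (unmapped old URLs, sources whose target is itself redirected)."""
    # Old URLs which would 404 after launch because nothing maps them.
    unmapped = sorted(url for url in old_urls if url not in redirects)
    # Chains (and self-loops): the target is also a redirect source.
    chained = sorted(src for src, dst in redirects.items() if dst in redirects)
    return unmapped, chained
```

For example, with old URLs `/a`, `/b` and `/c`, and a map of `{"/a": "/new-a", "/b": "/a"}`, this reports `/c` as unmapped and `/b` as a chain (it should point straight at `/new-a`).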

Hopefully, this is all planned out, and in good hands. I can’t wait to see what the new site looks like, and how it boosts their visibility.

Summary

Cats Protection is a strong brand, doing good work, crippled by the condition of its aging and fragmented website. Other charities and brands are eating into its market share and visibility with worse content and marketing. They’re able to do this, in part, because they have stronger technical platforms.

Some of the technical decisions Cats Protection has made around its content strategy have caused long-term harm. The fragmentation of local branches and the separation of the blog have seriously limited their performance.