On Friday Google’s Gary Illyes announced Penguin 4.0 was now live.

Key points highlighted in their post are:

- Penguin is a part of their core ranking algorithm

- Penguin is now real-time, rather than something which periodically refreshes

- Penguin has shifted from being a sitewide negative ranking factor to a more granular factor

Things not mentioned in the post

- if it has been tested extensively over the past month

- if the algorithm is just now rolling out or if it is already done rolling out

- if the launch of a new version of Penguin rolled into the core ranking algorithm means old sites hit by the older versions of Penguin have recovered or will recover anytime soon

Since the update was announced, the search results have become more stable.

No signs of major SERP movement yesterday – the two days since Penguin started rolling out have been quieter than most of September.— Dr. Pete Meyers (@dr_pete) September 24, 2016

They may still be fine-tuning the filters a bit…

Fyi they’re still split testing at least 3 different sets of results. I assume they’re trying to determine how tight to set the filters.— SEOwner (@tehseowner) September 24, 2016

…but what exists now is likely to be what sticks for an extended period of time.

Penguin Algorithm Update History

- Penguin 1: April 24, 2012

- Penguin 2: May 26, 2012

- Penguin 3: October 5, 2012

- Penguin 4: May 22, 2013 (AKA: Penguin 2.0)

- Penguin 5: October 4, 2013 (AKA Penguin 2.1)

- Penguin 6: rolling update which began on October 17, 2014 (AKA Penguin 3.0)

- Penguin 7: September 23, 2016 (AKA Penguin 4.0)

Now that Penguin is baked into Google’s core ranking algorithms, no more Penguin updates will be announced. Panda updates stopped being announced last year. Instead we now get unnamed “quality” updates.

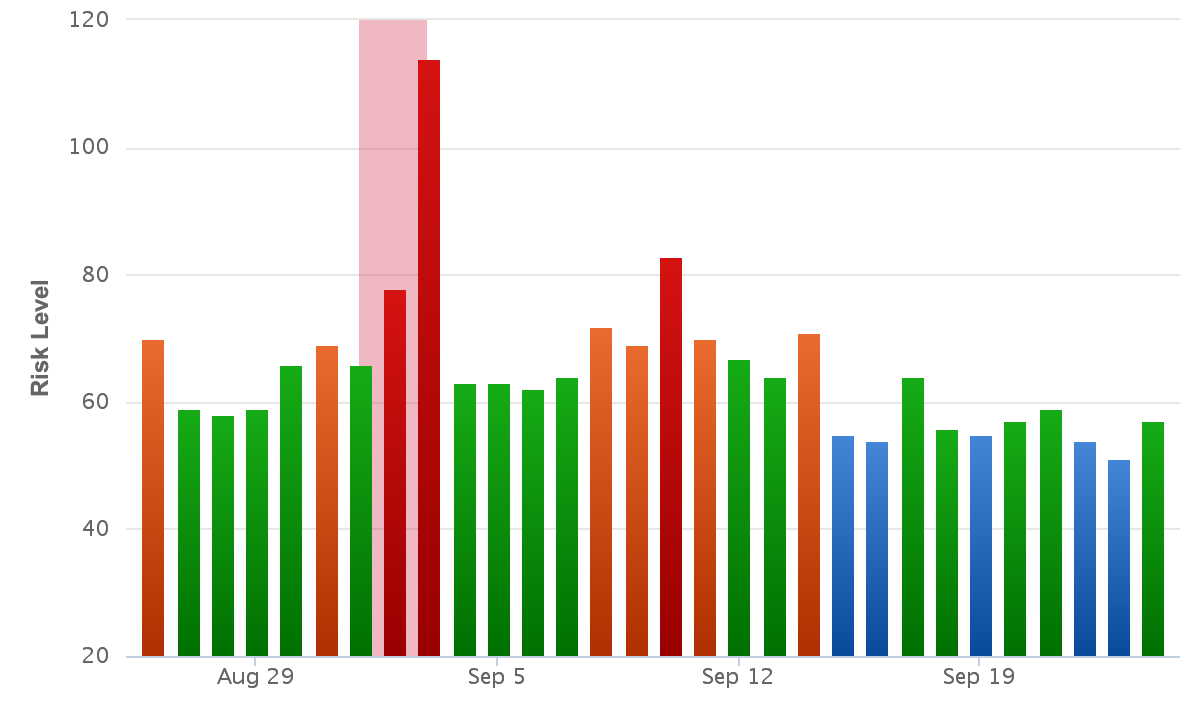

Volatility Over the Long Holiday Weekend

Earlier in the month many SEOs saw significant volatility in the search results, beginning ahead of Labor Day weekend with a local search update. The algorithm update observations were dismissed as normal fluctuations in spite of the search results being more volatile than they have been in over 4 years.

There are many reasons for search engineers to want to roll out algorithm updates (or at least test new algorithms) before a long holiday weekend:

- no media coverage: few journalists on the job & a lack of expectation that the PR team will answer any questions. no official word beyond rumors from self-promotional marketers = no story

- many SEOs outside of work: few are watching as the algorithms tip their cards.

- declining search volumes: long holiday weekends generally have less search volume associated with them. Thus anyone who is aggressively investing in SEO may wonder if their site was hit, even if it wasn’t.

The communications conflicts this causes between in-house SEOs and their bosses, as well as between SEO companies and their clients, both make the job of the SEO more miserable and make the client more likely to pull back on investment, while ensuring the SEO has family issues back home as work ruins their vacation.

- fresh users: as people travel their search usage changes, thus they have fresh sets of eyes & are doing somewhat different types of searches. This in turn makes their search usage data more dynamic and useful as a feedback mechanism on any changes made to the underlying search relevancy algorithm or search result interface.

Algo Flux Testing Tools

Just about any of the algorithm volatility tools showed far more significant shifts earlier this month than over the past few days.

Take your pick: Mozcast, RankRanger, SERPmetrics, Algaroo, Ayima Pulse, AWR, Accuranker, SERP Watch & the results came out something like this graph from Rank Ranger:

One issue with looking at any of the indexes is that rank shifts tend to be far more dramatic as you move away from the top 3 or 4 search results, so the algorithm volatility scores are much higher than the actual shifts in search traffic. The least volatile rankings are also the ones with the most usage data & ranking signals associated with them, so the top results for those terms tend to be quite stable outside of verticals like news.

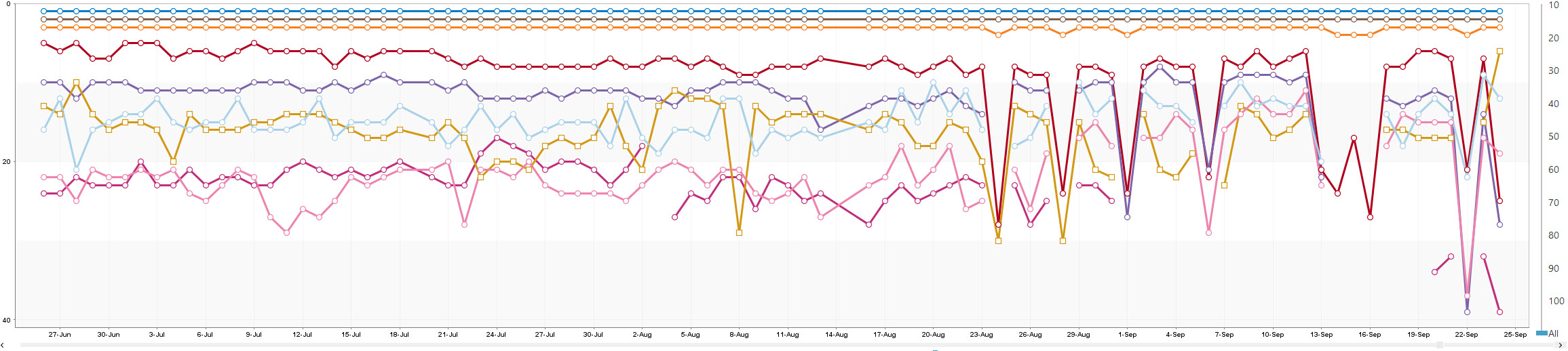

You can use AWR’s flux tracker to see how volatility is higher across the top 20 or top 50 results than it is across the top 10 results.

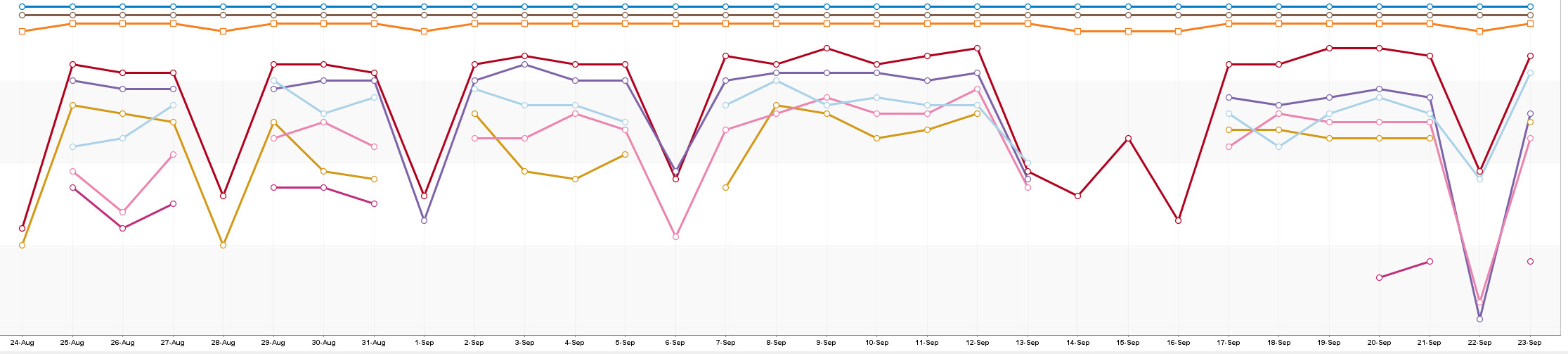
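To make the depth effect concrete, here is a minimal sketch of a flux score measured at different depths. It is not how AWR, Mozcast, or any other tool computes its index; the `rank_flux` function, the URLs, and the two sample rankings are all made up for illustration. It averages the absolute position change of each URL that ranked within the chosen depth on the first day.

```python
def rank_flux(yesterday, today, depth):
    """Mean absolute position change for URLs in yesterday's top `depth`.

    Hypothetical metric for illustration only; real volatility tools
    use their own (unpublished) formulas.
    """
    today_pos = {url: pos for pos, url in enumerate(today, start=1)}
    # Assumption: a URL that vanished is treated as falling just past the tracked list.
    dropped = len(today) + 1
    top = yesterday[:depth]
    if not top:
        return 0.0
    shifts = [
        abs(today_pos.get(url, dropped) - pos)
        for pos, url in enumerate(top, start=1)
    ]
    return sum(shifts) / len(shifts)


# Made-up rankings for one keyword: the top 3 hold steady, the rest shuffle.
yesterday = ["a", "b", "c", "d", "e", "f", "g", "h", "i", "j"]
today = ["a", "b", "c", "e", "d", "h", "f", "j", "g", "i"]

for depth in (3, 5, 10):
    print(depth, rank_flux(yesterday, today, depth))
```

With this sample data the score is zero across the top 3 and climbs as the depth widens, even though the positions that capture most of the clicks did not move at all.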

Example Ranking Shifts

I shut down our membership site in April & spend most of my time reading books & news to figure out what’s next after search, but a couple legacy clients I am winding down working with still have me tracking a few keywords & one of the terms saw a lot of smaller sites (in terms of brand awareness) repeatedly slide and recover over the past month.

Notice how a number of sites would spike down on the same day & then back up. And then the pattern would repeat.

As a comparison, here is that chart over the past 3 months.

Notice the big ranking moves which became common over the past month were not common the 2 months prior.

Negative SEO Was Real

There is a weird sect of alleged SEOs which believes Google is omniscient, algorithmic false positives are largely a myth, AND negative SEO was never a real thing.

As it turns out, negative SEO was real, which likely played a part in Google taking years to roll out this Penguin update AND changing how they process Penguin from a sitewide negative factor to something more granular.

@randfish Incredibly important point is the devaluing of links & not “penalization”. That’s huge. Knocks negative SEO out. @dannysullivan— Glenn Gabe (@glenngabe) September 23, 2016

Update != Penalty Recovery

Part of the reason many people think there was no Penguin update, or responded to the update with “that’s it?”, is that few sites which were hit in the past recovered, relative to the number of sites which ranked well until recently and just got clipped by this algorithm update.

When Google updates algorithms or refreshes data it does not mean sites which were previously penalized will immediately rank again.

Some penalties (absent direct Google investment or nasty public relations blowback for Google) require a set amount of time to pass before recovery is even possible.

Google has no incentive to allow a broad-based set of penalty recoveries on the same day they announce a new “better than ever” spam fighting algorithm.

They’ll let some time pass before the penalized sites can recover.

Further, many of the sites which were hit years ago & remain penalized have been so defunded for so long that they’ve accumulated other penalties due to things like tightening anchor text filters, poor user experience metrics, ad heavy layouts, link rot & neglect.

What to do?

So here are some of the obvious algorithmic holes left by the new Penguin approach…

- only kidding

- not sure that would even be a valid mindset in the current market

- hell, the whole ecosystem is built on quicksand

The trite advice is to make quality content, focus on the user, and build a strong brand.

But you can do all of those well enough that you change the political landscape yet still lose money.

“Mother Jones published groundbreaking story on prisons that contributed to change in govt policy. Cost $350k & generated $5k in ad revenue”— SEA☔☔LE SEO (@searchsleuth998) August 22, 2016

Google & Facebook are in a cold war, competing to see who can kill the open web faster, using each other as justification for their own predation.

Even some of the top brands in big money verticals which were known as the canonical examples of SEO success stories are seeing revenue hits and getting squeezed out of the search ecosystem.

And that is without getting hit by a penalty.

It is getting harder to win in search, period.

And it is getting almost impossible to win in search by focusing on search as an isolated channel.

I never understood mentality behind Penguin “recovery” people. The spam links ranked you, why do you expect to recover once they’re removed?— SEOwner (@tehseowner) September 25, 2016

Efforts and investments in chasing the algorithms in isolation are getting less viable by the day.

Obviously removing them may get you out of algorithm, but then you’ll only have enough power to rank where you started before spam links.— SEOwner (@tehseowner) September 25, 2016

Anyone operating at scale chasing SEO with automation is likely to step into a trap.

When it happens, that player better have some serious savings or some non-Google revenues, because even with “instant” algorithm updates you can go months or years on reduced revenues waiting for an update.

And if the bulk of your marketing spend while penalized is spent on undoing past marketing spend (rather than building awareness in other channels outside of search) you can almost guarantee that business is dead.

“If you want to stop spam, the most straight forward way to do it is to deny people money because they care about the money and that should be their end goal. But if you really want to stop spam, it is a little bit mean, but what you want to do, is break their spirits.” – Matt Cutts