Visual search engines will be at the center of the next phase of evolution for the search industry, with Pinterest, Google, and Bing all announcing major developments recently.

How do they stack up today, and who is best placed to offer the strongest visual search experience?

Historically, the input-output relationship in search has been dominated by text. Even as the outputs have become more varied (video and image results, for example), the inputs have been text-based. This has restricted and shaped the potential of search engines, as they try to extract more contextual meaning from a relatively static data set of keywords.

Visual search engines are redefining the limits of our language, opening up a new avenue of communication between people and computers. If we view language as a fluid system of signs and symbols, rather than a fixed set of spoken or written words, we arrive at a much more compelling and profound picture of the future of search.

Our culture is visual, a fact that visual search engines are all too eager to capitalize on.

Already, specific ecommerce visual search technologies abound: Amazon, Walmart, and ASOS are all in on the act. These companies’ apps turn a user’s smartphone camera into a visual discovery tool, searching for similar items based on whatever is in frame. This is just one use case, however, and the potential for visual search is much greater than just direct ecommerce transactions.

After a lot of trial and error, this technology is coming of age. We are on the cusp of accurate, real-time visual search, which will open a raft of new opportunities for marketers.

Below, we review the progress made by three key players in visual search: Pinterest, Google, and Bing.

Pinterest’s visual search technology is aimed at carving out a position as the go-to place for discovery searches. Its stated aim speaks directly to those limits of language: “To help you find things when you don’t have the words to describe them.”

Rather than tackle Google directly, Pinterest has decided to offer something subtly different to users – and advertisers. People go to Pinterest to discover new ideas, to create mood boards, and to be inspired. Pinterest therefore urges its 200 million users to “search outside the box”, in what could be read as a gentle jibe at Google’s ever-present search bar.

All of this is driven by Pinterest Lens, a sophisticated visual search tool that uses a smartphone camera to scan the physical world, identify objects, and return related results. It is available via the smartphone app, but Pinterest’s visual search functionality can be used on desktop through the Google Chrome extension too.

Pinterest’s vast data set of over 100 billion Pins provides ideal training material for machine learning applications. With graphics processing units (GPUs) accelerating the process, these applications forge new connections between the physical and digital worlds.

In practice, Pinterest Lens works very well and is getting noticeably better with time. The image detection is impressively accurate and the suggestions for related Pins are relevant.

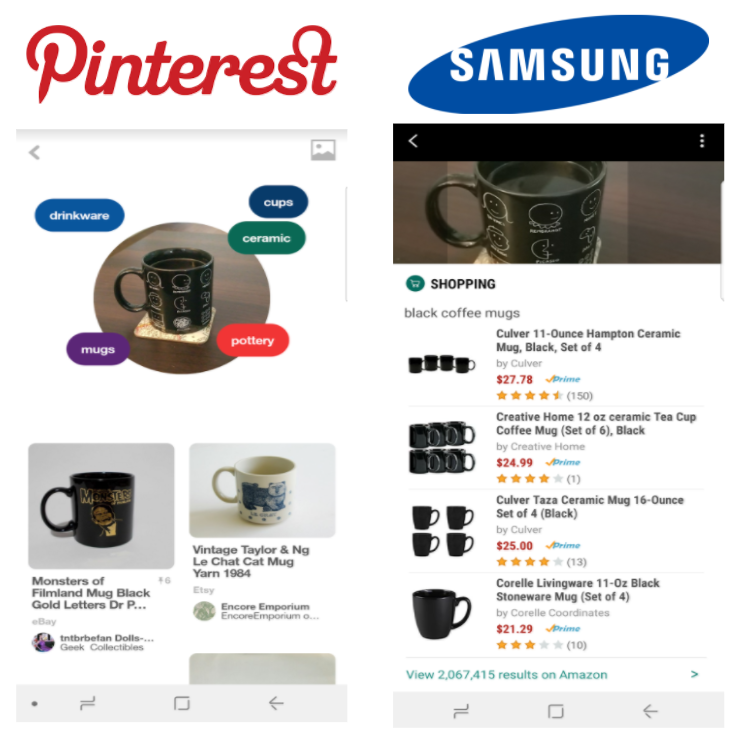

Below, the same object has been selected for a search using both Pinterest and Samsung’s visual search:

The differences in the results are telling.

On the left, Pinterest recognizes not only the object’s shape, material, and purpose, but also the defining features of its design. This allows for results that go deeper than a direct search for another black mug. Pinterest knows that the less tangible, stylistic details are what really interest its users. As a result, we see results for mugs in different colors but of a similar style.

On the right, Samsung’s Bixby assistant recognizes the object, its color, and its purpose. Samsung’s results are powered by Amazon, and they are a lot less inspiring than the options served up by Pinterest. The image is turned into a keyword search for [black coffee mugs], which renders the visual search element a little redundant.

Visual search engines work best when they express something for us that we would struggle to say in words. Pinterest understands and delivers on this promise better than most.
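To make the contrast above concrete, here is a toy sketch in Python comparing a keyword match (the Bixby-style approach of turning the image into [black coffee mug]) with a similarity ranking over visual features. It is not how Pinterest or Samsung actually implement search: the four feature dimensions, the catalog items, and the function names are all hypothetical, and real visual search engines learn their features from images with machine learning rather than using hand-picked numbers.

```python
import math

# Hypothetical, hand-picked features for illustration only:
# (mug_shape, black_color, matte_finish, geometric_handle).
# Real visual search engines learn such features from images.
CATALOG = {
    "black coffee mug": (1.0, 1.0, 0.0, 0.0),
    "white matte mug with geometric handle": (1.0, 0.0, 1.0, 1.0),
    "grey matte mug with geometric handle": (1.0, 0.2, 1.0, 1.0),
    "black baseball cap": (0.0, 1.0, 0.0, 0.0),
}


def keyword_search(query, catalog):
    """Return catalog items whose names contain every word in the query."""
    words = query.lower().split()
    return [name for name in catalog if all(word in name for word in words)]


def cosine(a, b):
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def visual_search(query_features, catalog, k=2):
    """Return the k items whose features are most similar to the query's."""
    ranked = sorted(
        catalog,
        key=lambda name: cosine(query_features, catalog[name]),
        reverse=True,
    )
    return ranked[:k]


if __name__ == "__main__":
    # Hypothetical photographed object: a black mug with a matte finish and a geometric handle.
    photo = (1.0, 1.0, 1.0, 1.0)
    print(keyword_search("black coffee mug", CATALOG))  # ['black coffee mug']
    print(visual_search(photo, CATALOG))
    # ['grey matte mug with geometric handle', 'white matte mug with geometric handle']
```

The keyword match can only return the literal "black coffee mug", while the similarity ranking surfaces matte mugs with geometric handles in grey and white: different colors, similar style, which is the kind of result the Pinterest comparison describes.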

Pinterest visual search: The key facts

- Over 200 million monthly users

- Focuses on the ‘discovery’ phase of search

- Pinterest Lens is the central visual search technology

- Great platform for retailers, with obvious monetization possibilities

- Paid search advertising is a core growth area for the company

- Increasingly effective visual search results, particularly on the deeper level of aesthetics

Google made early waves in visual search with Google Goggles. This Android app launched in 2010 and allowed users to search using their smartphone camera. It works well on famous landmarks, but it has not been updated significantly in quite some time.

It seemed unlikely that Google would remain silent on visual search for long, and this year’s I/O developer conference revealed what the search giant has been working on behind the scenes.

Google Lens, which will be available via the Photos app and Google Assistant, will be a significant overhaul of the earlier Google Goggles initiative.

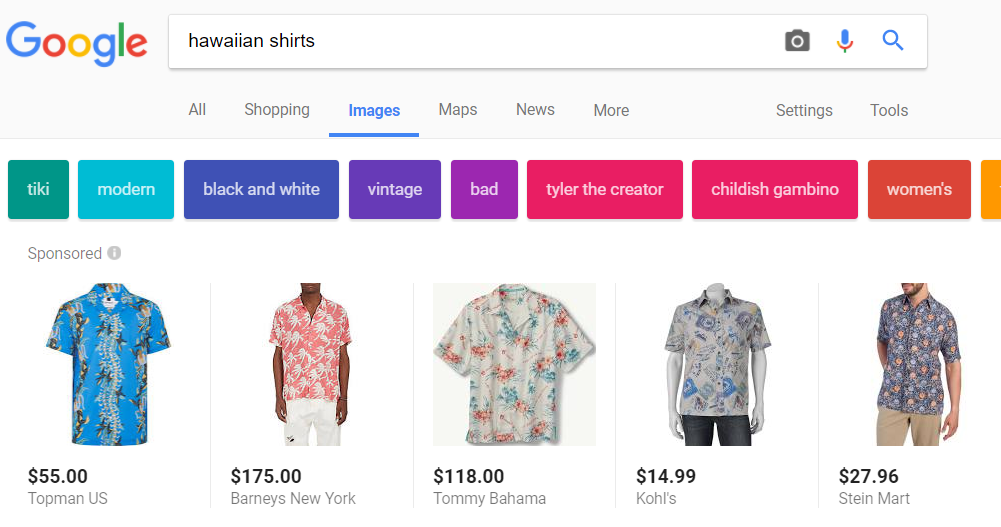

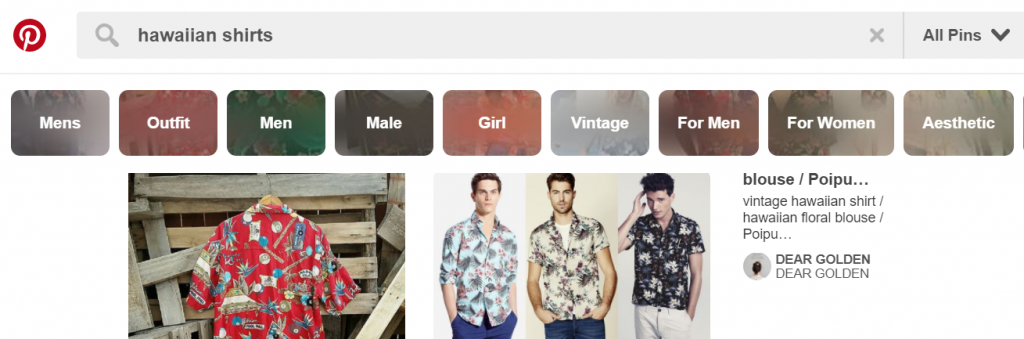

The similarity to the name of Pinterest’s product may be more than coincidental. Google has stealthily upgraded its image and visual search engines of late, ushering in results that resemble Pinterest’s format:

Google’s ‘similar items’ product was another move to cash in on the discovery phase of search, showcasing related results that might further pique a consumer’s curiosity.

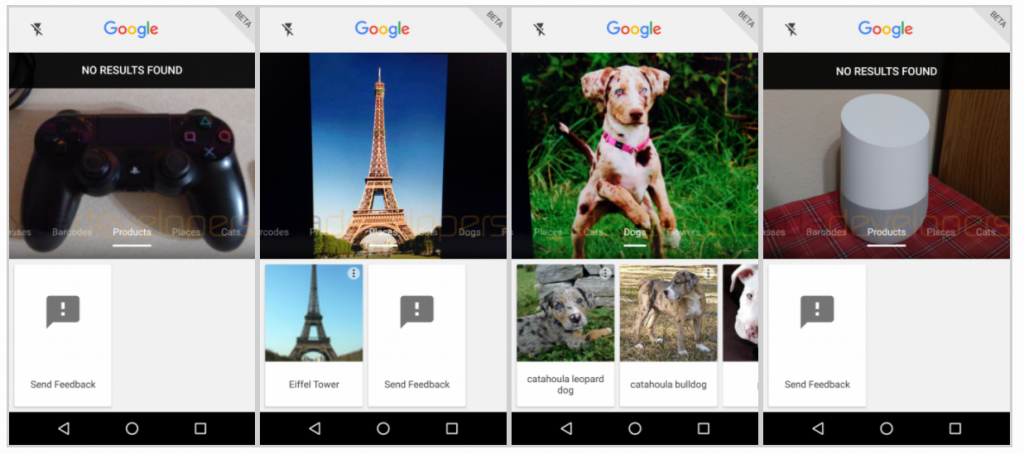

Google Lens will provide the object detection technology to link all of this together in a powerful visual search engine. In its beta form, Lens offers the following categories for visual searches:

- All

- Clothing

- Shoes

- Handbags

- Sunglasses

- Barcodes

- Products

- Places

- Cats

- Dogs

- Flowers

Some developers have been given the chance to try an early version of Lens, with many reporting mixed results:

Looks like Google doesn’t recognize its own Home smart hub… (Source: XDA Developers)

These are very early days for Google Lens, so we can expect this technology to improve significantly as it learns from its mistakes and successes.

When it does, Google is uniquely placed to make visual search a powerful tool for users and advertisers alike. The opportunities for online retailers via paid search are self-evident, but there is also huge potential for brick-and-mortar retailers to capitalize on hyper-local searches.

For all its impressive advances, Pinterest does not possess the ecosystem to permeate all aspects of a user’s life in the way Google can. With a new Pixel smartphone in the works, Google can use visual search alongside voice search to unite its software and hardware. For advertisers using DoubleClick to manage their search and display ads, that presents a very appealing prospect.

We should also anticipate that Google will take this visual search technology further in the near future.

Google is set to open its ARCore product up to all developers, which will bring with it endless possibilities for augmented reality. ARCore is a direct rival to Apple’s ARKit and it could provide the key to unlock the full potential of visual search. We should also not rule out another move into the wearables market, potentially through a new version of Google Glass.

Google visual search: The key facts

- Google Goggles launched in 2010 as an early entrant to the visual search market

- Goggles still functions well for some landmarks, but struggles to isolate objects in crowded frames

- Google Lens scheduled to launch later this year (Date TBA) as a complete overhaul of Goggles

- Lens will link visual search to Google search and Google Maps

- Object detection is not yet perfected, but the product is still in beta

- Google is best placed to create an advertising product around its visual search engine, once the technology increases in accuracy

Bing

Microsoft had been very quiet on this front since sunsetting its Bing visual search product in 2012. That product never really took off; perhaps the mass public was not yet ready for a visual search engine.

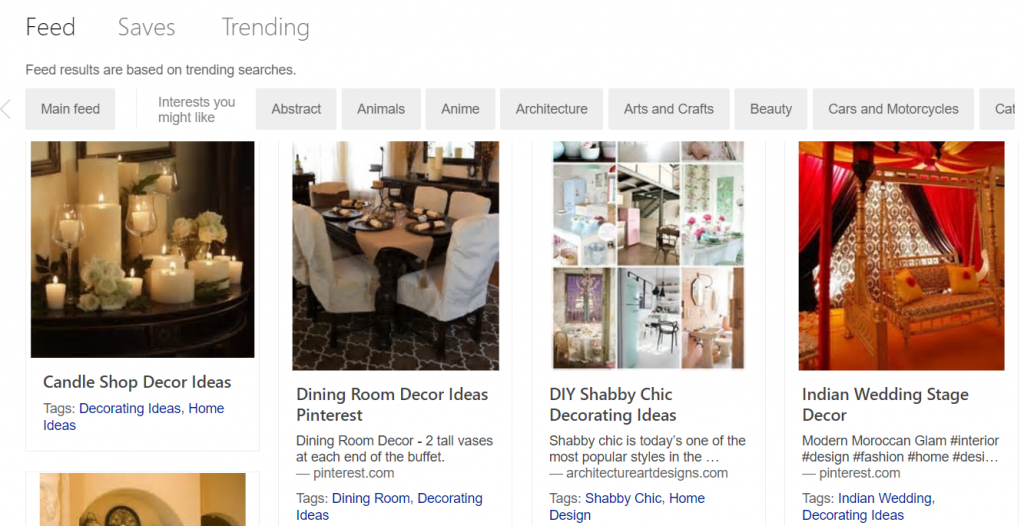

Recently, Bing made an interesting re-entry to the fray with the announcement of a completely revamped visual search engine:

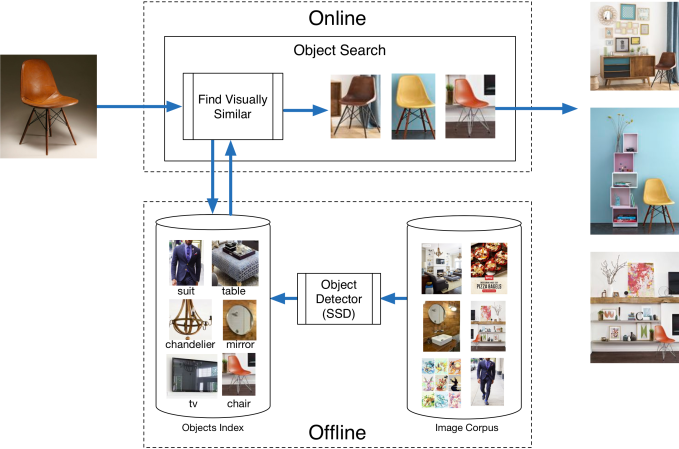

This change of tack has been driven by advances in artificial intelligence that can automatically scan images and isolate items.

The early versions of this search functionality required input from users to draw boxes around certain areas of an image for further inspection. Bing announced recently that this will no longer be needed, as the technology has developed to automate this process.

The layout of visual search results on Bing is eerily similar to Pinterest. If imitation is the sincerest form of flattery, Pinterest should be overwhelmed with flattery by now.

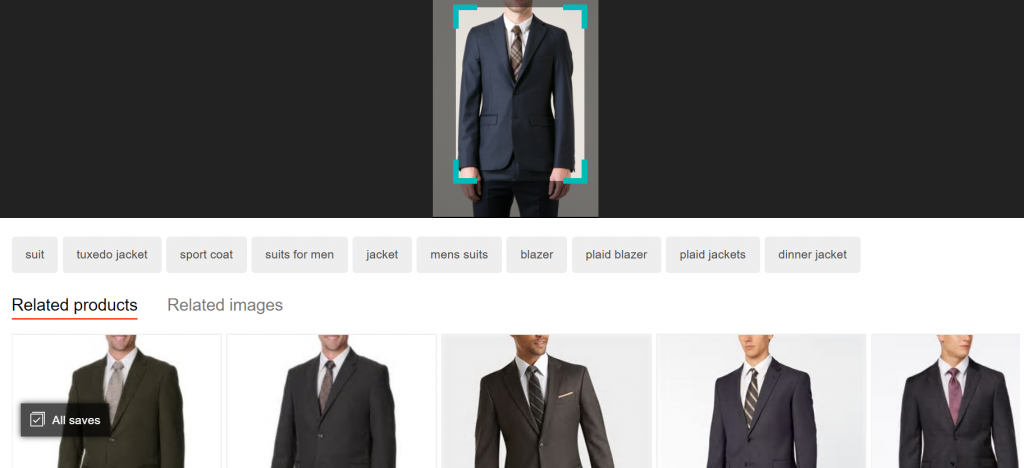

The visual search technology can home in on objects within most images and then suggest further items that may interest the user. It is only available on desktop for the moment, but mobile support will be added soon.

The results are patchy in places, but when an object is detected relevant suggestions are made. In the example below, a search made using an image of a suit leads to topical, shoppable links:

It does not, however, take into account the shirt or tie – the only searchable aspect is the suit.

Things get patchier still for searches using crowded images. A visual search for living room decor ideas will bring up some relevant results, but will not always home in on specific items.

As with all machine learning technologies, this product will continue to improve, and for now Bing is a step ahead of Google in this respect. Nonetheless, Microsoft lacks the user base and the mobile hardware to launch a real assault on the visual search market in the long run.

Visual search thrives on data; in this regard, both Google and Pinterest have stolen a march on Bing.

Bing visual search: The key facts

- Originally launched in 2009, but removed in 2012 due to lack of uptake

- Relaunched in July 2017, underpinned by AI to identify and analyze objects

- Advertisers can use Bing visual search to place shoppable images

- The technology is in its infancy, but the object recognition is quite accurate

- Desktop only for now, but mobile will follow soon

So, who has the best visual search engine?

For now, Pinterest. With billions of data points and some seasoned image search professionals driving the technology, it provides the smoothest and most accurate experience. It also does something unique by grasping the stylistic features of objects, rather than just their shape or color. As such, it alters the language at our disposal and extends the limits of what is possible in search marketing.

Bing has made massive strides in this arena of late, but it lacks the killer application that would make it stand out enough to draw searchers from Google. Bing visual search is accurate and functional, but does not create connections to related items in the way that Pinterest can.

The launch of Google Lens is sure to shake up this market, too. If Google can nail down automated object recognition (which it undoubtedly will), Google Lens could be the product that links traditional search to augmented reality. The resources and the product suite at Google’s disposal make it the likely winner in the long run.