If you want more information about your site’s natural search performance, Google gives it to you. Take some of it with a grain of salt, but there’s no reason not to listen.

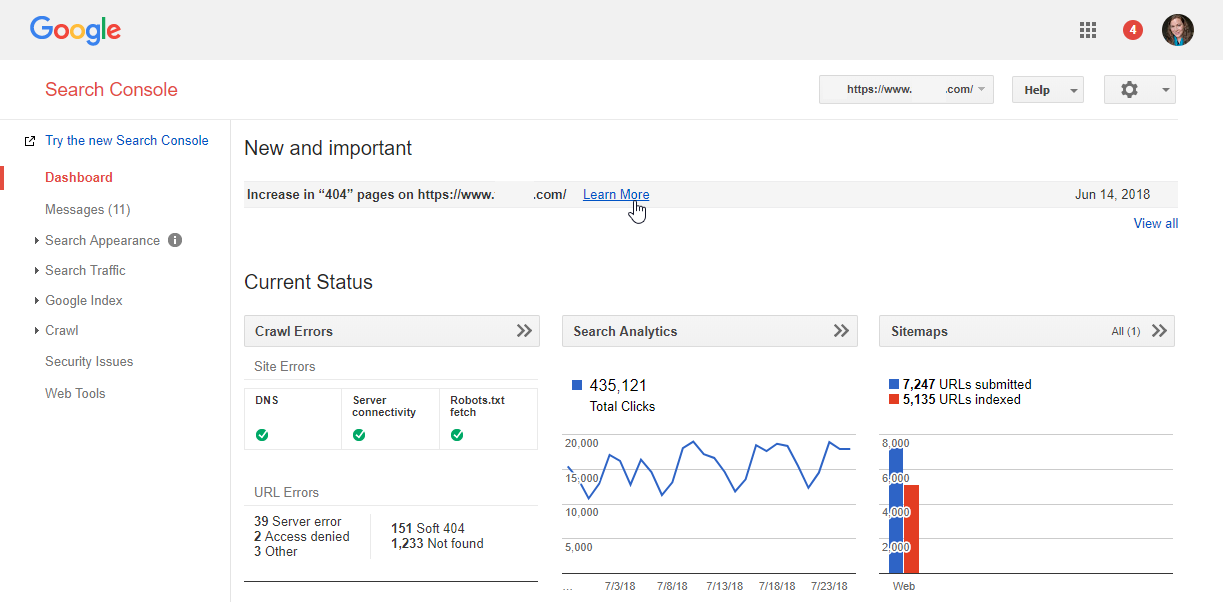

Google Search Console’s dashboard view displays messages, errors, performance, and indexation.

Search Console is Google’s way of communicating with webmasters and search engine optimization professionals about the status of their sites within its search results.

Setting up Google Search Console can be a little daunting. It usually requires a developer’s help, unless you have access to a tag manager. It’s well worth the effort, though, for all of the tools it opens up.

After “verifying” your site, you’ll see messages from Google about how your site looks in search results, as well as its performance, indexation, and crawl data.
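If your developer verifies the site with Google’s HTML meta tag method, it’s worth confirming that the tag actually made it into the live homepage before you click “Verify.” Here is a minimal Python sketch of that check, assuming you already have the page’s HTML as a string (fetching it is left out). The token `abc123` is a placeholder for the value Search Console issues you.

```python
from html.parser import HTMLParser


class VerificationTagFinder(HTMLParser):
    """Collects the content values of <meta name="google-site-verification"> tags."""

    def __init__(self):
        super().__init__()
        self.tokens = []

    def handle_starttag(self, tag, attrs):
        # attrs arrives as a list of (name, value) pairs; tag names are lowercased
        attributes = dict(attrs)
        if tag == "meta" and attributes.get("name") == "google-site-verification":
            self.tokens.append(attributes.get("content"))


def find_verification_tokens(html):
    finder = VerificationTagFinder()
    finder.feed(html)
    finder.close()
    return finder.tokens


# Hypothetical homepage markup; "abc123" stands in for your real token.
homepage = """
<html><head>
  <title>Example Store</title>
  <meta name="google-site-verification" content="abc123">
</head><body></body></html>
"""

print(find_verification_tokens(homepage))
```

An empty list means the tag is missing, so verification will fail until it is deployed.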

Messages

This section is Google’s way of communicating how your site can perform better in natural search. Messages rarely tell you everything you want to know, but they provide a place to start. Each message includes links to additional reports or help files.

For example, in the image above, there’s a message about 404 errors. Google can’t tell you why your site is throwing errors or how to fix them. But just knowing that errors have increased, and which URLs are affected, can make diagnosing the issue easier.
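Once Search Console points you at a batch of 404s, your own server logs can show how often Googlebot is hitting each broken URL. A minimal Python sketch, assuming logs in the Apache/Nginx “combined” format; the sample lines are made up. Anyone can put “Googlebot” in a user agent, so treat this as a rough count, not verified crawler traffic.

```python
import re
from collections import Counter

# Captures the request path, status code, and user agent from a
# combined-format log line (an assumption about your log format).
LOG_LINE = re.compile(
    r'"[A-Z]+ (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_404s(lines):
    """Count 404 responses served to user agents claiming to be Googlebot, by path."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and match["status"] == "404" and "Googlebot" in match["agent"]:
            counts[match["path"]] += 1
    return counts


GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_log = [
    f'66.249.66.1 - - [10/Oct/2017:13:55:36 -0700] "GET /old-page HTTP/1.1" 404 512 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Oct/2017:13:56:02 -0700] "GET /old-page HTTP/1.1" 404 512 "-" "{GOOGLEBOT}"',
    '203.0.113.9 - - [10/Oct/2017:13:57:10 -0700] "GET /old-page HTTP/1.1" 404 512 "-" "Mozilla/5.0 (Windows NT 10.0)"',
    f'66.249.66.1 - - [10/Oct/2017:13:58:44 -0700] "GET /products HTTP/1.1" 200 2048 "-" "{GOOGLEBOT}"',
]

for path, hits in googlebot_404s(sample_log).most_common():
    print(path, hits)
```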

You’ll also see messages about other crawl issues, notifications about how new algorithm updates or search features affect your site, and announcements of new features available in Search Console.

Search Appearance

How do your search results look? This section isn’t about ranking order but about the visual optimization of your listings. Use this data to take advantage of the options Google offers for results that are more likely to catch searchers’ eyes and earn higher click-through rates.

- Structured Data, Rich Cards. Google loves structured data because it makes it easier to display accurate and relevant information in search results. These tools help identify the structured data you have on your pages and the “cards” that show in search results.

- Data Highlighter. Use this tool to show Google where different types of data reside on your pages. For example, if the price on your product pages is always in the same place, use the highlighter to select that text and tag it as a price. Google does the rest, replicating the tagging across other similar pages.

- HTML Improvements. Google may display your pages’ title tags and meta descriptions as the text of their search results. This tool flags problems with that metadata, such as titles and descriptions that are duplicated, missing, too long, or too short.

- Accelerated Mobile Pages. If you’re using AMP to deliver faster pages to mobile users, this report shows the errors Google has found on your AMP pages.
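Data Highlighter tags pages without touching code; the alternative is to add structured data markup to your templates directly. Here is a minimal Python sketch that renders schema.org Product markup as JSON-LD, one of the structured data formats Google reads. The product values are placeholders, and a real product page would likely need more properties (images, availability, reviews, and so on) to qualify for rich results.

```python
import json


def product_json_ld(name, price, currency, url):
    """Build schema.org Product markup with a single Offer, wrapped in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "url": url,
        "offers": {
            "@type": "Offer",
            # Passing the price as text avoids float formatting surprises.
            "price": price,
            "priceCurrency": currency,
        },
    }
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


# Hypothetical product; drop the output into the page template's HTML.
print(product_json_ld("Blue Widget", "19.99", "USD", "https://www.example.com/blue-widget"))
```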

Search Traffic

This section is one of the top reasons to verify your sites in Search Console. It offers insight into natural search performance that you can’t get anywhere else.

- Search Analytics. The click, impression, and average position data, by keyword and landing page, make this tool worth the hassle it sometimes takes to set Search Console up. This is the only place you can find (theoretically) reliable keyword performance data for Google’s natural search results.

The numbers you see in this section will never exactly match your web analytics data. Search Console reports only on Google’s own search results, not on other search engines. And even then, the Google-referred traffic reported in Search Console won’t match the Google-referred traffic in your analytics. But the trends should be the same. And don’t miss the new beta version of this tool, with access to 16 months of data!
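When you export Search Analytics rows and roll several keywords up into one figure, weight each keyword’s average position by its impressions instead of taking a plain mean. Otherwise a query seen ten times counts as much as one seen ten thousand times. A minimal Python sketch, assuming rows exported by query with clicks, impressions, and position columns; the queries and numbers are invented. Google computes its own averages, so a roll-up like this is an approximation that may not match the report’s totals exactly.

```python
from dataclasses import dataclass


@dataclass
class QueryRow:
    query: str
    clicks: int
    impressions: int
    position: float  # average position for this query, as exported


def summarize(rows):
    """Total clicks and impressions, overall CTR, and impression-weighted average position."""
    clicks = sum(r.clicks for r in rows)
    impressions = sum(r.impressions for r in rows)
    ctr = clicks / impressions if impressions else 0.0
    # Weight each query's position by how often it was actually shown.
    position = (
        sum(r.position * r.impressions for r in rows) / impressions
        if impressions
        else 0.0
    )
    return {"clicks": clicks, "impressions": impressions, "ctr": ctr, "position": position}


# Hypothetical export rows.
rows = [
    QueryRow("blue widgets", clicks=120, impressions=3000, position=4.0),
    QueryRow("cheap widgets", clicks=30, impressions=1000, position=8.0),
]
summary = summarize(rows)
print(f"CTR {summary['ctr']:.2%}, average position {summary['position']:.1f}")
```

A plain mean of the two positions would be 6.0; weighting by impressions pulls it toward the query searchers actually saw most.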

- Links to Your Site and Internal Links. These link tools show which sites link to yours, which of your pages they point to, and how your own pages link to one another. They’re invaluable when you’re trying to diagnose a link quality issue that may be algorithmically dampening your site’s rankings.

- Manual Actions. If Google has penalized your site for web spam, you’ll find out more about it here, as well as in the Messages section.

- International Targeting. Analyze the language and country signals your site sends Google through hreflang tags. You can also tell Google which country your site targets. This is especially useful if you manage a site on a generic domain such as .com rather than a country-code top-level domain (ccTLD) such as .de or .kr.

- Mobile Usability. Since more than half of its searches are conducted on smartphones, Google has a soft spot for mobile usability. This report identifies issues that mobile searchers may experience.
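The hreflang signals that International Targeting analyzes work best when every language version of a page lists the full set of alternates, including itself, so that the annotations are reciprocal. A minimal Python sketch that renders one such set of `<link>` tags, with an optional `x-default` page for visitors whose language matches none of the others. The URLs and locale codes are hypothetical.

```python
def hreflang_links(alternates, default_url=None):
    """Render <link rel="alternate" hreflang=...> tags for one set of equivalent pages.

    The same output belongs on every page in the set, so each page
    references itself and all of its alternates.
    """
    tags = [
        f'<link rel="alternate" hreflang="{lang}" href="{url}" />'
        for lang, url in sorted(alternates.items())
    ]
    if default_url:
        # x-default names the fallback page for unmatched languages.
        tags.append(f'<link rel="alternate" hreflang="x-default" href="{default_url}" />')
    return "\n".join(tags)


# Hypothetical language-region versions of one page.
pages = {
    "en-us": "https://www.example.com/us/",
    "de-de": "https://www.example.com/de/",
    "ko-kr": "https://www.example.com/kr/",
}
print(hreflang_links(pages, default_url="https://www.example.com/"))
```

Generating the tags from a single mapping, rather than hand-editing each page, makes it harder for one version to drop a return link.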

Google Index

Your pages can’t rank in natural search or generate traffic without first being indexed. Google uses this section to communicate your site’s indexation status.

- Index Status. Think of this tool as showing the number of your pages that Google has indexed, and that are therefore eligible to rank.

- Blocked Resources. The items shown in this chart are images and other files on your site that Google cannot access because your robots.txt file blocks them. If you’re blocking CSS and JavaScript files, Google may not be able to render your pages the way visitors see them, which can lead to poorer indexing and rankings. And blocking image files keeps those images from ranking in image search.

- Remove URLs. Treat this tool with care, because it does just what it says: it removes pages from Google’s search results. That’s beneficial when private or secure information has been published accidentally, or when a section of content adds no value to natural search. Unfortunately, it’s also easy to slip up and remove content that should be indexed.

- URL Inspection. The URL Inspection tool is new in the beta Search Console. It gathers the crawl and indexation information for a single URL into one report.
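To catch blocked CSS, JavaScript, or image files before they show up in Blocked Resources, you can check asset URLs against your robots.txt rules. A minimal Python sketch using the standard library’s `urllib.robotparser`; the robots.txt content and URLs are hypothetical. One caveat: Python’s parser applies rules in file order (first match wins), while Google applies the most specific matching rule, so results can differ when Allow and Disallow lines overlap. Search Console’s own robots.txt Tester has the final word.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks an asset directory along with the admin area.
ROBOTS_TXT = """
User-agent: *
Disallow: /admin/
Disallow: /assets/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical resources that pages on the site depend on.
resources = [
    "https://www.example.com/assets/site.css",
    "https://www.example.com/assets/app.js",
    "https://www.example.com/images/blue-widget.jpg",
]
for url in resources:
    status = "allowed" if parser.can_fetch("Googlebot", url) else "BLOCKED"
    print(status, url)
```

Here the stylesheet and script would be flagged, which is exactly the kind of rule that keeps Google from rendering pages the way visitors see them.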

Crawl

It all starts with the crawl. If Google has problems crawling your site to index it, the pages that are not crawlable will have a very hard time ranking.

- Crawl Errors. Google also displays this information on the dashboard. You’ll find HTTP status code errors here, from 404 (file not found) to 500 (internal server error). When they spike, you likely have a larger crawl issue that can result in decreased rankings and performance.

- Crawl Stats. Over the last 90 days, how many pages did Google crawl per day, how many kilobytes did it download, and how long did it take to download a page? You’ll find the answers here. Spikes may indicate server issues or the launch of new content that requires crawling for the first time.

- Fetch as Google. The best way to see your content as Google does is to fetch the page and render it in this tool. You’ll see how Google interprets the page, as well as what visitors see in their browsers. You’ll also have the option to submit the page to Google’s index, which is handy when you’re launching new content.

- robots.txt Tester. The robots.txt file can restrict and allow crawl access to different areas of the site. As with the Remove URLs tool, changes to the robots.txt file should be managed carefully. Test all proposed changes in this tool to prevent costly accidents such as blocking search engines from crawling your entire site.

- Sitemaps. Roll out the red carpet for Google by submitting your XML sitemaps for crawling here. The tool then reports how many of the URLs in those sitemaps are indexed.

- URL Parameters. If you have parameter-based URLs that you want Google to stay away from, submit those parameters in this tool. For example, search engines don’t need to crawl versions of pages sorted in different orders. If a parameter controls that sorting, submit it here to make Google’s crawl more efficient and increase crawl equity.
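Before telling Google how to treat a parameter, it helps to see exactly which URLs that parameter multiplies into. A minimal Python sketch that collapses sort-order variants of a page back to one URL, assuming `sort` and `order` are presentation-only parameters on your site (both names are hypothetical). Parameters that change content, such as a color filter, are left alone.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that only reorder content, not change it.
IGNORED_PARAMS = {"sort", "order"}


def canonical_url(url):
    """Drop presentation-only parameters so sort-order variants collapse to one URL."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment))


variants = [
    "https://www.example.com/widgets?sort=price",
    "https://www.example.com/widgets?sort=name&order=desc",
    "https://www.example.com/widgets?color=blue&sort=price",
]
for url in variants:
    print(canonical_url(url))
```

The same normalization could also keep duplicate sort-order URLs out of the XML sitemaps you submit.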