We are a visually oriented species. Humans can understand pictures in the blink of an eye. In comparison, we read terribly slowly and understand text even more slowly. What’s more, part of the world’s population are visual thinkers: people who also think in pictures. Considering this, it’s no surprise to see search becoming more visual. You might just start your next search by opening the camera app on your smartphone. Meet visual search.

What is visual search?

Visual search covers every search that starts from a real-world image, such as a photo or a screenshot. Every time you point Google Lens at a piece of clothing, you are performing a visual search. Whenever you use Pinterest to find a style match, you are doing a visual search. Visual search answers questions like: “Show me stuff that’s kinda like that but different”, or “I don’t know what I want, but I’ll know it when I see it”.

It’s not just about style-matching that awesome outfit you saw or finding out what type of chair is in that hipster interior. It goes much further than that, and we’re still only at the beginning. Photo apps can read text in images and translate it. Computer vision can recognize tons of entities, from celebrities to logos and from landmarks to handwriting. At the moment, visual search is making waves in the fashion and home decor sectors, with big brands like Amazon, Macy’s, ASOS and Wayfair leading the way.

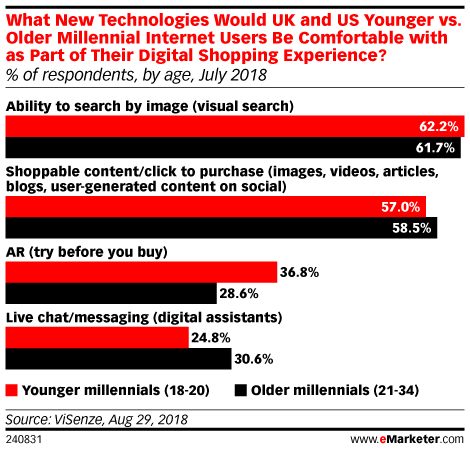

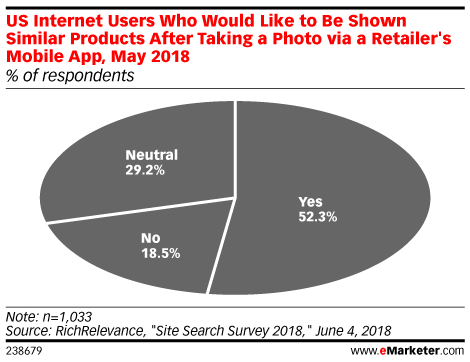

Research shows consumers are very interested in using visual search as part of their shopping experience. What’s more, a recent SparkToro article found that Google Images is the second-largest player in the search engine market, with 21% of searches starting there. Images are here to stay.

Many US internet users would consider visual search a great addition to regular site search channels.

Today, people take pictures of everything: not just beautiful scenery or mementos of their adventures, but also things they need to remember or tasks they need to do. Visual search will increasingly help turn those images into actions. Take a photo of a business card and automatically add the contact details to your address book. Or take a photo of your handwritten shopping list and add the items to the shopping cart of your favorite supermarket. The possibilities are endless.

Visual search differs from image search

Visual search is part of something called sensory search, which consists of searching via text, voice and vision. In the past, nearly every search started with someone typing a couple of words into a text field. Today, more and more searches start with a spoken question or by pointing a camera app at something. Expect these different types of search to converge more and more, as visual search is a great addition to text and voice search.

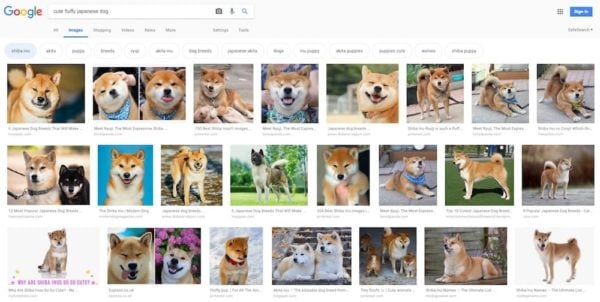

While visual search uses visuals as a starting point, image search is different. Image search has been around forever. A classic image search starts with a typed search prompt in a search field and leads to a SERP that shows a number of images that match that specific search. These images can be narrowed down by selecting smart filters from the menu bar.

How does visual search work?

People have been talking about visual search for a long time, but over the past couple of years it has really come into its own. Very powerful smartphones, increasingly smart artificial intelligence and consumer interest drive the growth of this exciting development. But how does visual search work?

Visual search is powered by computer vision and trained by machine learning. Computer vision can be described as the technology that lets computers see. Not only that, it helps computers understand what they see and do something useful with that knowledge. In a sense, computer vision tries to get machines to understand the world as we humans see it.

Computer vision has been around for ages, but thanks to hardware developments and major new discoveries in machine learning, it is improving by leaps and bounds. Machine learning provides much of the input an algorithm needs to make sense of images. To a machine, an image is just a grid of numbers (the color values of its pixels); it needs context to get even the slightest understanding of what’s in it. Machine learning can provide that context.
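
To make “an image is just numbers” concrete, here is a minimal Python sketch of similarity-based visual search, the “show me stuff that’s kinda like that” kind. It is a toy under stated assumptions: the images are tiny hand-written grids of RGB values, and each one is summarized by a color histogram and compared with cosine similarity. Production systems typically rely on features learned by neural networks instead; the histogram is a deliberately simple stand-in, and all function names and catalogue entries are hypothetical.

```python
import math

# To a computer, an image is just a grid of numbers: here, rows of RGB pixels
# with each channel an integer from 0 to 255.
Pixel = tuple[int, int, int]
Image = list[list[Pixel]]


def color_histogram(image: Image, bins: int = 4) -> list[float]:
    """Summarize an image as the share of pixels falling into each color bucket."""
    step = 256 // bins
    counts = [0] * (bins ** 3)
    total = 0
    for row in image:
        for r, g, b in row:
            # Quantize each channel, then combine the three into one bucket index.
            index = (r // step) * bins * bins + (g // step) * bins + (b // step)
            counts[index] += 1
            total += 1
    return [count / total for count in counts]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """1.0 means identical color distributions; 0.0 means no overlap at all."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def visual_search(query: Image, catalogue: dict[str, Image]) -> list[tuple[str, float]]:
    """Rank catalogue images by how closely their colors match the query image."""
    query_features = color_histogram(query)
    scores = [
        (name, cosine_similarity(query_features, color_histogram(image)))
        for name, image in catalogue.items()
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Hypothetical 2x2 "photos": a mostly red query and a small product catalogue.
red_shoe = [[(200, 30, 30), (210, 40, 35)], [(190, 20, 25), (255, 255, 255)]]
catalogue = {
    "red-dress": [[(220, 20, 20), (200, 35, 30)], [(230, 10, 40), (250, 250, 250)]],
    "blue-chair": [[(20, 40, 200), (30, 30, 220)], [(10, 60, 210), (240, 240, 240)]],
}

for name, score in visual_search(red_shoe, catalogue):
    print(f"{name}: {score:.2f}")
```

The red dress ranks above the blue chair because its color distribution overlaps more with the query’s. A real engine ranks in the same spirit, just with far richer features than color.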

Teaching a computer to see

Using machine learning, you can literally teach a computer what something is with a training set, starting small and then scaling up quickly. Feed it enough data and it can tell apart even slight variations. To increase the knowledge of these machines, Google cross-references its findings with its Knowledge Graph. This makes it much easier for machines to connect the pieces of the puzzle: what’s in a particular image and how that image fits into the bigger picture (pun intended). Many providers also give their computer vision models OCR (optical character recognition) capabilities, meaning they can read text as well.
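
The training-set idea can be sketched in a few lines of Python with a nearest-centroid classifier. This is a deliberately simple technique swapped in for the neural networks that modern computer vision actually relies on, and the feature vectors and labels below are made up for illustration; in practice they would be extracted from labeled images.

```python
import math
from collections import defaultdict

# Hypothetical training set: (feature vector, label) pairs. In a real system the
# vectors would be extracted from labeled images; these numbers are made up.
TRAINING_SET = [
    ([0.9, 0.1, 0.0], "sneaker"),
    ([0.8, 0.2, 0.1], "sneaker"),
    ([0.1, 0.9, 0.2], "armchair"),
    ([0.2, 0.8, 0.1], "armchair"),
]


def train(examples: list[tuple[list[float], str]]) -> dict[str, list[float]]:
    """Learn one centroid (the average feature vector) per label."""
    sums: dict[str, list[float]] = {}
    counts: dict[str, int] = defaultdict(int)
    for vector, label in examples:
        total = sums.setdefault(label, [0.0] * len(vector))
        for i, value in enumerate(vector):
            total[i] += value
        counts[label] += 1
    return {label: [v / counts[label] for v in total] for label, total in sums.items()}


def predict(model: dict[str, list[float]], vector: list[float]) -> str:
    """Return the label whose centroid is nearest (Euclidean distance)."""
    return min(model, key=lambda label: math.dist(model[label], vector))


model = train(TRAINING_SET)
print(predict(model, [0.85, 0.15, 0.05]))  # → sneaker
```

Adding more labeled examples and finer-grained labels (say, running shoes versus basketball shoes) is how a model like this learns to separate slight variations.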

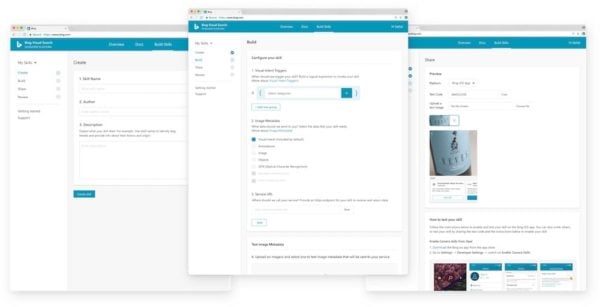

There are many third-party providers of this kind of technology if you want to integrate computer vision into your software. In addition to all the third-party providers, platforms like Google’s Cloud Vision and Bing’s Visual Search Developer Platform give you various ways of incorporating visual search into your sites and apps.

Uses of visual search

You might think visual search is of little use to the average site owner, or that it doesn’t mean much for SEO. You’d be wrong. Big brands are out there testing it and conditioning their consumers to use visual search. If you’re in ecommerce, you have to keep an eye on this development. We’ll see many more players enter the visual search ecommerce space, or quickly build their presence and power, like Pinterest with its new automated Shop The Look pins. There’s a lot happening, but visual search is still only at the beginning of its lifecycle.

Currently, the focus is on making sense of images and doing something useful with them. Soon, we’ll also see visual search meet augmented reality, and maybe even virtual reality. While AR and VR have been hyped to death by now, the killer application of these technologies has yet to be found. Maybe overlaying visual search results onto the real world could be just that?

Visual search can be used for a lot of things, like helping you discover landmarks in a strange city, helping you increase productivity or find the beautiful pair of shoes that fits perfectly with that new dress you bought. It can also help you identify stuff like plants and animals and teach you how to do a particular chore. Who knows what else?

Some visual search applications

When we think of visual search, a couple of players immediately come to mind. It’s no surprise that almost every big brand is experimenting with visual search or researching what computer vision can bring to their platform.

Facebook, for instance, is working on an AI-powered version of its Marketplace. It even bought a visual search technology startup called GrokStyle that could drive that development. Apple also bought several companies active in the visual search space, mostly to improve its photo apps, while its ARKit developer program has very interesting options for working with visuals. Both Snapchat and Instagram let you buy stuff on Amazon by pointing your camera at an object.

Here are some of the most-used visual search tools at the moment:

Pinterest Lens

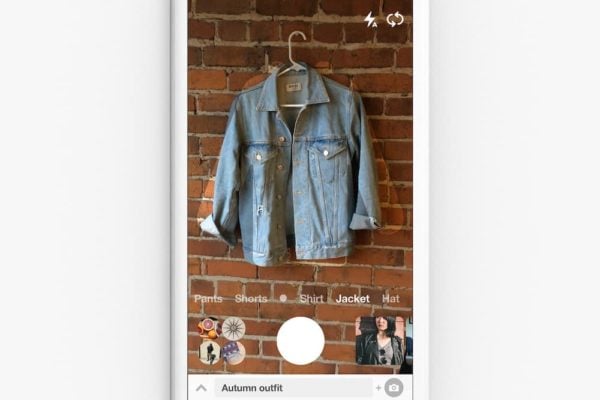

Visual search has been around for some time, but one platform brought it into the limelight: Pinterest. Pinterest is the OG, so to speak. It is an inherently visual platform, as it lets users collect images in boards and get inspired by looking at other people’s boards. While helpful and interesting, it was a fairly static product.

A couple of years ago, the company started investing heavily in computer vision, AI and machine learning, which eventually led to a visual discovery tool called Lens. Ongoing development brought features like Shop the Look, the first visual shopping tool of its kind. Things really took off for Pinterest. In 2018, a year after the release of Lens, people did more than 600 million visual searches every month, a 140 percent increase year over year. That suggests a meteoric rise, but its platform dependency makes it too ‘closed’ to reach critical mass. Plus, the competition is picking up speed.

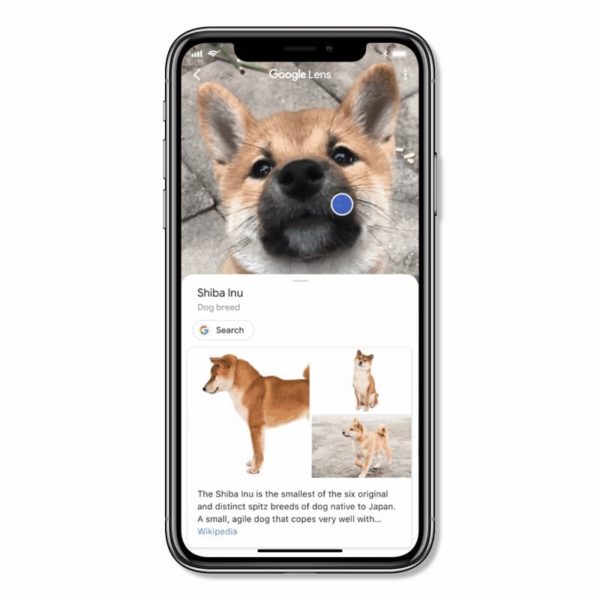

Google Lens

Google Lens wants to turn your phone into a visual search engine. Hit the tiny Lens icon, point your camera at an item and voila! Google is pushing Lens pretty hard. You can find it everywhere: the Photos app, Google Search app and Google Assistant. That last one is interesting, as you can use Assistant to do something with the images you capture. Take a picture of a recipe and ask Assistant to add it to your recipe book. Or use Google Translate to translate the foreign text on that sign — live, if you want.

Google Lens works in real time and recognizes over a billion products. At the end of last year, Google announced that Lens was also available in regular Image search. It’s US-only for now, but it is expected to roll out worldwide later this year.

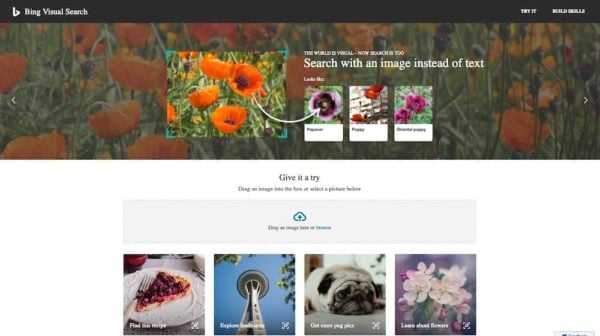

Bing Visual Search

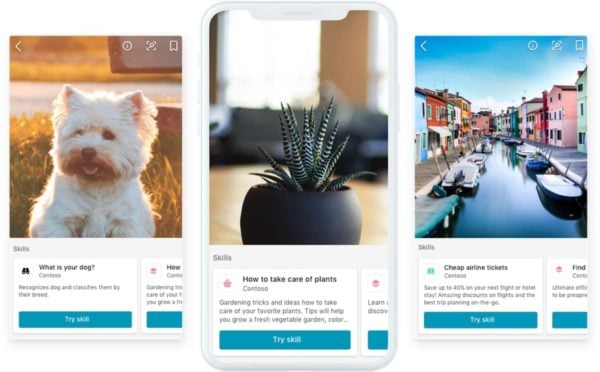

Bing is very active in the visual search space. Microsoft has been doing a lot of research and making much of that knowledge freely available. Not only have they integrated visual search into a really cool mobile app for the major platforms, but they also have a dedicated web platform. This website demonstrates the power of Bing visual search, and it works very well. Just upload an image and see what Bing makes of it, or use one of the example images to get a quick idea of how well it works.

While Bing mobile does much of the same stuff the other visual search providers do (point your camera at something and have it figure out what it is), it has one big differentiator: skills. Developers can harness the power of visual search to attach a skill to a matched image. So, if you have loads of products that Bing recognizes, you can define what a searcher should be able to do once your image has been analyzed. To do this, visual search identifies the intent of the search and requests different skills based on that intent. Bing then combines the skills and sends them to the app. If you have a home decor store, a search like this might yield not only a buy skill, but also a DIY skill. You can build these skills yourself. Try it on the Bing Visual Search Development Platform.
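
The intent-to-skills flow described above can be sketched as a small pipeline. This is not Bing’s API: the intents, skills and label-to-intent lookup are all hypothetical, and real intent detection is done by trained models rather than a dictionary. The sketch only shows the shape of the process: recognize what’s in the image, detect intents, request skills per intent, and combine them.

```python
# Hypothetical mapping from a detected intent to the actions ("skills") an app offers.
SKILLS_BY_INTENT = {
    "shopping": ["buy", "compare_prices"],
    "home_decor": ["buy", "diy_instructions"],
    "landmark": ["directions", "history"],
}

# Hypothetical stand-in for intent detection: a lookup from recognized labels.
INTENT_BY_LABEL = {
    "sofa": "home_decor",
    "lamp": "home_decor",
    "sneaker": "shopping",
    "eiffel tower": "landmark",
}


def detect_intents(labels: list[str]) -> list[str]:
    """Map the labels recognized in an image to search intents."""
    return [INTENT_BY_LABEL[label] for label in labels if label in INTENT_BY_LABEL]


def skills_for(labels: list[str]) -> list[str]:
    """Request skills for every detected intent and combine them, dropping duplicates."""
    combined: list[str] = []
    for intent in detect_intents(labels):
        for skill in SKILLS_BY_INTENT.get(intent, []):
            if skill not in combined:
                combined.append(skill)
    return combined


# A photo of a living room in which a sofa and a lamp were recognized.
print(skills_for(["sofa", "lamp"]))  # → ['buy', 'diy_instructions']
```

Keeping skills keyed by intent rather than by product means a home decor store defines its buy and DIY skills once, and every matching image picks them up.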

Amazon

Amazon is using its visual search technology mostly to give other platforms a way of visually shopping for stuff. I’ve already mentioned it is working with Snapchat and Instagram to let people shop via their camera apps. For Amazon, visual search is important as it gives users a new way to shop. Now, if you see something in a store, you can take a picture of it with the Camera Search functionality inside the Amazon app. It shows you all the relevant products that are available on Amazon, and you can refine your search via visual attributes if you want.

Apparently, Amazon was working on an AI-powered shopping platform called Scout. Last month, however, Amazon announced a new delivery robot with the name Scout. As of now, it’s unclear what happened to the old Scout product. The old Scout let users build up a sort of taste database by liking or disliking products and product variants. This would eventually help them narrow down their options and uncover new products that fit their taste.

Another interesting thing Amazon is working on is combining visual and voice search. Products like the Echo Show and Echo Spot bring a smart voice assistant into your home that backs up its search results with visuals. Amazon also offers a lot of insights into how visual search works and how you can build your own integration on AWS.

But how does Google Image search tie into this?

Reading all this, you might think that good old image search is on its way out. Well, you’re wrong. A large share of searches still happens in image search. Visual search is a kind of add-on to image search: you use it in different circumstances, and the results are different as well. If you know what you need, you’ll go and find an image in image search. If you see something interesting in an image but you’re not quite sure what it is, tap that image and let visual search do its job.

Google Image search had a makeover this year. Almost every month, Google changed something or added new features. I’ve already mentioned the availability of Lens inside the image search results on mobile: simply tap an image and see if Lens can work out what it is. Image search now also has related concepts filters that let you drill down into your topic or uncover related items you never thought of. There are now badges to identify if something is a product you can buy directly. This is only a small sampling of the changes Image search underwent. Oh, did I mention that you can just type [fluffy Japanese dog] to get results for those cute Shiba Inu dogs you saw earlier in this post? Yay entities, yay knowledge graph!

Image SEO: More important than ever

As we use images more and more to search for stuff, we need to take better care of our image SEO. Image SEO has always been something of an afterthought for many people, but please don’t be like that. You can win a lot if you just use high-quality, relevant images and optimize them thoroughly. Google sees the massive potential and is putting even more weight into it. Here’s Google’s Gary Illyes in a recent AMA on Reddit:

“We simply know that media search is way too ignored for what it’s capable doing for publishers so we’re throwing more engineers at it as well as more outreach.”

Gary Illyes

Last year, Google’s latest algorithmic change for Image search focused on:

- ranking results based on both great images and content

- authority

- relevance

- freshness

- location

- context

It’s not hard to get your images ready for image search. We have an all-encompassing post on image SEO if you need to learn more. In short:

- Use structured data where relevant: mark up your images

- Use alt attributes to describe the images

- Use unique images, not stock photos

- Make them high quality if you want AI to figure out what’s in them

- Add them in a relevant place, where they provide context to the text

- Use descriptive filenames, not IMG168393.jpg

- Add captions where necessary

- Use correctly sized images

- Always compress them!
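
A few of these checklist items lend themselves to automation. The Python sketch below builds a descriptive filename from a plain-language description and generates schema.org ImageObject markup as JSON-LD. It assumes a hypothetical image hosted at an example.com placeholder URL, and the chosen properties (`contentUrl`, `name`, `caption`) are an illustrative selection; check Google’s structured data documentation for which image properties it actually uses.

```python
import json
import re
import unicodedata


def descriptive_filename(description: str, extension: str = "jpg") -> str:
    """Turn a plain-language description into a readable, URL-safe filename."""
    # Strip accents, lowercase, and collapse anything that isn't a letter or digit into "-".
    ascii_text = unicodedata.normalize("NFKD", description).encode("ascii", "ignore").decode("ascii")
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")
    return f"{slug}.{extension}"


def image_object_jsonld(url: str, name: str, caption: str) -> str:
    """Wrap schema.org ImageObject markup in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "ImageObject",
        "contentUrl": url,
        "name": name,
        "caption": caption,
    }
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


filename = descriptive_filename("Red leather sneaker, side view")
print(filename)  # → red-leather-sneaker-side-view.jpg
print(image_object_jsonld(
    f"https://example.com/images/{filename}",  # placeholder URL
    "Red leather sneaker",
    "A red leather sneaker seen from the side",
))
```

Generating the filename and the markup from the same description keeps them consistent with each other, which is easier than maintaining both by hand.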

Our world is visual: now you can search with visuals

Over the past year, we’ve truly seen sensory search come to light. Everyone was all about voice search, but visual search is adding a helpful new dimension. A lot of the time, a visual search just makes much more sense than a spoken one. And sometimes, it’s the other way around. That’s why neither voice nor visual will ever be the one default search experience: they build on each other’s strengths and make up for each other’s weaknesses. Combined with good old text search, you have every possibility to search the way you want!

Now… where’s that mindreader?