Have you heard conflicting stories about the usefulness of PPC automation tools? You’re not alone! On one side you have Google telling you that automations like responsive search ads (RSAs) and Smart Bidding will help you get better results and should be turned on without delay. On the other side you get expert practitioners saying RSAs are bad for conversion rates and Smart Bidding delivers mixed results and should be approached with caution. So how do you decide when PPC automation is right for you?

I’d love to give you the definitive answer, but the reality is that it depends, because Google and practitioners are both right! Neither would win any long-term fans by lying about results, so each side’s argument is based on performance from different accounts with different levels of optimization.

In this post, I’ll tackle one way to measure whether RSAs help or hurt your account. I won’t say whether RSAs are good or bad, because the answer depends on your implementation. My goal is to give you a better way to come to your own conclusion about how to get the most out of this automated PPC capability.

To optimize automated features, we need to understand how to better analyze their performance so that we can fix whatever may be causing them to underperform in certain scenarios. In our effort to make the most out of RSAs, we’re going to have to play the role of PPC doctor, one of the three roles humans will increasingly play in an automated PPC world.

To make this analysis as easy as possible, I’ll share an automation layering technique you can use in your own account right away. The script at the end of this post will help you automatically monitor RSA performance down to the query level and give ideas for how to optimize your account.

The right way to test RSAs is with Campaign Experiments

The best way to come to your own conclusions about the effect of an automation like RSAs is to test them with a Campaign Experiment, a feature available in both Google and Microsoft ad platforms.

With an experiment, you can run two variations of a campaign: the control, with only expanded text ads (ETAs), and the experiment, with responsive search ads added in as well.

When the experiment concludes, you’ll see whether adding RSAs had a positive impact or not. When measuring the results, remember to focus on key business metrics like overall conversions and profitability. Metrics like CTR are much less useful, and Google is sometimes at fault for touting an automation’s benefits in terms of this metric, which matters in the PPC world but not so much in a corporate boardroom.

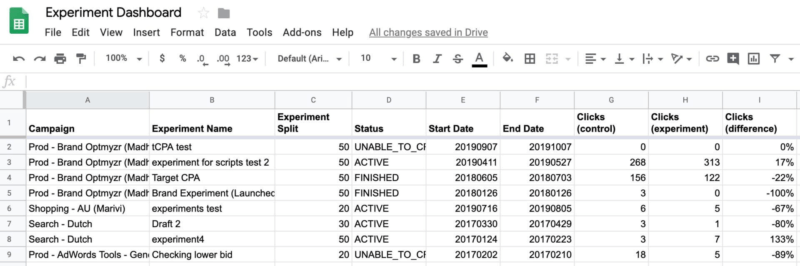

As an aside, if you need a quicker way to monitor experiments, take a look at a recent script I shared that puts all your experiments from your entire MCC on a single Google spreadsheet where you can quickly see metrics, and know when one of your experiments has produced a statistically valid answer.

There is, however, a problem with this type of RSA experiment: it only tells you a campaign-level result. If the campaign with RSAs produced more conversions than the campaign without, you will continue with RSAs but may miss the fact that in some ad groups, RSAs were actually detrimental.

Or if the experiment with RSAs loses, you may decide they are bad and stop using them, when they possibly drove some big gains in a limited set of cases. We could look deeper into the data and discover some nuggets of gold that would help us optimize our accounts further, even if the answer isn’t to deploy RSAs everywhere.

It’s the query, stupid

As much time as we spend measuring and reporting results at aggregate levels, when it comes time to optimize an account, we have to go granular. After all, when you find a campaign that underperforms, fixing it requires going deeper into the settings, the messaging (ads) or the targeting (keywords, audiences, placements).

But ever since the introduction of close variants made exact match keywords no longer exact, you should go one level deeper and analyze queries. For example, when you see a campaign’s performance gets worse with RSAs, is that because RSAs are worse than ETAs? Or could it be that the addition of RSAs changed the query mix and that is the real reason for the change in performance?

Here’s the thing that makes PPC so challenging (but also kind of fun). When you change anything, you’re probably changing the auctions (and queries) in which your ads participate. A change in the auctions in which your ad participates is also referred to as a query mix change. When you analyze performance at an aggregate level, you may be forgetting about the query mix, and you may not be doing an apples-to-apples comparison.

The query mix changes in three ways:

- Old queries that are still triggering your ads now

- New queries that previously didn’t trigger your ads

- Old queries that stopped triggering your ads

Only the first bucket is close to an apples-to-apples comparison. With the second bucket, you’ve introduced oranges to the analysis. And the third bucket represents apples (good, bad, or both) you threw away.
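To make the three buckets concrete, here is a minimal sketch in plain JavaScript. It assumes you already have two lists of search terms, one from before the change and one from after. The function name `classifyQueryMix` and the sample queries are hypothetical and are not part of the script shared in this post.

```javascript
// Hypothetical helper: split search terms into the three query-mix buckets.
// `before` and `after` are arrays of search terms seen in each period.
function classifyQueryMix(before, after) {
  const beforeSet = new Set(before);
  const afterSet = new Set(after);
  return {
    // Old queries that still trigger your ads (the apples-to-apples bucket).
    retained: [...afterSet].filter((q) => beforeSet.has(q)),
    // New queries that previously didn't trigger your ads.
    added: [...afterSet].filter((q) => !beforeSet.has(q)),
    // Old queries that stopped triggering your ads.
    dropped: [...beforeSet].filter((q) => !afterSet.has(q)),
  };
}

// Made-up sample data, for illustration only.
const queryMix = classifyQueryMix(
  ['red shoes', 'blue shoes', 'cheap shoes'],
  ['red shoes', 'blue shoes', 'shoe repair']
);
console.log(queryMix);
```

Comparing performance only within the `retained` bucket keeps the comparison close to apples-to-apples, while `added` and `dropped` can be reviewed separately.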

Query mix analysis explains why results changed

Analysis at the query level is helpful because it explains the ‘why’ rather than just the ‘what’: why did performance change, not merely what changed? Once you understand the ‘why’, you can take corrective action, for example by adding negative keywords if new queries are delivering poor performance.

For an RSA query analysis, what you want to see is a query-level report with performance metrics for RSAs and ETAs. Then you can see if a query is new for the account. New queries may perform differently than old queries, so they should be analyzed independently. The idea is that an expensive new query that didn’t trigger ads before may still be worth keeping because it brings new conversions we otherwise would have missed.

With the analysis that the script below does, you will also see which queries are losing conversions because of a poorly written RSA. Many ad groups have too little data for Google to show the RSA strength indicator, so having a different way to analyze performance with this script can prove helpful.

Without automation, this analysis is difficult and time-consuming, so it probably won’t be done on a routine basis. Google’s own interface is simply not built for it. The script automates combining a query report with an ad report and calculates how much impact RSAs had. I wrote about this methodology before. But now I’m sharing a script so you can add this methodology to your automation toolkit.

ETA vs RSA Query Analysis Script

The script will output a Google Sheet like this:

Caption: The Google Ads script produces a new spreadsheet with detailed data about how each query performs with the different ad formats in each ad group.

Each search term for every ad group is on a separate row. For each row, we summed the performance for all ETAs and RSAs for that query in that ad group. We then show the ‘incrementality’ of RSAs in red (worse) or green (better).
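The row-building step described above can be sketched roughly as follows. This is not the code from the script itself. It is a simplified illustration that assumes the input is an array of plain objects with hypothetical field names (`adGroup`, `query`, `adType`, `impressions`, `clicks`, `conversions`, `cost`), one per ad and search term.

```javascript
// Hypothetical sketch: sum ETA and RSA performance per ad group + search term.
function sumByQueryAndFormat(reportRows) {
  const emptyTotals = () => ({ impressions: 0, clicks: 0, conversions: 0, cost: 0 });
  const byKey = new Map();
  for (const row of reportRows) {
    // Only the two ad formats compared in this post are counted.
    if (row.adType !== 'ETA' && row.adType !== 'RSA') continue;
    const key = row.adGroup + '\u0000' + row.query;
    if (!byKey.has(key)) {
      byKey.set(key, {
        adGroup: row.adGroup,
        query: row.query,
        ETA: emptyTotals(),
        RSA: emptyTotals(),
      });
    }
    const totals = byKey.get(key)[row.adType];
    totals.impressions += row.impressions;
    totals.clicks += row.clicks;
    totals.conversions += row.conversions;
    totals.cost += row.cost;
  }
  // One output row per ad group + search term combination.
  return [...byKey.values()];
}

// Made-up sample data, for illustration only.
const summedRows = sumByQueryAndFormat([
  { adGroup: 'Shoes', query: 'red shoes', adType: 'ETA', impressions: 100, clicks: 5, conversions: 1, cost: 2 },
  { adGroup: 'Shoes', query: 'red shoes', adType: 'ETA', impressions: 50, clicks: 3, conversions: 0, cost: 3 },
  { adGroup: 'Shoes', query: 'red shoes', adType: 'RSA', impressions: 80, clicks: 6, conversions: 2, cost: 4 },
]);
console.log(JSON.stringify(summedRows, null, 2));
```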

When the report is finished, you’ll get an email with a link to the Google Sheet and a summary of how RSAs are helping or hurting your account.

The recommendation is one of four things:

- If the ad group has no RSAs, it recommends testing RSAs

- If the ad group has no ETAs, it recommends testing ETAs

- If the RSA is converting worse, it suggests moving the query into a SKAG with the existing ETA and testing some new RSA variations

- If the RSA is converting better, it suggests moving the query into a SKAG with the existing RSA and testing some new ETA variations

You don’t have to follow this exact suggestion. It’s more of a way to get an idea of the four possible situations a query could be in.
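The four situations can be expressed as a small decision function. The sketch below is a guess at the logic, not the script’s actual code. It assumes that "no RSAs" or "no ETAs" can be approximated by zero impressions for that format, that "converting worse" means fewer conversions per impression, and that ties fall into the last branch. The function name `recommendAction` is hypothetical.

```javascript
// Hypothetical sketch of the four recommendations.
// `eta` and `rsa` hold summed { impressions, conversions } for one query in one ad group.
function recommendAction(eta, rsa) {
  // Assumption: zero impressions stands in for "the ad group has no ads of this format".
  if (rsa.impressions === 0) return 'Test RSAs';
  if (eta.impressions === 0) return 'Test ETAs';

  // Assumption: compare conversions per impression between the two formats.
  const etaConvPerImpression = eta.conversions / eta.impressions;
  const rsaConvPerImpression = rsa.conversions / rsa.impressions;

  if (rsaConvPerImpression < etaConvPerImpression) {
    return 'Move query into a SKAG with the existing ETA and test new RSA variations';
  }
  // Ties land here as a simplification.
  return 'Move query into a SKAG with the existing RSA and test new ETA variations';
}

console.log(recommendAction({ impressions: 1000, conversions: 10 }, { impressions: 0, conversions: 0 }));
console.log(recommendAction({ impressions: 1000, conversions: 10 }, { impressions: 500, conversions: 2 }));
```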

My hope is that this proves to be an interesting new report that helps you understand ad type performance at a deeper level and gives you a jumping off point for a new type of optimization.

To try the script (code at end of article), simply copy and paste the full code into a single Google Ads account (it won’t work in an MCC account) and review the four simple settings for date range, email addresses, and campaign inclusions and exclusions.

Caveats

This script’s purpose is to populate a spreadsheet with all the data. It doesn’t filter for items with enough data to make smart decisions. How you filter things is entirely up to you. For example, I would not base a decision about removing an RSA on a query with just 5 impressions. You can add your own filters to the overall data set to help you narrow things down to the highest priority fixes for your own account.

I could have added these filtering capabilities in the script code, but I felt that most advertisers are more comfortable tweaking settings in spreadsheets than in JavaScript. So you have all the data, and how you filter it is up to you. 🙂
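For readers who do prefer code, a minimum-data filter could look something like the sketch below. It is not part of the script. The row shape (`ETA` and `RSA` objects with an `impressions` field), the function name `filterByMinImpressions`, and the threshold of 100 are all assumptions chosen for illustration.

```javascript
// Hypothetical sketch: keep only rows where both ad formats have enough impressions.
function filterByMinImpressions(rows, minImpressions) {
  return rows.filter(
    (row) =>
      row.ETA.impressions >= minImpressions &&
      row.RSA.impressions >= minImpressions
  );
}

// Made-up sample data, for illustration only.
const sampleRows = [
  { query: 'red shoes', ETA: { impressions: 1200 }, RSA: { impressions: 800 } },
  { query: 'shoe repair', ETA: { impressions: 5 }, RSA: { impressions: 300 } },
];

// The threshold of 100 is arbitrary; pick one that fits your own account.
const rowsWithEnoughData = filterByMinImpressions(sampleRows, 100);
console.log(rowsWithEnoughData.map((row) => row.query));
```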

Methodology for calculating incrementality

The script itself is pretty straightforward but you may be curious about how we calculate the ‘incrementality’ of RSAs. Here’s what we do if a query gets impressions with both ad formats.

We assume that additional impressions with a particular ad format will deliver performance at the same level of existing impressions with that ad format.

We calculate the difference in conversions per impression, CTR and CPC between RSAs and ETAs for every row on the sheet.

We apply the difference in each of the three metrics above to the impressions for the query.

Caption: Each row of the spreadsheet lists how much RSAs helped or hurt the ads shown for each query in the ad group. Based on this, advertisers can make decisions to restructure accounts to get the right query with the right ad in the right place.

That allows us to see how much clicks, conversions and cost would have changed had we not had the other ad format.
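One possible reading of these steps is sketched below. It is an interpretation and not the script’s actual code. It assumes the differences are taken as RSA minus ETA and applied to the RSA impressions, so the result estimates what changed because those impressions were served by RSAs instead of ETAs. Because a CPC difference cannot be multiplied by impressions directly, the cost figure here is the RSA cost minus what ETAs would have cost at their own CTR and CPC, which is a further assumption. The name `estimateRsaIncrementality` is hypothetical.

```javascript
// Hypothetical sketch of the incrementality calculation for one query in one ad group.
// `eta` and `rsa` hold summed { impressions, clicks, conversions, cost }.
function estimateRsaIncrementality(eta, rsa) {
  // The method only applies when the query had impressions with both formats.
  if (eta.impressions === 0 || rsa.impressions === 0) return null;

  const etaCtr = eta.clicks / eta.impressions;
  const rsaCtr = rsa.clicks / rsa.impressions;
  const etaConvPerImpression = eta.conversions / eta.impressions;
  const rsaConvPerImpression = rsa.conversions / rsa.impressions;
  const etaCpc = eta.clicks > 0 ? eta.cost / eta.clicks : 0;

  // Assumption: apply each difference (RSA minus ETA) to the RSA impressions.
  const incrementalClicks = (rsaCtr - etaCtr) * rsa.impressions;
  const incrementalConversions =
    (rsaConvPerImpression - etaConvPerImpression) * rsa.impressions;

  // Assumption: cost the ETAs would have incurred on those same impressions.
  const counterfactualEtaCost = rsa.impressions * etaCtr * etaCpc;
  const incrementalCost = rsa.cost - counterfactualEtaCost;

  return { incrementalClicks, incrementalConversions, incrementalCost };
}

// Made-up sample data, for illustration only.
const incrementality = estimateRsaIncrementality(
  { impressions: 1000, clicks: 50, conversions: 5, cost: 100 },
  { impressions: 500, clicks: 40, conversions: 2, cost: 60 }
);
console.log(incrementality);
```

In this made-up example the RSA earns more clicks than the ETA would have on the same impressions but fewer conversions, which is the kind of row the sheet would flag for a closer look.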

Conclusion

Advertisers should not make decisions with incomplete data. Automation is here to stay, so we need to figure out how to make the most of it, and that means we need tools to answer important questions like ‘how do we make RSAs work for our account?’ Scripts are a great option for automating complex reports you want to use frequently, so I hope this new one helps. Tweet me with ideas for new scripts or ideas for how to make this one better.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.